What if a robot could truly see—not just detect obstacles, but understand a room, track motion, and even read your facial expression? For decades, humanoid robots have moved through environments guided by scripted paths and blind sensors. But that gap between sensing and perceiving is beginning to close.

Thanks to AI-powered eyes, robots are starting to see in a way that’s not just functional but intelligent. The difference isn’t just upgraded hardware; it’s the software that interprets the world around them. This evolution pushes robots out of the realm of programmable puppets and into something far more dynamic.

The Fusion of Sight and Intelligence

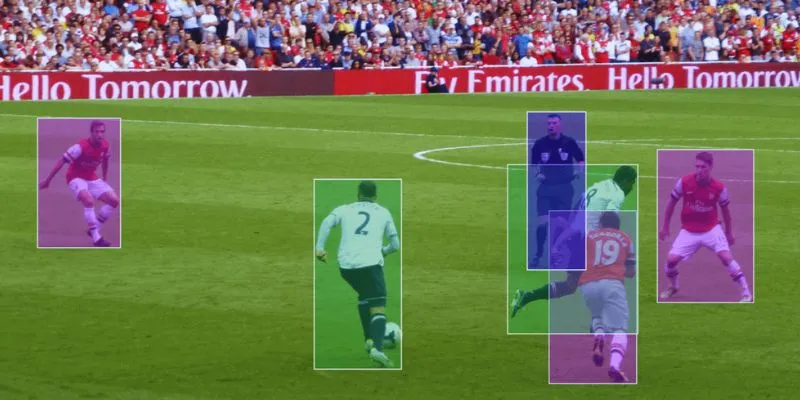

For a robot to “see,” two things need to happen: capture and comprehension. Until recently, robots relied on simple cameras or depth sensors that detected distance or basic motion. It was the visual equivalent of tunnel vision. Now, with AI-powered eyes, a humanoid robot doesn’t just gather pixels—it interprets them. These systems use advanced computer vision models trained on millions of images and real-world environments. The result is real-time recognition of objects, gestures, and faces, layered with an understanding of context.

Consider how you recognize a chair. You don’t just see four legs and a flat surface—you know it’s for sitting, you intuit where its space begins and ends, and you avoid it without conscious thought. That nuance is what artificial intelligence is now bringing to robots. AI-powered eyes use neural networks to process input in stages: detecting shape, categorizing it, and connecting that information with action. These models aren’t just classifying items like a database—they’re learning patterns, adapting to lighting conditions, and improving with feedback.

Some robots now come equipped with eye-like cameras that mimic human depth perception through the use of stereo vision or LiDAR fusion. AI then parses this information to navigate rooms, follow moving people, and avoid collisions—all without remote control. The key is not just identifying objects but reacting to them with purpose.
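
The stereo-vision idea mentioned above can be sketched in a few lines. This is a minimal illustration of the standard pinhole stereo relation (depth = focal length × baseline / disparity), not the method of any particular robot. The function name and the camera numbers (700 px focal length, 6 cm between lenses) are hypothetical values chosen for the example.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Pinhole stereo model: depth Z = f * B / d.

    focal_px     -- focal length in pixels
    baseline_m   -- distance between the two camera centers, in meters
    disparity_px -- horizontal shift of the same point between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is at infinity")
    return focal_px * baseline_m / disparity_px


# Hypothetical camera: 700 px focal length, lenses 6 cm apart (assumed values)
for d in (70.0, 35.0, 7.0):
    z = depth_from_disparity(700.0, 0.06, d)
    print(f"disparity {d:5.1f} px -> depth {z:.2f} m")
```

The smaller the shift between the two images, the farther away the object is, which is why depth estimates from stereo get noisier at long range.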

Real-Time Perception That Evolves

Where older robots paused between movements, waiting for commands or input updates, new models operate in fluid, real-time motion. AI-powered vision systems enable humanoid robots to predict the trajectory of a thrown ball or interpret a hand wave as a greeting, rather than dismissing it as background motion. These advances are powered by convolutional neural networks (CNNs) and transformers, model architectures that can process large streams of camera data and pick out what matters.
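
To make the thrown-ball example concrete, here is a rough sketch of the physics step that would sit downstream of the vision model. It assumes the vision system has already produced two position estimates of the ball, and that the ball follows drag-free ballistic motion over flat ground. `predict_landing` and the sample numbers are illustrative assumptions, not a real robot API.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def predict_landing(p0, p1, dt):
    """Estimate where a ball lands from two (x, height) observations dt seconds apart.

    Assumes drag-free ballistic motion and flat ground at height 0.
    Returns (landing_x, seconds_until_landing), timed from the second observation.
    """
    (x0, y0), (x1, y1) = p0, p1
    vx = (x1 - x0) / dt
    # Average vertical speed over the interval, shifted to its value at the second sample
    vy = (y1 - y0) / dt - 0.5 * G * dt
    # Positive root of y1 + vy*t - 0.5*G*t^2 = 0
    t_land = (vy + math.sqrt(vy * vy + 2.0 * G * y1)) / G
    return x1 + vx * t_land, t_land


# Two hypothetical detections, 0.1 s apart (x and height in meters)
landing_x, t = predict_landing((0.0, 1.50), (0.3, 1.85), 0.1)
print(f"ball lands about {landing_x:.2f} m away in {t:.2f} s")
```

A real system would fit many noisy detections rather than two clean ones, but the principle is the same: perception supplies positions, and a motion model turns them into a prediction.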

One of the most groundbreaking shifts is adaptability. These robotic eyes don’t just recognize a person once—they track changes over time. For example, a robot working in a warehouse can distinguish between a pallet, a moving worker, and a shadow. More impressively, it can update its path if the worker steps in front of it, instead of freezing in place. This kind of decision-making is where robotic perception meets autonomy.
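
The warehouse scenario can be illustrated with a toy replanning loop. The sketch below assumes the vision system has already turned camera input into a small grid of free (0) and blocked (1) cells. It uses plain breadth-first search as a stand-in for whatever planner a real robot runs, and for simplicity it replans from the starting cell rather than from the robot's current position.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are blocked."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk parent links back to the start, then reverse
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # no route exists


grid = [[0] * 5 for _ in range(3)]       # hypothetical 3x5 warehouse aisle
robot, goal = (1, 0), (1, 4)
path = shortest_path(grid, robot, goal)  # straight along the middle row

# A worker steps into cell (1, 2), which lies on the planned path
grid[1][2] = 1
if any(grid[r][c] for r, c in path):
    path = shortest_path(grid, robot, goal)  # replan around the new obstacle
print(path)
```

The important part is the check before the second call: the robot only replans when a newly observed obstacle actually intersects its route, instead of freezing or recomputing on every frame.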

Facial recognition also plays a role here, particularly in service robots and caregiving robots. An AI-powered humanoid robot can now not only identify who someone is but also estimate their apparent emotional state from facial cues. That capability changes how robots interact. If someone appears confused, the robot might offer assistance. If they look angry, it might avoid interaction entirely. The line between passive observation and social response is becoming thinner.

Applications in the Real World

The shift to AI-powered vision isn’t happening in isolation; it’s affecting sectors across the board. In manufacturing, humanoid robots equipped with these new eyes can inspect products for flaws that human inspectors easily miss. They can spot a misaligned label or a dent in metal quickly and consistently, without fatigue.

In healthcare, vision-enabled humanoids assist in rehabilitation, guiding patients through movements and ensuring proper posture in real-time. Some research even explores using this technology to aid in remote diagnostics by observing patient behavior or gait.

Retail is also leaning into this trend. Stores are deploying humanoid greeters that can scan crowds, detect when someone looks lost or frustrated, and offer help before they even ask. In airports, AI-powered robots have begun to guide travelers by interpreting signs of confusion and walking them to gates or check-in counters. These use cases go far beyond gimmicks—they solve real problems at scale.

In education, a robot with intelligent visual input can act as a tutor, recognizing when a child is struggling with a problem or losing focus. It can offer tailored responses or re-engage them, which is something traditional machines couldn’t do.

What’s Next for Robotic Vision?

While we’re seeing massive leaps, AI-powered eyes are still evolving. One major hurdle is context. A robot might recognize a cup on a table, but does it understand that spilling it could damage nearby electronics? Can it judge whether to pick it up, leave it, or warn a human? True visual intelligence will come when robots understand not just what things are, but what they mean in the moment.

Another frontier is collaborative perception, where multiple robots share what they see. In future smart environments, your household robot could communicate with your home security drone, cleaning bot, and wearable devices, each contributing to a shared visual map. This isn’t science fiction. Research groups and startups are already testing multi-agent systems with pooled sensory input.
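
One simple way to picture a shared map is as a merge of per-device occupancy estimates. The sketch below is an assumption-laden illustration, not a description of any deployed system. Each device reports, for the cells it can see, a probability that the cell is occupied, and the estimates are combined by summing log-odds. That treats the observations as independent with a neutral 50% prior. The device names and numbers are hypothetical.

```python
import math


def fuse_cell(probabilities):
    """Combine independent occupancy estimates for one cell by summing log-odds.

    Each probability must be strictly between 0 and 1.
    """
    log_odds = sum(math.log(p / (1.0 - p)) for p in probabilities)
    return 1.0 / (1.0 + math.exp(-log_odds))


def fuse_maps(maps):
    """Merge per-device maps (dict: cell -> occupancy probability) into one shared map."""
    shared = {}
    for cell in set().union(*maps):
        shared[cell] = fuse_cell([m[cell] for m in maps if cell in m])
    return shared


# Hypothetical observations from two devices with partly overlapping views
household_robot = {(0, 0): 0.8, (0, 1): 0.3}
security_drone = {(0, 0): 0.7, (1, 1): 0.9}

shared_map = fuse_maps([household_robot, security_drone])
for cell in sorted(shared_map):
    print(cell, round(shared_map[cell], 3))
```

Where two devices agree that a cell is probably occupied, the fused estimate is more confident than either one alone. Cells seen by only one device simply keep that device's estimate.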

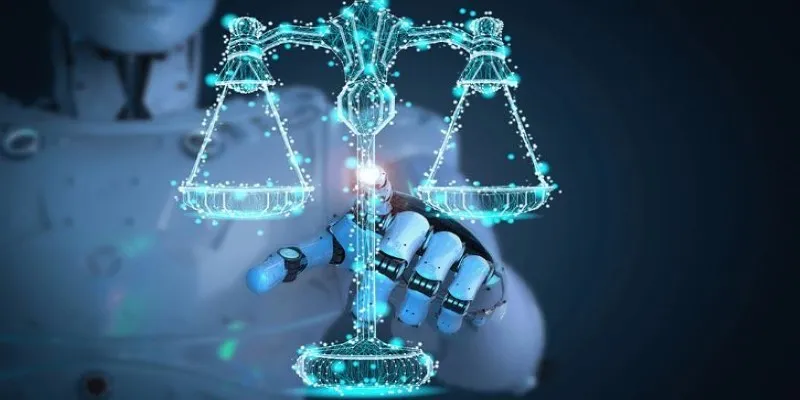

Privacy is a growing concern. As humanoid robots become more visually capable, ethical questions follow. Who owns the data they see? Should they remember faces or forget after completing a task? How can we prevent the misuse of recognition technology in everyday machines? Regulation hasn’t yet caught up, and pressure for it to do so will grow as these robots become mainstream.

Vision That Thinks—Not Just Sees

Robots with sight are no longer fiction; AI-powered vision is here. Machines now interpret scenes, learn, and adapt in real time, moving beyond simple reactions. This new generation sees and thinks, making decisions and improving as it works, much like humans do. We’re only starting to see its potential, but the ability of these robots to perceive and interact is set to transform how we live, work, and connect with technology in everyday environments.
