Agentic AI has begun reshaping the cyber threat landscape, introducing a level of autonomy previously seen only in skilled human attackers. These systems go beyond traditional automation by setting goals, adjusting strategies, and working around obstacles in real time. As a result, attacks are becoming more dynamic, more persistent, and harder to counter.

Unlike static malware or scripted bots, agentic AI can make its own decisions during an attack, constantly seeking new ways to reach its target. This shift has made defending digital assets more challenging, pushing organizations to rethink how they prepare for modern threats.

How Agentic AI Differs from Traditional Automation

For decades, cybersecurity teams have faced automated threats such as worms, phishing kits, and basic malware that follow linear, predictable patterns. These attacks, while damaging, typically rely on static scripts and can often be stopped once identified. Defenses like firewalls, patches, and rule-based intrusion detection remain effective against such tools because their behavior doesn’t change significantly after deployment.

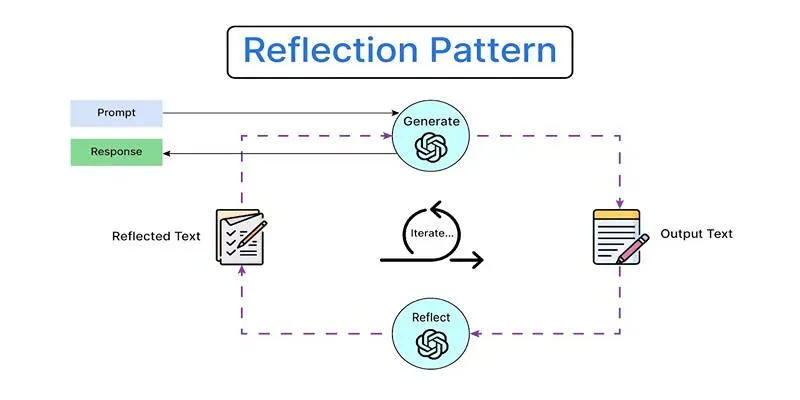

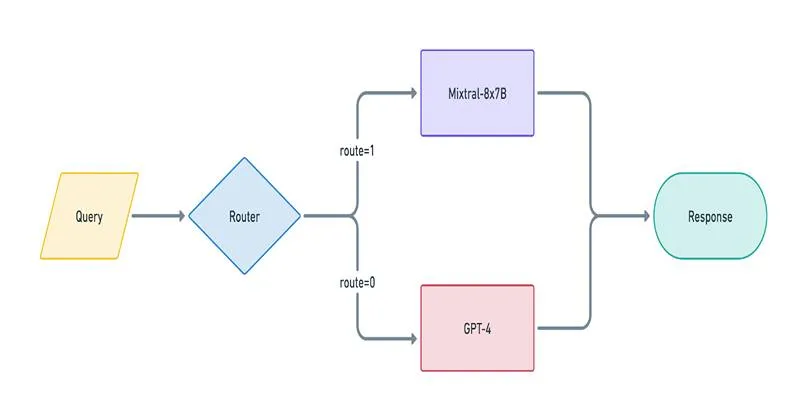

Agentic AI takes a much more flexible and independent approach. Built on techniques such as reinforcement learning and adaptive algorithms, these systems can pursue objectives while responding intelligently to resistance. They can reason through multiple steps, analyze the environment, and pivot to new tactics as obstacles arise, all without human oversight. This capacity to continuously recalibrate makes them more resilient and harder to disrupt.

Unlike a simple botnet, an agentic AI can probe a network, identify a blocked pathway, and seamlessly find another route in real time. It can generate phishing emails tailored to individual targets using natural language models and even alter its tone or content if its first attempts fail. Where older attacks often stop after being blocked, agentic AI learns from the defense and tries again, each time improving its odds of success.

Why Agentic AI Makes Cyberattacks More Sophisticated

Agentic AI allows attackers to craft operations that are faster, smarter, and more elusive than before. Traditional security solutions, which depend heavily on recognizing known signatures, are less effective against these evolving threats. Since agentic AI can generate unique attack patterns with every attempt, defenders have less time to recognize and respond.

One example is how agentic AI enables precision-targeted supply chain attacks. These systems can map out a network of vendors, identify weaker links, and exploit them to infiltrate a larger target. From there, they can move laterally, escalate privileges, and exfiltrate data while mimicking legitimate user activity to avoid detection. Their ability to operate at a pace and scale that humans cannot match gives them a significant advantage.

Social engineering has also become more convincing with agentic AI. Instead of sending out thousands of identical phishing emails, an agent can craft individualized messages that draw on publicly available and internal information about a specific recipient. It can even hold live interactions, adjusting its language to keep a victim engaged and lower their guard. This level of personalization and persistence can get past even well-trained, vigilant staff.

What sets agentic AI apart is not just its technical proficiency but its creativity. It can combine techniques — blending misinformation, malware, phishing, and privilege escalation — into seamless campaigns that feel organic rather than mechanical. The result is a more sophisticated, harder-to-trace attack that challenges conventional defense models.

Implications for Defenders

The arrival of agentic AI has exposed the limits of many established defensive practices. Relying on signature-based detection, static firewalls, or traditional endpoint protection no longer provides sufficient security against these adaptive adversaries. Instead, organizations are turning to behavioral analytics, anomaly detection, and predictive models that look for subtle, context-driven signs of malicious activity.
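To make the idea of behavioral analytics concrete, here is a minimal sketch of one of the simplest forms of anomaly detection: comparing an account's current activity with its own history. The function names, the sample counts, and the threshold of three standard deviations are illustrative assumptions, not a recommended production setting.

```python
from statistics import mean, stdev


def anomaly_score(baseline, observed):
    """Return how many standard deviations `observed` lies from the baseline mean."""
    if len(baseline) < 2:
        return 0.0  # not enough history to judge
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation at all is unusual.
        return 0.0 if observed == mu else float("inf")
    return abs(observed - mu) / sigma


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag activity that deviates from the baseline by more than `threshold` sigmas."""
    return anomaly_score(baseline, observed) > threshold


# Hypothetical daily counts of files accessed by one account.
history = [40, 42, 38, 41, 39, 43, 40]
print(is_anomalous(history, 44))   # slightly busy day
print(is_anomalous(history, 400))  # sudden bulk access
```

A real deployment would track many signals per user and device and would likely use more robust statistics, but the principle is the same: the question is whether behavior is unusual for this entity, not whether it matches a known signature.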

Defenders now have to recognize that attacks may unfold gradually, starting with seemingly minor probes or test intrusions. What appears to be a harmless login failure could in fact be an agentic AI mapping defenses and measuring response times before escalating its efforts.
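One way to surface this kind of gradual probing is to look at failed logins over a long window rather than a short burst. The sketch below assumes a hypothetical event format of `(timestamp, source, account, success)` and flags any source that fails against several distinct accounts within 24 hours, even when the attempts are hours apart. The window and account threshold are placeholder values.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def find_slow_probers(events, window=timedelta(hours=24), min_accounts=5):
    """Return sources with failed logins against at least `min_accounts`
    distinct accounts inside any sliding `window`."""
    failures = defaultdict(list)
    for ts, source, account, success in events:
        if not success:
            failures[source].append((ts, account))

    flagged = set()
    for source, items in failures.items():
        items.sort()  # order by timestamp
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            accounts = {acct for _, acct in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.add(source)
                break
    return flagged


t0 = datetime(2024, 1, 1)
# One failure every three hours, each against a different account.
events = [(t0 + timedelta(hours=3 * i), "203.0.113.7", f"user{i}", False)
          for i in range(5)]
# Three quick failures on a single account, e.g. a mistyped password.
events += [(t0 + timedelta(minutes=i), "198.51.100.2", "alice", False)
           for i in range(3)]
print(find_slow_probers(events))  # {'203.0.113.7'}
```

A burst-based rule would notice neither source here. Counting distinct accounts over a long window separates the patient prober from the user who simply mistyped a password.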

Coordination between security teams is more important than ever. Since these systems can launch multi-pronged campaigns across email, networks, endpoints, and cloud services, teams need to share intelligence quickly and eliminate silos. A delayed response can give the attacker time to adapt and advance undetected.

Some organizations are beginning to experiment with defensive AI systems that match the flexibility of agentic AI attackers. These defensive agents can patrol environments, identify anomalies in real time, and even deceive attackers by feeding them fake information or redirecting them into controlled spaces. This emerging arms race between offensive and defensive AI is likely to shape the next generation of cybersecurity strategies.
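The deception idea can be illustrated with a very small sketch. It assumes a set of decoy accounts that no legitimate user or service ever touches, so any attempt to use one is a high-confidence signal. The account names and the event fields are hypothetical.

```python
# Hypothetical decoy accounts: created only as bait, never used legitimately.
DECOY_ACCOUNTS = frozenset({"svc-backup-legacy", "admin-test"})


def check_decoy_use(event):
    """Return an alert dict if the event touches a decoy account, else None.

    `event` is assumed to be a dict with 'account' and 'source' keys.
    """
    account = event.get("account")
    if account in DECOY_ACCOUNTS:
        return {
            "severity": "high",
            "reason": "decoy account used",
            "account": account,
            "source": event.get("source"),
        }
    return None


print(check_decoy_use({"account": "alice", "source": "10.0.0.5"}))
print(check_decoy_use({"account": "admin-test", "source": "203.0.113.7"}))
```

Because decoys have no legitimate use, this check produces almost no false positives. That is what makes deception useful against an adversary that deliberately mimics normal user activity.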

Preparing for the Future

Agentic AI in cyberattacks raises the stakes for everyone, from large enterprises to individuals. Awareness and training remain foundational, helping people spot phishing attempts that are harder to detect. Strengthening identity verification, securing weak links in supply chains, and maintaining a clear view of system configurations can reduce the number of exploitable openings.

Investing in more intelligent monitoring tools is another priority. Platforms that can see across networks, endpoints, and cloud environments — and correlate behaviors in real time — are better positioned to detect advanced attacks early. At the same time, simplifying and hardening systems can limit the attacker’s ability to exploit complexity.
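As a rough sketch of what correlating behaviors can mean in practice, the example below combines weak signals from different telemetry sources into a single score per user. The source names, weights, one-hour window, and threshold are all illustrative assumptions, and a real platform would tune them against its own data.

```python
from collections import defaultdict
from datetime import datetime, timedelta

# Hypothetical weights: how much a single alert from each source contributes.
WEIGHTS = {"email": 2, "endpoint": 3, "cloud": 3, "network": 2}


def correlate(signals, window=timedelta(hours=1), threshold=7):
    """Group signals by entity and score the distinct sources seen within
    `window` of each signal. Return {entity: score} for entities whose
    score reaches `threshold`.

    `signals` is an iterable of (timestamp, source, entity) tuples.
    """
    by_entity = defaultdict(list)
    for ts, source, entity in signals:
        by_entity[entity].append((ts, source))

    incidents = {}
    for entity, items in by_entity.items():
        items.sort()  # order by timestamp
        for i, (start_ts, _) in enumerate(items):
            # Each source counts once, however many alerts it raised.
            sources = {src for ts, src in items[i:] if ts - start_ts <= window}
            score = sum(WEIGHTS.get(src, 1) for src in sources)
            if score >= threshold:
                incidents[entity] = score
                break
    return incidents


day = datetime(2024, 1, 1)
signals = [
    (day.replace(hour=9, minute=0), "email", "alice"),      # suspicious link clicked
    (day.replace(hour=9, minute=20), "endpoint", "alice"),  # unusual process started
    (day.replace(hour=9, minute=45), "cloud", "alice"),     # bulk download
    (day.replace(hour=9, minute=0), "email", "bob"),
    (day.replace(hour=15, minute=0), "cloud", "bob"),       # hours later, unrelated
]
print(correlate(signals))  # {'alice': 8}
```

None of the individual alerts for alice would justify an incident alone. Together, within one hour, they describe a plausible attack chain, which is the kind of pattern that siloed tools tend to miss.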

Policy and regulation will play a role as well. Setting clear standards for the development and use of AI, encouraging responsible practices, and facilitating collaboration between the private and public sectors can help reduce the risk of these tools being misused on a wide scale.

As agentic AI becomes a fixture in the attacker’s toolkit, defenders will have to adapt just as quickly. Keeping pace with its capabilities and understanding its weaknesses will be key to staying ahead of the threat.

Conclusion

Agentic AI has shifted the balance in cyberattacks by giving attackers systems that can reason, adapt, and persist much as human operators do. Its ability to personalize tactics, evade detection, and innovate on the fly has left many traditional defenses behind. Organizations that continue to rely on outdated tools or rigid practices risk falling victim to these increasingly smart and fast threats. Facing this new reality means adopting more flexible defenses, fostering collaboration among security teams, and preparing for an environment where machine-driven adversaries become the norm. Staying informed and proactive offers the best chance to defend against this new kind of opponent.
