At GTC 2025, Nvidia didn’t just focus on accelerating neural networks or scaling GPUs. The spotlight was on something more tangible: transforming your next hospital visit. While healthcare isn’t new to Nvidia, this year’s announcements were sharper and more physical. Medical scans are about to change dramatically. Robotic arms and AI-driven decision engines are moving from lab demos to practical tools that can read X-rays, guide ultrasound wands, and suggest next steps, all in real time.

Nvidia’s keynote provided healthcare workers and hospitals with insights into how it plans to combine its chips, software, and now even robotic partners to make diagnostics faster, more accurate, and less reliant on overworked radiologists. This isn’t just a rebranding of existing hardware. Nvidia is integrating AI with robotics to read, react, and move—right at the patient’s bedside.

From GPU to ICU: Why Healthcare Needs AI + Robotics

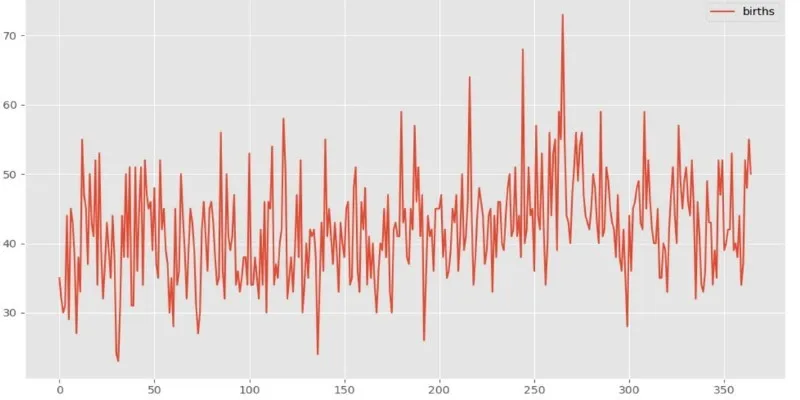

Hospitals are data-heavy environments, yet much of this data remains siloed and is often reviewed only after a crisis. Nvidia’s vision is to use AI to act on that data earlier, before problems escalate. Medical imaging (MRIs, CT scans, X-rays, and ultrasounds) produces vast amounts of visual data that often takes hours to interpret. With Nvidia’s new hardware-software stack, much of this interpretation could become instantaneous.

A key platform unveiled was an update to Clara Holoscan, Nvidia’s edge AI computing system tailored for surgical and diagnostic tools. The real shift wasn’t just in processing power but also in physical presence. Nvidia, in collaboration with robotic system manufacturers, demonstrated robot-guided scanning systems powered by Clara and Jetson. These units don’t replace human operators; they assist them by aligning scanners precisely and suggesting scan angles based on learned imaging patterns.

In essence, it’s not just about training AI models to interpret lung shadows. It’s about enabling AI and robots to assist in generating the scan more intelligently. Nvidia claims this approach reduces rescans, speeds up workflows, and equips rural hospitals with tools typically reserved for well-funded research clinics.

What Nvidia Is Actually Building (And Why It’s Not Hype)

GTC 2025 didn’t shy away from specifics. A standout demo featured an AI-guided robotic ultrasound platform. Nvidia’s Jetson Orin modules handled real-time video processing, comparing live feeds to thousands of anonymized scans and flagging anomalies during the scan. The system didn’t diagnose. Instead, it highlighted areas for radiologists to revisit, something they often wish they had more time to do.

Nvidia’s updated Clara Guardian suite, already in use for hospital monitoring and AI diagnostics, now extends into mobile robotics. Small autonomous carts equipped with sensors and Clara-based inference systems were shown scanning patient QR tags, confirming treatment routines, and relaying data to backend EMRs with minimal human input. This may resemble warehouse logistics, but in overburdened clinics where staff manage numerous cases per shift, automating routine checks can free staff to focus on critical patients.

Partnerships, Platforms, and Practical Obstacles

Even with the hardware ready, adoption doesn’t occur in isolation. Nvidia’s push includes partnerships with hospitals, med-tech firms, and academia. Collaborations with Siemens Healthineers and GE Healthcare are already underway. Some GTC panels featured physicians discussing how AI models can help flag mistakes or reduce false positives in time-sensitive diagnoses like strokes or internal bleeding.

Nevertheless, challenges persist. Regulatory compliance is a significant hurdle. Any device interacting physically with patients must meet stringent safety standards. AI systems that assist rather than replace human readers can be approved more swiftly but still require validation trials. Nvidia seems to favor a hybrid approach: human-in-the-loop systems that build trust gradually while increasing autonomy.

Infrastructure is another challenge. Not every hospital has the bandwidth or hardware to run advanced AI workloads locally. Nvidia’s response involves edge devices like Holoscan and Jetson, which don’t require a full data center but still offer robust inference capabilities. Even rural or mid-size clinics could deploy them, with models updating over time via secure cloud links.

A Future That Moves and Thinks at the Same Time

GTC 2025 highlighted that Nvidia is advancing beyond faster chips to systems capable of seeing, moving, and deciding together. Healthcare, with its overworked staff, rising demand, and costly delays, stands to benefit immensely. Nvidia aims to support, not replace, healthcare professionals with AI that spots missed strokes, robots that stabilize scanners, and workflows that react in real time. These powerful, practical tools are not just futuristic promises; they are available now. Whether they become as ubiquitous as a stethoscope will depend on regulation, affordability, infrastructure, and clinician trust. What’s evident is that AI in healthcare is moving from the back office into scan rooms, ERs, and even ambulances, working alongside people to deliver better, faster care precisely when and where it’s needed most.
