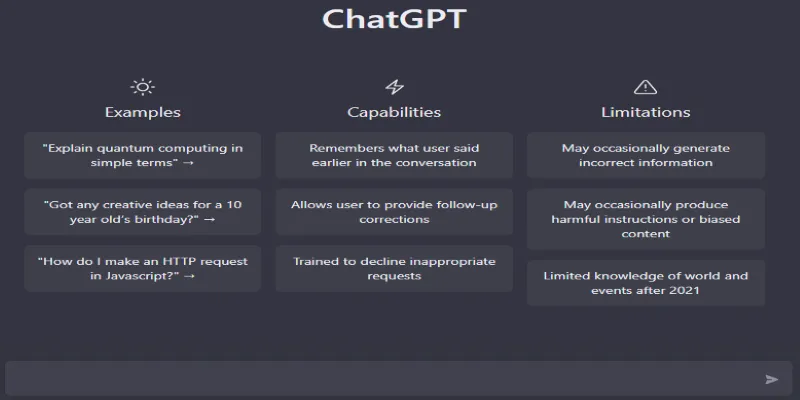

If you’ve ever asked ChatGPT a lengthy question and noticed its answer stop mid-thought, you’re not imagining it. There’s a very real reason this happens, and it has to do with how ChatGPT is built. Like any tool, it comes with boundaries: limits on how much text it can take in, how much it can produce in one reply, and how much it can process at once. But how do these limits actually work, and how do they affect what you see on your screen? Let’s break that down in simple terms.

Do ChatGPT Responses Have a Character or Word Limit?

It’s All About Tokens, Not Words

First things first: ChatGPT doesn’t measure text in words the way we do. It works with units called tokens. A token can be a whole word, part of a word, or even a punctuation mark. A short, common word like “cat” is typically a single token, while a longer word such as “running” might be split into pieces like “runn” and “ing.” Numbers, symbols, and dashes get broken into tokens too. As a rough rule of thumb from OpenAI, one token is about four characters of English text, or about three-quarters of a word, so the number of tokens is rarely the same as the number of words.
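To make the idea concrete, here is a toy tokenizer in Python. It is only an illustration, not how ChatGPT actually tokenizes text: real OpenAI models use byte-pair encoding with vocabularies of 100,000 or more pieces, while this sketch does greedy longest-match against a tiny, made-up vocabulary (TOY_VOCAB and toy_tokenize are hypothetical names). It shows how four words can turn into six tokens.

```python
# Toy subword tokenizer: greedy longest-match against a tiny, made-up vocabulary.
# Real GPT models use byte-pair encoding (BPE) with far larger vocabularies;
# this sketch only shows why token counts differ from word counts.

TOY_VOCAB = {"the", "cat", "is", "runn", "ing", "."}  # hypothetical vocabulary


def toy_tokenize(text: str) -> list[str]:
    tokens: list[str] = []
    for word in text.split():
        i = 0
        while i < len(word):
            # Try the longest vocabulary piece that starts at position i.
            for end in range(len(word), i, -1):
                if word[i:end] in TOY_VOCAB:
                    tokens.append(word[i:end])
                    i = end
                    break
            else:
                # No piece matched: fall back to a single-character token.
                tokens.append(word[i])
                i += 1
    return tokens


sentence = "the cat is running."
print(toy_tokenize(sentence))  # ['the', 'cat', 'is', 'runn', 'ing', '.']
print(len(sentence.split()), "words,", len(toy_tokenize(sentence)), "tokens")  # 4 words, 6 tokens
```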

So when someone says ChatGPT has a token limit, they usually mean its context window: the total number of tokens the model can work with at once, including your question, the model’s answer, and all the conversation that came before. As a conversation approaches that limit, there’s less room left for the reply, and the response can get cut short even if the model has more to say.

What’s the Actual Limit Then?

This depends on which version of ChatGPT you’re using. Newer versions can handle more tokens, which allows longer conversations and more detailed answers. But even the most advanced versions still have a cap. Think of it as a storage box with a fixed size: once it fills up, there’s no room for more.

Let’s say you’re using GPT-4 Turbo. Its context window holds up to 128,000 tokens. That’s a lot, roughly 300 pages of text. But this includes everything: your current question, all the back-and-forth history, and the answer it’s generating. If your conversation is short, most of that space is still free. If it’s long, the room left for a response starts shrinking.

Now, here’s the catch: ChatGPT usually stops well before reaching the full context window. That’s because the reply has its own, much smaller output limit, set separately from the context window. Through the API, for example, GPT-4 Turbo generates at most 4,096 tokens in a single response, even though it can read up to 128,000. The cap is a balance between giving you a timely answer and keeping things efficient on the backend.
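The arithmetic behind this is simple enough to sketch in Python. A reply can’t exceed the output cap, and it also can’t exceed whatever is left of the context window after your input and the conversation history. The 128,000 and 4,096 figures are GPT-4 Turbo’s API limits; other models, and the ChatGPT app itself, may use different numbers. The output_room helper is a hypothetical name for illustration.

```python
CONTEXT_WINDOW = 128_000  # GPT-4 Turbo: total tokens shared by input and output
MAX_OUTPUT = 4_096        # GPT-4 Turbo: cap on tokens generated per reply (API)


def output_room(input_tokens: int,
                context_window: int = CONTEXT_WINDOW,
                max_output: int = MAX_OUTPUT) -> int:
    """Tokens available for the reply, given how many the input already uses."""
    left_in_window = max(0, context_window - input_tokens)
    return min(max_output, left_in_window)


for used in (1_000, 100_000, 126_000, 128_000):
    print(f"{used:>7,} input tokens -> room for {output_room(used):,} output tokens")
```

Until the conversation nearly fills the window, the output cap is the limit that actually bites; only near the very end does the shrinking window take over.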

Do These Limits Change Over Time?

They do. Each new version of ChatGPT usually comes with upgrades: larger context windows, higher output limits, and faster responses. So something that felt like a constraint in an older version may be much less noticeable now.

That said, there’s always going to be a boundary. Even with 128,000 tokens, a long enough conversation will eventually outgrow the window, at which point older parts of it may be shortened or left out of what the model sees. The reply then has to fit in whatever room remains. All of this is worked out again every time you hit “send.”
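OpenAI doesn’t publish exactly how ChatGPT handles a conversation that outgrows its window, so the Python below is only one plausible strategy, offered as an assumption: keep the system instructions, then drop the oldest messages until the rest fits the token budget. The message format and the precomputed tokens field are hypothetical; a real application would count tokens with the model’s own tokenizer.

```python
from typing import TypedDict


class Message(TypedDict):
    role: str    # "system", "user", or "assistant"
    text: str
    tokens: int  # hypothetical precomputed token count for this message


def trim_history(messages: list[Message], budget: int) -> list[Message]:
    """Drop the oldest non-system messages until the total fits in `budget`."""
    kept = list(messages)
    while sum(m["tokens"] for m in kept) > budget:
        # Index of the oldest message that isn't a system instruction, if any.
        oldest = next((i for i, m in enumerate(kept) if m["role"] != "system"), None)
        if oldest is None:
            break  # only system messages remain; nothing more can be dropped
        del kept[oldest]
    return kept


history: list[Message] = [
    {"role": "system", "text": "You are a helpful assistant.", "tokens": 50},
    {"role": "user", "text": "First question", "tokens": 400},
    {"role": "assistant", "text": "First answer", "tokens": 600},
    {"role": "user", "text": "Follow-up question", "tokens": 300},
]
print([m["role"] for m in trim_history(history, budget=1_000)])  # ['system', 'assistant', 'user']
```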

Can You Control the Limit Yourself?

Not directly, but there are a few ways to guide how long or short the answer should be. For instance, you can say “explain briefly” or “give a detailed explanation,” and it’ll do its best to match. If you’re using the API (the interface developers use to build ChatGPT’s models into their own apps), you can set a max_tokens value that caps how many tokens the response can contain.
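Here is a minimal Python sketch of what that looks like in a request body for OpenAI’s Chat Completions endpoint (https://api.openai.com/v1/chat/completions). It only builds the JSON and doesn’t send anything. The model name and the 150-token cap are example values, build_chat_request is a hypothetical helper, and some newer models expect max_completion_tokens instead of max_tokens, so check the documentation for the model you use.

```python
import json


def build_chat_request(question: str, max_tokens: int, model: str = "gpt-4-turbo") -> str:
    """Build a Chat Completions request body that caps the reply length."""
    payload = {
        "model": model,  # example model name
        "messages": [{"role": "user", "content": question}],
        "max_tokens": max_tokens,  # upper bound on tokens generated for the reply
    }
    return json.dumps(payload, indent=2)


print(build_chat_request("Explain tokens briefly.", max_tokens=150))
```

The prompt wording (“explain briefly”) shapes how the model writes its answer, while max_tokens only sets a hard ceiling on how long that answer can get.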

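That said, even this isn’t precise. Since tokens vary in size, a limit of 100 tokens won’t give you exactly 100 words; for English text it’s usually closer to 75. You get a rough handle on length, not an exact word count. Also keep in mind that max_tokens is a ceiling, not a target: if the reply reaches it, the text is simply cut off rather than wrapped up neatly.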
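A quick way to translate between the two is OpenAI’s rule of thumb that one token is roughly three-quarters of an English word. The Python helpers below (hypothetical names) apply that ratio. Treat the results as estimates, since code, other languages, and unusual words can tokenize very differently.

```python
WORDS_PER_TOKEN = 0.75  # rule of thumb for typical English text, not an exact ratio


def approx_words(tokens: int) -> int:
    """Rough number of English words that fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)


def approx_tokens(words: int) -> int:
    """Rough number of tokens needed for a given number of English words."""
    return round(words / WORDS_PER_TOKEN)


print(approx_words(100))      # 75
print(approx_words(128_000))  # 96000
print(approx_tokens(1_500))   # 2000
```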

Why Some Responses Just Stop

It’s a little frustrating, right? You ask a long, thoughtful question, and the model gives you only half an answer. No warning, no “to be continued.” It just stops. That’s usually a sign it hit its output token cap: even if it wasn’t done explaining, it had to stop.
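In the API, the stop isn’t actually silent. Each choice in a Chat Completions response carries a finish_reason field: "stop" means the model finished on its own, while "length" means it ran into the token limit. The Python sketch below checks that field. The sample response is hand-written and trimmed to the relevant fields, and was_cut_off is a hypothetical helper.

```python
import json

# Hand-written example of a Chat Completions response, trimmed to the relevant fields.
SAMPLE_RESPONSE = """
{
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "Tokens are small pieces of text that"},
      "finish_reason": "length"
    }
  ]
}
"""


def was_cut_off(response: dict) -> bool:
    """True if the first choice stopped because it hit the token limit."""
    return response["choices"][0]["finish_reason"] == "length"


print(was_cut_off(json.loads(SAMPLE_RESPONSE)))  # True
```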

The solution? Ask it to “continue” or “go on,” and it will usually pick up where it left off. In most cases nothing is lost; it just needs a nudge. Another trick is to break big questions into smaller parts. That gives each reply enough room to finish clearly without getting boxed in.
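If you’re working through the API, the same “continue” nudge can be automated. The Python sketch below keeps asking for more while the reply ends with finish_reason "length", then stitches the pieces together. The ask function is a stand-in for a real API call (make_stub and ask_until_done are hypothetical names), and max_rounds guards against looping forever.

```python
from typing import Callable

Reply = tuple[str, str]  # (reply text, finish_reason)
AskFn = Callable[[list[dict]], Reply]


def ask_until_done(ask: AskFn, question: str, max_rounds: int = 5) -> str:
    """Send `question`, then reply "continue" while the answer is cut off."""
    messages = [{"role": "user", "content": question}]
    parts: list[str] = []
    for _ in range(max_rounds):
        text, finish_reason = ask(messages)
        parts.append(text)
        messages.append({"role": "assistant", "content": text})
        if finish_reason != "length":
            break  # the model finished on its own
        messages.append({"role": "user", "content": "continue"})
    return "".join(parts)


def make_stub(chunks: list[str]) -> AskFn:
    """Hypothetical stand-in for a model whose answer needs several turns."""
    remaining = list(chunks)

    def ask(messages: list[dict]) -> Reply:
        text = remaining.pop(0)
        return text, ("length" if remaining else "stop")

    return ask


stub = make_stub(["Tokens are ", "pieces of ", "text."])
print(ask_until_done(stub, "What are tokens?"))  # Tokens are pieces of text.
```

With a real model the seam between pieces isn’t always clean, so it may repeat or rephrase a few words when it resumes.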

How These Limits Show Up in Real Use

Let’s look at some examples. Say you’re writing a blog post and ask ChatGPT to draft a full-length article. It starts great but stops halfway through a sentence. That’s the output token cap at work: the model didn’t run out of ideas, it just hit the limit. When you ask it to continue, it picks up without missing a beat, because the cut-off text is still part of the conversation it can see.

Another scenario: you ask for help with a complex problem, and the model gives you a short answer that barely scratches the surface. In this case the token limit usually isn’t the cause; the model may simply have judged a brief answer to be enough. If you reply asking for a deeper explanation, it usually opens up and offers more. Length depends partly on how much room is available and partly on how much detail the model thinks you want.

Wrapping It Up!

So, do ChatGPT responses have a character or word limit? Yes, but not in the way we usually think. It’s not a simple word count or a fixed length. It’s a flexible space measured in tokens, shaped by how long your conversation is and how detailed your question is. The model works inside that space, deciding what to say and when to stop.

When it cuts off, it’s not being rude or forgetful; it has simply hit its limit. A quick follow-up usually brings the rest. And as newer versions roll out, these limits keep stretching further, giving more room for better conversations. Think of it less like a wall and more like a sandbox: it’s big, but not endless. How much of it you use is up to you.
