It’s not often that the world’s top technology competitors sit at the same table, let alone collaborate on something this significant. But here we are. In a move sending ripples across the AI industry, OpenAI, Google, Microsoft, and Anthropic have joined forces, through an industry body called the Frontier Model Forum, around a shared mission: making artificial intelligence safe. Not just efficient or scalable, but safe in ways that can be measured, controlled, and held to clear guardrails. When companies that usually race each other to new frontiers start marching in step, it signals a new AI era, one where the stakes go beyond the technical and verge on the existential.

Why Are These Giants Collaborating Now?

The timing of this alliance isn’t random. AI is evolving at a pace that even the most capable policy groups and watchdog organizations struggle to match. In just the past two years, language models have leapt from generating basic responses to drafting legal memos, writing software, interpreting medical data, and guiding robotics. That growth is impressive, but it has also sparked unease. Left unchecked, these systems could be misused, misunderstood, or mishandled, with consequences that reach real people and institutions. That risk is no longer just theoretical.

So OpenAI, Google, Microsoft, and Anthropic have decided to act preemptively. These companies build some of the most advanced AI systems in the world. By uniting, they’re not just drafting a rulebook; they’re signaling that no single player should hold unchecked power over the development of transformative technologies. Each brings distinct strengths: OpenAI’s research-first philosophy, Google’s deep infrastructure, Microsoft’s cloud reach, and Anthropic’s focus on constitutional AI. Together, the consortium aims to create shared safety benchmarks, encourage open communication, and lead by example in scaling AI responsibly.

The formation of this partnership sends a powerful message to global regulators and policymakers: “We’re not waiting for laws to catch up. We’re taking the initiative now.” These companies understand that if they don’t help shape the future of AI safety, someone else will—possibly with less insight, less coordination, or less interest in global stability.

Core Goals of the Alliance

The alliance between OpenAI, Google, Microsoft, and Anthropic has clear, actionable goals. At its center is a push for what’s now being called frontier AI safety. “Frontier AI” here refers to the most advanced systems, those capable of actions or decisions that affect the world beyond software environments: tools that interact with real people, real institutions, and, sometimes, real consequences.

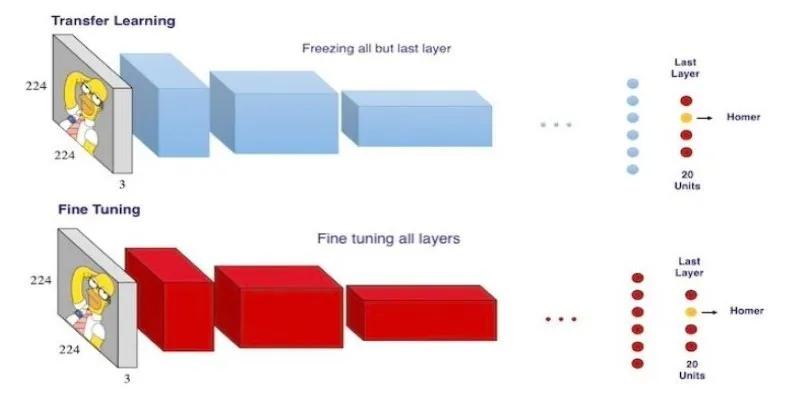

The tech giants are aligning on practices such as adversarial testing and red-teaming, along with designing systems that remain interpretable even at scale. One of the main challenges with current AI systems is the black-box problem: an AI does something impressive or unexpected, and nobody knows exactly why. That lack of visibility makes trust difficult. The coalition aims to address this by developing tools and benchmarks that reveal how models arrive at their outputs.

They’re also leaning heavily into third-party evaluations. Instead of saying, “Trust our results,” they’re opening the door to academic researchers, auditors, and independent labs that can assess claims, test limits, and report vulnerabilities. This transparency is rare in a space dominated by secrecy and intellectual property, but it’s necessary. The stakes are too high to operate behind closed doors.

The group is also focusing on information sharing. When one company discovers a vulnerability or a dangerous emergent behavior, the idea is that it shares the finding with the others. This creates a kind of early-warning system for AI issues, one that works across companies rather than in silos. For the first time, safety might scale with innovation rather than trail behind it.

Changing the Industry Dynamic

The partnership among these four players is more than a symbolic handshake; it changes how the AI race is run. Until now, AI development has resembled an arms race: who can build bigger models, faster infrastructure, and better data pipelines? With this alliance, safety is becoming a performance metric in its own right. If you can’t show that your model is secure, accountable, and controllable, you’re not just behind; you risk becoming irrelevant.

This puts pressure on smaller startups and AI labs to rethink their priorities. Cutting-edge performance is no longer enough; there is now a shared expectation of responsible scaling and safety testing. Because these standards come from the industry’s largest players, they are likely to become the norm. Investors are paying attention, too, and AI startups that build in risk mitigation and transparency from the start may find themselves better positioned for funding.

At the same time, the coalition raises a big question: What about open-source AI? Developers outside big platforms often lack resources for rigorous safety testing. If frontier models grow more powerful and only large players can safely audit them, we could see a divide between corporate and public innovation. Balancing openness with safety will continue to be a challenge.

Tech giants are also walking a fine line on antitrust. Cooperation on safety sounds noble—and in many ways, it is—but regulators will be watching. Too much consolidation, even for safety reasons, could hinder competition. For now, though, the alliance strikes the right tone: collaboration for stability, not dominance.

Future Implications for AI

This collaboration marks a turning point for artificial intelligence. In the past, industry standards often followed disruption: think of GDPR for privacy, or safety rules introduced after industrial accidents. This time, tech leaders are trying something different: building the safety net before the fall. That could change AI’s trajectory, with safe design built into products, releases, and roadmaps from the outset rather than added afterward.

The effect on users and developers could be significant. AI tools are becoming more embedded in daily life, from healthcare diagnostics and financial modeling to education and autonomous systems. A shared foundation for responsible design helps ensure that innovation doesn’t compromise reliability. As models grow more capable, the need for shared safety standards will only increase.

It also opens the door to international coordination. If OpenAI, Google, Microsoft, and Anthropic can work together in one of the most competitive sectors, then governments, civil society groups, and research labs might also collaborate to build a global safety ecosystem. That’s not just optimistic; it might be necessary.

Conclusion

AI is moving fast, but this moment shows that speed doesn’t have to come at the cost of responsibility. With OpenAI, Google, Microsoft, and Anthropic collaborating, there is a renewed sense of direction in a space that has often felt like a runaway train. This isn’t a cure-all—there will still be challenges, loopholes, and ethical gray areas. But it’s a serious start. When the giants begin walking the same path, the rest of the industry is more likely to follow. Safe AI isn’t a footnote anymore—it’s the roadmap.

For more on AI safety and developments, explore OpenAI’s blog or Google AI.
