Artificial Intelligence (AI) has evolved significantly, moving beyond text to handle diverse inputs such as images and audio. One approach at the forefront of this shift is Multimodal Retrieval-Augmented Generation (RAG), which lets an AI system accept both text and images, retrieve relevant information, and generate answers grounded in what it retrieved.

Thanks to Google’s Gemini models and the free tier of the Gemini API, developers now have no-cost access to powerful tools for building such systems. This post shows how to build a Multimodal RAG pipeline with the Gemini API and LangChain. By the end, you’ll understand the core concepts and how to implement your own image + text query system.

What is Multimodal RAG?

To understand Multimodal RAG, this post breaks it into two parts:

Retrieval-Augmented Generation (RAG)

RAG extends a language model by adding an external retrieval step to response generation. A model on its own can only draw on what it learned during training. RAG addresses this by searching a document collection for relevant passages at query time and adding them to the prompt, which makes answers more accurate and better grounded in the source material.
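To make the retrieve-then-augment idea concrete, here is a minimal sketch with no external dependencies. It is a toy: word-count vectors and cosine similarity stand in for learned embeddings, the three documents are made-up examples, and the `DOCS`, `retrieve`, and `build_prompt` names are illustrative rather than part of any library. The prompt it builds is what would then be sent to a language model.

```python
import math
import re
from collections import Counter

# Toy knowledge base (illustrative); a real pipeline would use document chunks.
DOCS = [
    "Bald eagles live near large lakes and rivers across North America.",
    "Emperor penguins breed on the Antarctic sea ice during winter.",
    "Hummingbirds can hover by beating their wings very rapidly.",
]

def bag_of_words(text):
    """Lowercased word counts: a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(question, docs, k=1):
    """Return the k documents most similar to the question."""
    q = bag_of_words(question)
    ranked = sorted(docs, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, docs):
    """Augment the question with retrieved context before calling a model."""
    context = "\n".join(retrieve(question, docs))
    return f"Context:\n{context}\n\nQuestion:\n{question}"

print(build_prompt("Where do eagles live?", DOCS))
```

Real embeddings capture meaning rather than shared words, but the flow (score, rank, insert into the prompt) is the same.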

Multimodal Systems

A multimodal system processes more than one type of input, such as images and text together. Combined with RAG, the result is a system that can take, say, an image and a question, search a knowledge base, and answer using the retrieved context.

Why Use Gemini?

Google’s Gemini models are part of Google’s generative AI offerings and support both text and vision tasks. The biggest advantage? The Gemini API has a free tier (subject to rate limits), which makes it a good fit for developers who want to build and prototype without any infrastructure investment.

Gemini provides:

- Text models for conversational and contextual understanding

- Vision models to analyze and interpret images

- Embeddings for converting text into searchable vector formats

Together, these let you build a Multimodal RAG prototype entirely within the free tier, something that until recently typically required paid API access.

Tools and Models Used

To build this system, you will use the following components:

- Google Gemini Pro: for text generation

- Gemini Pro Vision: for image understanding

- LangChain (plus the langchain-google-genai integration): to manage chains and prompts and connect them to Gemini

- FAISS: for similarity search via vector indexing

- Google Generative AI Embeddings: to embed text for retrieval

Step-by-Step Implementation

Step 1: Environment Setup

First, install the packages you’ll need:

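```
!pip install -U langchain langchain-community langchain-google-genai google-generativeai faiss-cpu
```

This installs LangChain and its community integrations (document loaders and the FAISS wrapper), the langchain-google-genai package that connects LangChain to Gemini, Google’s google-generativeai SDK, and FAISS itself. The leading ! runs the command from a Jupyter or Colab cell; omit it in a terminal.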

Step 2: Configure Gemini API

You’ll need an API key from Google AI Studio. Once you have it, configure the key like this:

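```python
import os

import google.generativeai as genai

api_key = "your_api_key_here"  # Replace with your actual key

# LangChain's Gemini integration reads the key from this environment variable
os.environ["GOOGLE_API_KEY"] = api_key

# The google-generativeai SDK is configured explicitly
genai.configure(api_key=api_key)
```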

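This gives you access to both the Gemini Pro text model and the Gemini Pro Vision model. Google retires older model versions over time, so if a model name used in this post is no longer available, substitute a current one listed in Google AI Studio.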
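Hard-coding the key works for a quick notebook, but it is easy to leak by accident (for example, by sharing the notebook). A small alternative, assuming you export `GOOGLE_API_KEY` in your shell before starting Python, reads the key from the environment and fails loudly if it is missing. `load_api_key` is a hypothetical helper, not part of the Gemini SDK.

```python
import os

def load_api_key(var_name="GOOGLE_API_KEY"):
    """Read the Gemini API key from an environment variable (hypothetical helper).

    Raises instead of silently sending an empty or placeholder key to the API.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"{var_name} is not set; export your Google AI Studio key first.")
    return key

# Usage, assuming you ran `export GOOGLE_API_KEY=...` beforehand:
# import google.generativeai as genai
# genai.configure(api_key=load_api_key())
```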

Step 3: Load and Process Text Data

Let’s assume you have a text file named bird_info.txt containing factual content about different birds.

We’ll load this file and break it into smaller parts for better retrieval.

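```python
from langchain_community.document_loaders import TextLoader
from langchain_core.documents import Document

loader = TextLoader("bird_info.txt")
raw_text = loader.load()[0].page_content

# Simple fixed-size character splitter with overlap, written by hand
# instead of using one of LangChain's built-in text splitters
def manual_chunk(text, size=120, overlap=30):
    if overlap >= size:
        # Otherwise `start` would never advance and the loop would not end
        raise ValueError("overlap must be smaller than size")
    segments = []
    start = 0
    while start < len(text):
        end = start + size
        chunk = text[start:end].strip()
        if chunk:  # skip whitespace-only fragments
            segments.append(Document(page_content=chunk))
        start += size - overlap  # step forward, keeping `overlap` characters of context
    return segments

documents = manual_chunk(raw_text)
```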

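This splits long content into overlapping chunks. Because consecutive chunks share some characters, text near a chunk boundary also appears in the neighboring chunk, so it is less likely to be cut off from its context when a chunk is retrieved.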
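The chunk offsets are easy to check by hand: with `size=120` and `overlap=30`, each chunk starts `120 - 30 = 90` characters after the previous one. The sketch below uses a hypothetical `chunk_spans` helper that computes only the `(start, end)` offsets. One design note: a loop that checks only `start < len(text)` also emits a final fragment (`text[270:300]` for a 300-character text) that is already contained in the previous chunk, so this version stops as soon as a chunk reaches the end.

```python
def chunk_spans(text_length, size=120, overlap=30):
    """Return (start, end) character offsets for fixed-size overlapping chunks.

    Consecutive chunks share `overlap` characters; the loop stops once a chunk
    reaches the end of the text, so no redundant trailing fragment is produced.
    """
    if not 0 <= overlap < size:
        raise ValueError("overlap must be in [0, size)")
    stride = size - overlap
    spans = []
    start = 0
    while start < text_length:
        end = min(start + size, text_length)
        spans.append((start, end))
        if end == text_length:
            break
        start += stride
    return spans

print(chunk_spans(300))
# [(0, 120), (90, 210), (180, 300)]
```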

Step 4: Generate and Store Embeddings

Now let’s convert those chunks into vector representations for similarity search.

```python
from langchain_community.vectorstores import FAISS
from langchain_google_genai import GoogleGenerativeAIEmbeddings

embedding_model = GoogleGenerativeAIEmbeddings(model="models/embedding-001")
index = FAISS.from_documents(documents, embedding=embedding_model)

# This retriever will help us find similar text chunks based on a query
text_retriever = index.as_retriever()
```

This process prepares the system to retrieve meaningful text snippets when the user asks a question.
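Under the hood, a similarity search ranks stored vectors by their distance to the query vector. LangChain's `FAISS` wrapper builds an exact Euclidean-distance (L2) index by default, and `as_retriever()` returns the top 4 matches unless you pass something like `search_kwargs={"k": 2}`. The brute-force sketch below ranks by the same distance using toy 3-dimensional vectors and a hypothetical `top_k_l2` helper; FAISS computes this far more efficiently on real, much higher-dimensional embeddings.

```python
import math

def top_k_l2(query, vectors, k=2):
    """Exact nearest-neighbor search by Euclidean (L2) distance, brute force.

    Computes the distance from the query to every stored vector and keeps
    the k closest, returned as (index, distance) pairs, closest first.
    """
    scored = [(i, math.dist(query, v)) for i, v in enumerate(vectors)]
    scored.sort(key=lambda pair: pair[1])
    return scored[:k]

# Toy 3-dimensional "embeddings" (illustrative values only)
stored = [
    [0.9, 0.1, 0.0],  # chunk 0
    [0.0, 1.0, 0.0],  # chunk 1
    [0.8, 0.2, 0.1],  # chunk 2
]
print([i for i, _ in top_k_l2([1.0, 0.0, 0.0], stored)])  # [0, 2]
```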

Step 5: Create the Prompt and RAG Chain

Now build the part that combines retrieved text with the user query and forwards it to the Gemini text model.

```python
from langchain.chains import RetrievalQA
from langchain_core.prompts import PromptTemplate
from langchain_google_genai import ChatGoogleGenerativeAI

prompt = PromptTemplate(
    input_variables=["context", "question"],
    template="""
Use the context below to answer the question.

Context:
{context}

Question:
{question}

Answer clearly and concisely.
""",
)

# RetrievalQA needs a LangChain model wrapper, not a raw genai.GenerativeModel
llm = ChatGoogleGenerativeAI(model="gemini-1.0-pro")

rag_chain = RetrievalQA.from_chain_type(
    llm=llm,
    retriever=text_retriever,
    chain_type_kwargs={"prompt": prompt},
)
```

This chain enables the system to return responses enriched with factual knowledge from the provided document.

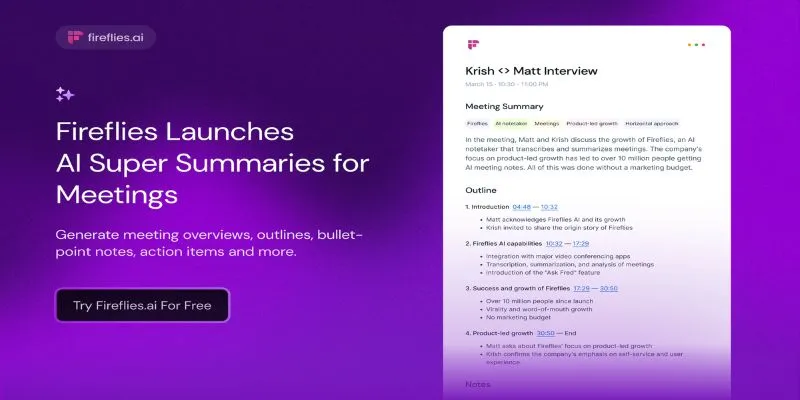
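With its default `"stuff"` chain type, `RetrievalQA` places all retrieved chunks into the single `{context}` slot of the prompt and makes one model call. The plain-Python approximation below shows that formatting step, assuming the chunks are joined with blank lines; the `stuff_prompt` helper and the sample chunks are hypothetical, and LangChain's own implementation differs in detail.

```python
# Same template wording as the PromptTemplate in Step 5, as a plain string.
TEMPLATE = """
Use the context below to answer the question.

Context:
{context}

Question:
{question}

Answer clearly and concisely.
"""

def stuff_prompt(question, retrieved_chunks, separator="\n\n"):
    """Join retrieved chunks into one context block and fill the template."""
    context = separator.join(retrieved_chunks)
    return TEMPLATE.format(context=context, question=question)

chunks = [
    "Bald eagles nest near lakes and rivers.",
    "They are found across North America.",
]
print(stuff_prompt("Where is this bird commonly found?", chunks))
```

Stuffing everything into one prompt is simple and needs a single model call, but it only works while the retrieved chunks fit in the model's context window.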

Step 6: Process Images Using Gemini Vision

To make the system multimodal, it needs to interpret images too. We’ll use Gemini Pro Vision for this.

```python
import google.generativeai as genai

def image_to_text(image_path, prompt):
    # Read the raw image bytes
    with open(image_path, "rb") as img_file:
        image_data = img_file.read()

    vision_model = genai.GenerativeModel("gemini-pro-vision")

    # google-generativeai accepts an inline image as a {"mime_type", "data"} dict
    # (this assumes JPEG input)
    response = vision_model.generate_content([
        {"mime_type": "image/jpeg", "data": image_data},
        prompt,
    ])
    return response.text
```

This function sends the image and accompanying prompt to Gemini’s vision model and returns a textual interpretation.
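The function above labels every image as `image/jpeg`. If you also pass PNG or other formats, one option is to guess the MIME type from the file extension with Python's standard `mimetypes` module. The `image_part` helper below is a hypothetical sketch that returns the inline-data dictionary form accepted by `generate_content`; it does not inspect the file contents, so a file with a misleading extension would still be mislabeled.

```python
import mimetypes

def image_part(image_path, image_bytes):
    """Build an inline image part, guessing the MIME type (hypothetical helper).

    The type comes from the file extension only; the bytes are not inspected.
    """
    mime_type, _ = mimetypes.guess_type(image_path)
    if mime_type is None or not mime_type.startswith("image/"):
        raise ValueError(f"Cannot infer an image type from {image_path!r} (got {mime_type})")
    # Inline-data dict form that google-generativeai's generate_content accepts
    return {"mime_type": mime_type, "data": image_bytes}

# Possible use inside image_to_text:
# response = vision_model.generate_content([image_part(image_path, image_data), prompt])
print(image_part("eagle.png", b"...")["mime_type"])  # image/png
```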

Step 7: Connect Image and Text Pipeline

Now, let’s create a function that combines everything—analyzing the image and generating a final answer using the RAG system.

```python
def multimodal_query(image_path, user_question):
    # Step 1: Describe the image
    visual_description = image_to_text(image_path, "What does this image represent?")

    # Step 2: Combine with the user query
    enriched_query = f"{user_question} (Image description: {visual_description})"

    # Step 3: Get an answer from RAG (RetrievalQA takes "query" and returns "result")
    result = rag_chain.invoke({"query": enriched_query})
    return result["result"]
```

To use it, run:

```python
response = multimodal_query("eagle.jpg", "Where is this bird commonly found?")
print(response)
```

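This call describes the image, appends that description to your question, retrieves matching passages from your knowledge base, and answers from them. Because retrieval runs on the combined text, the quality of the answer depends on how well Gemini describes the image.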
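Because both steps call the Gemini API, the glue logic is awkward to test on its own. One option, sketched here with a hypothetical `multimodal_query_with` variant and stand-in functions, is to pass the two model calls in as arguments. In real use you would pass `image_to_text` and a small function wrapping the RAG chain; the fakes below exist only for offline testing.

```python
def multimodal_query_with(describe_image, answer_question, image_path, user_question):
    """Same flow as multimodal_query, with both model calls injected.

    describe_image(image_path, prompt) -> str and answer_question(query) -> str
    are parameters, so this glue logic can run without network access.
    """
    visual_description = describe_image(image_path, "What does this image represent?")
    enriched_query = f"{user_question} (Image description: {visual_description})"
    return answer_question(enriched_query)

# Hypothetical stand-ins for image_to_text and the RAG chain
def fake_describe(path, prompt):
    return "A bald eagle perched on a branch."

def fake_answer(query):
    return f"ANSWER TO: {query}"

print(multimodal_query_with(fake_describe, fake_answer, "eagle.jpg", "Where is this bird commonly found?"))
# ANSWER TO: Where is this bird commonly found? (Image description: A bald eagle perched on a branch.)
```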

Key Concepts Behind the Build

Here are the foundational ideas that power this system:

- Text Embedding: Converts text into vectors so that passages with similar meaning land close together. This enables semantic similarity search, not just keyword matching.

- Document Chunking: Splitting large files into smaller passages lets retrieval return focused, relevant snippets instead of whole documents, and keeps the prompt short.

- Vision-to-Text: The vision model turns visual data into a text description, which lets images plug into a text-based retrieval system.

- Vector Search: FAISS stores high-dimensional embeddings and quickly finds the ones nearest to a query vector.

Conclusion

Multimodal RAG systems are a significant step forward in how intelligent tools are built. By combining image understanding with text-based retrieval, you can go beyond text-only chatbots toward assistants that reason about what users show them. With the free tier of Google’s Gemini API, developers, learners, and innovators can experiment with all of this at no cost. This guide covered the foundational steps for building a simple multimodal RAG pipeline.
