Many experts fear that AI will eventually surpass human intelligence and become the dominant, superior entity in the world. These fears may well be legitimate: if AI keeps evolving, it could learn and adapt beyond the capabilities of any other intelligent being. This is where Explainable AI (XAI) becomes crucial. XAI is the set of processes and methods that let users understand how machine learning algorithms arrive at their results.

Explainable AI bridges the gap between AI and humans, fostering trust in, and reliance on, AI systems. It helps people understand AI models, their expected results, and their potential biases. This understanding is vital for ensuring accuracy, transparency, and fairness in AI decision-making, especially when deploying AI models into production. Let’s dive deeper into explainable AI with this guide!

Understanding Explainable AI

Explainable AI helps organizations take a responsible approach to AI development. As AI grows more advanced, it becomes harder to understand how an algorithm reached a specific result. Such models are often called ‘black boxes’ because their inner workings are opaque: their logic is learned directly from the data they are trained on rather than written out step by step by a person.

Even the engineers and data scientists behind these algorithms can struggle to explain how the AI arrives at a particular outcome. Explainable AI addresses this by clarifying how specific outputs are produced, which helps developers confirm that their systems work as expected. This transparency is essential for meeting regulatory standards and for assessing the impact of AI decisions.

How Does Explainable AI Work?

Today’s AI models are built to process vast amounts of data; they are flexible but come with few built-in controls. They operate according to the rules encoded in their algorithms, so understanding their behavior calls for precise mathematical and logical reasoning from engineers. Explainable AI adds tools and techniques that explain individual model decisions, building transparency and trust into AI systems.

In essence, Explainable AI employs methods to trace decision-making processes within machine learning (ML), ensuring clarity and accountability.
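
To make ‘tracing’ concrete, here is a minimal Python sketch (an illustration, not a production tool) that attributes the output of a single ReLU neuron to its inputs relative to a reference input, following the Rescale rule from DeepLIFT. The function names, weights, and inputs are hypothetical; real networks chain this rule across many layers, and dedicated libraries handle many more cases.

```python
from typing import List, Sequence


def relu(z: float) -> float:
    """Rectified linear unit activation."""
    return max(0.0, z)


def rescale_contributions(
    weights: Sequence[float],
    bias: float,
    x: Sequence[float],
    reference: Sequence[float],
) -> List[float]:
    """Attribute a ReLU neuron's output to its inputs, relative to a reference input.

    Sketch of DeepLIFT's Rescale rule for one neuron y = relu(w . x + b):
    input i receives w_i * (x_i - ref_i) * m, where m = delta_y / delta_z.
    The contributions sum to relu(z) - relu(z_ref) ("summation to delta").
    """
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    z_ref = sum(w * ri for w, ri in zip(weights, reference)) + bias
    delta_z = z - z_ref
    delta_y = relu(z) - relu(z_ref)
    if abs(delta_z) < 1e-12:
        # Pre-activation barely changed: fall back to the gradient of relu at z.
        multiplier = 1.0 if z > 0 else 0.0
    else:
        multiplier = delta_y / delta_z
    return [w * (xi - ri) * multiplier for w, xi, ri in zip(weights, x, reference)]


if __name__ == "__main__":
    # Hypothetical neuron and input; the all-zeros reference stands for "no signal".
    contributions = rescale_contributions([0.8, -0.5, 0.3], -0.2, [1.0, 0.4, 2.0], [0.0, 0.0, 0.0])
    for name, c in zip(["feature_a", "feature_b", "feature_c"], contributions):
        print(f"{name}: {c:+.3f}")
    print(f"total: {sum(contributions):.3f}")  # equals the change in the neuron's output
```

Here the neuron's output rises from 0 at the reference to 1.0 for the input, and the three contributions (about +0.667, -0.167 and +0.500) account for that whole change, so the decision can be traced back to each input.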

Explainable AI Techniques

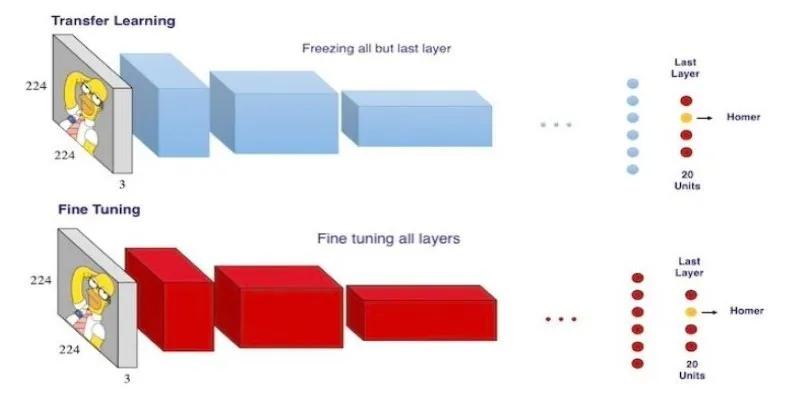

Explainable AI rests on three core techniques: prediction accuracy, traceability, and decision understanding. Prediction accuracy and traceability address technological requirements, while decision understanding addresses human needs:

- Prediction Accuracy: Accuracy determines how effective AI is in day-to-day operations. It can be checked by running simulations and comparing the XAI output with the results in the training dataset. A popular technique here is LIME (Local Interpretable Model-Agnostic Explanations), which explains individual predictions made by an ML model.

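- Traceability: This is achieved by limiting the ways decisions can be made and setting a narrower scope for ML rules and features. A common technique is DeepLIFT (Deep Learning Important Features), which compares the activation of each neuron to a reference activation, establishing a traceable link between each activated neuron and the dependencies among them.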

- Decision Understanding: This builds trust between people and AI by educating the teams that work with AI on how it makes decisions.
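
The core idea behind LIME can be sketched in plain Python: perturb one input, ask the black-box model for predictions on the perturbed copies, weight each copy by how close it is to the original, and fit a weighted linear model whose coefficients serve as the local explanation. This is a simplified illustration under stated assumptions (continuous features, Gaussian perturbations, an exponential distance kernel, no feature selection); it is not the `lime` library’s API, and every function and parameter name here is hypothetical.

```python
import math
import random
from typing import Callable, List, Sequence


def solve_linear_system(a: List[List[float]], b: List[float]) -> List[float]:
    """Solve a @ x = b with Gaussian elimination and partial pivoting."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix [a | b]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = (aug[r][n] - sum(aug[r][c] * x[c] for c in range(r + 1, n))) / aug[r][r]
    return x


def local_surrogate(
    predict: Callable[[Sequence[float]], float],
    instance: Sequence[float],
    num_samples: int = 500,
    noise_scale: float = 0.5,
    kernel_width: float = 0.75,
    seed: int = 0,
) -> List[float]:
    """LIME-style sketch: fit a proximity-weighted linear model around one instance.

    Returns [intercept, coef_1, ..., coef_d]; each coefficient approximates how the
    black-box output changes with that feature in the neighborhood of `instance`.
    """
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(num_samples):
        # Perturb the instance with Gaussian noise and query the black box.
        sample = [v + rng.gauss(0.0, noise_scale) for v in instance]
        dist_sq = sum((s - v) ** 2 for s, v in zip(sample, instance))
        rows.append([1.0] + sample)  # leading 1.0 is the intercept column
        targets.append(predict(sample))
        # Exponential kernel: samples closer to the instance count more in the fit.
        weights.append(math.exp(-dist_sq / kernel_width ** 2))
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    k = len(instance) + 1
    xtwx = [[sum(w * row[i] * row[j] for row, w in zip(rows, weights)) for j in range(k)]
            for i in range(k)]
    xtwy = [sum(w * row[i] * y for row, y, w in zip(rows, targets, weights)) for i in range(k)]
    return solve_linear_system(xtwx, xtwy)


if __name__ == "__main__":
    # Hypothetical black box: nonlinear in x0, linear in x1.
    def black_box(features: Sequence[float]) -> float:
        return features[0] ** 2 + 3.0 * features[1]

    intercept, coef_x0, coef_x1 = local_surrogate(black_box, [1.0, 2.0])
    print(f"local weight of x0: {coef_x0:.2f}, local weight of x1: {coef_x1:.2f}")
```

Near the instance [1.0, 2.0], the x0 coefficient should land close to 2 (the slope of x0 squared at x0 = 1) and the x1 coefficient close to 3, even though the surrogate never looks inside `black_box`.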

Use Cases for Explainable AI

Here are some practical applications of Explainable AI:

- Healthcare: Speeds up and improves medical diagnostics and image analysis, supports resource optimization, and streamlines the pharmaceutical approval process.

- Financial Services: Supports risk assessment in credit and wealth management, brings transparency to loan approvals, and improves the customer experience.

- Criminal Justice: Optimizes processes such as DNA analysis, crime forecasting, and prison population analysis, while helping detect potential bias in training data and algorithms.
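
Detecting bias often starts with something simpler than a model explanation: comparing outcomes across groups. The sketch below assumes hypothetical (group, decision) records such as loan approvals, computes each group’s approval rate, and divides the lowest rate by the highest. The 0.8 cutoff is the so-called four-fifths rule of thumb, used here only as an illustrative flag rather than a legal or statistical test; all names and numbers are made up for the example.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Fraction of positive decisions (e.g., loan approvals) for each group."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        if approved:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates: Dict[str, float]) -> float:
    """Lowest group rate divided by the highest; 1.0 means identical rates."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


if __name__ == "__main__":
    # Hypothetical decisions as (applicant group, approved?) pairs.
    decisions = ([("A", True)] * 60 + [("A", False)] * 40
                 + [("B", True)] * 42 + [("B", False)] * 58)
    rates = selection_rates(decisions)
    ratio = disparate_impact_ratio(rates)
    print(rates, f"ratio={ratio:.2f}")
    if ratio < 0.8:  # illustrative "four-fifths" rule-of-thumb threshold
        print("Approval rates differ enough to warrant a closer look at the model and its data.")
```

With these made-up numbers, group A is approved 60% of the time and group B 42%, a ratio of 0.70, so the check flags the model for a closer look at which features drive the gap.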

Benefits of Explainable AI

Explore the notable benefits of Explainable AI:

- Facilitates the rapid deployment of trustworthy AI models, with better interpretability, transparency, and traceability.

- Enables systematic monitoring and management of AI models for improved evaluation and business outcomes.

- Enhances regulatory compliance and risk management, reducing costly errors through transparent AI models.

How Explainable AI Relates to Responsible AI

Explainable AI and Responsible AI share the goal of making AI better understood, but they differ in when and how they act:

- Explainable AI: Focuses on interpreting results after they have been computed.

- Responsible AI: Emphasizes oversight during the planning stage, promoting responsible algorithm development.
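
Post-computation interpretation can work on a model that is already trained, without looking inside it. One widely used example, swapped in here for illustration since the article does not name a specific method, is permutation feature importance: shuffle one feature’s values and measure how much the model’s accuracy drops. The minimal sketch below assumes a hypothetical rule-based classifier and a synthetic dataset.

```python
import random
from typing import Callable, List, Sequence

Row = Sequence[float]


def accuracy(predict: Callable[[Row], int], rows: List[Row], labels: List[int]) -> float:
    """Fraction of rows the model labels correctly."""
    return sum(predict(r) == y for r, y in zip(rows, labels)) / len(labels)


def permutation_importance(
    predict: Callable[[Row], int],
    rows: List[Row],
    labels: List[int],
    n_repeats: int = 5,
    seed: int = 0,
) -> List[float]:
    """Average drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(predict, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        drops = []
        for _ in range(n_repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)
            # Swap in the shuffled column for feature j; other features stay intact.
            shuffled = [list(r[:j]) + [v] + list(r[j + 1:]) for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(predict, shuffled, labels))
        importances.append(sum(drops) / n_repeats)
    return importances


if __name__ == "__main__":
    # Hypothetical trained model: approves when feature 0 exceeds 0.5, ignores feature 1.
    def model(row: Row) -> int:
        return int(row[0] > 0.5)

    data = [[i / 100, (i * 37 % 100) / 100] for i in range(100)]
    targets = [model(r) for r in data]
    print(permutation_importance(model, data, targets))
```

Because this model never reads feature 1, shuffling it leaves accuracy unchanged and its importance is exactly 0, while shuffling feature 0 causes a large drop.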

Used together, the two approaches complement each other, leading to a deeper understanding of AI and better outcomes.

Conclusion

Explainable AI is pivotal in understanding machine learning, building trust, and managing human-machine collaboration. It has the potential to revolutionize sectors such as healthcare, finance, environment, and defense, paving the way for autonomous systems characterized by accuracy, transparency, and traceability.
