The buzz around large language models isn’t slowing down, and rightly so. These models are becoming smarter, faster, and, when trained correctly, remarkably good at understanding context. One approach that’s gaining traction is Reinforcement Learning from Human Feedback (RLHF). If you’ve been considering how to fine-tune Meta’s LLaMA model using RLHF, you’re in good company. While it might seem complex at first, breaking it down makes the process much more approachable. Let’s explore how to train LLaMA with RLHF using StackLLaMA, without feeling like you’re solving a puzzle with missing pieces.

What Makes StackLLaMA Worth Your Attention?

Before diving into the training steps, it helps to understand what StackLLaMA offers. Designed to streamline the RLHF process, StackLLaMA builds on Hugging Face’s transformers, trl, and peft libraries and combines the components of human-feedback training into one cohesive workflow. You’re not left piecing together random scripts or juggling libraries that weren’t designed to work together.

Here’s what StackLLaMA manages:

- Supervised Fine-Tuning (SFT): Teaches the model initial behavior using a human-annotated dataset.

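- Reward Model Training: Learns from human preference comparisons to score responses, so the model has a signal for what “better” looks like.

- Proximal Policy Optimization (PPO): Fine-tunes the model to earn higher reward-model scores while keeping it close to its SFT starting point.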

The real advantage? StackLLaMA keeps everything connected, eliminating the need to manually glue components together.
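To make “connected” concrete, here’s a minimal sketch that describes the three phases as data and checks that each one only reads checkpoints produced earlier. The `Stage` class and `check_pipeline` helper are hypothetical illustrations, not part of StackLLaMA; the directory names are borrowed from the example commands in this guide.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One training phase: the checkpoints it reads and the directory it writes."""
    name: str
    inputs: list[str]
    output: str


# Hypothetical pipeline description; paths mirror this guide's example commands.
PIPELINE = [
    Stage("sft", inputs=["./llama-7b"], output="./sft-output"),
    Stage("reward", inputs=["./sft-output"], output="./reward-model"),
    Stage("ppo", inputs=["./sft-output", "./reward-model"], output="./ppo-output"),
]


def check_pipeline(stages, base_checkpoints=("./llama-7b",)):
    """Return stage names in order, or raise ValueError if a stage reads a
    checkpoint that neither the base model nor an earlier stage provides."""
    available = set(base_checkpoints)
    for stage in stages:
        missing = [path for path in stage.inputs if path not in available]
        if missing:
            raise ValueError(f"stage {stage.name!r} needs {missing} first")
        available.add(stage.output)
    return [stage.name for stage in stages]


print(check_pipeline(PIPELINE))  # ['sft', 'reward', 'ppo']
```

The dependency shape is the point: PPO needs both the SFT checkpoint (as policy and reference) and the reward model, so it has to run last.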

StackLLaMA: A Hands-On Guide to Training LLaMA with RLHF

Step 1: Set Up Your Environment

You can’t train without the right setup. Make sure your hardware is up to the job: ideally A100s or several other high-memory GPUs for smooth runs. For local development or small-scale experiments, a couple of 24GB VRAM cards might suffice, but be prepared to reduce batch sizes.
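Why are 24GB cards tight? A quick back-of-the-envelope sketch: the weights alone of a 7B-parameter model take about 2 bytes per parameter in fp16. This counts weights only; activations, gradients, and optimizer state (assumed excluded here) all add more on top.

```python
# Rough weight-only memory estimate; ignores activations, gradients,
# optimizer state, and framework overhead, which all add more.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for dtype in ("fp16", "int8", "int4"):
    print(dtype, weight_memory_gb(7e9, dtype))
# fp16 14.0
# int8 7.0
# int4 3.5
```

With roughly 14 GB of fp16 weights on a 24 GB card, full fine-tuning (which also stores gradients and optimizer state) does not fit, which is why parameter-efficient fine-tuning and quantization show up in the dependency list.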

Dependencies You’ll Need:

- PyTorch (built with CUDA support)

- Transformers from Hugging Face

- Accelerate (for distributed training)

- trl (Transformer Reinforcement Learning)

- peft (for parameter-efficient fine-tuning)

- Datasets (for dataset handling)

- bitsandbytes (for 8-bit or 4-bit quantization if memory is limited)

Once installed, clone the StackLLaMA code (it is published as an example project in Hugging Face’s trl repository) and configure the environment using the provided YAML or requirements.txt.
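Before launching anything, a quick sanity check can save a failed run. This is a hypothetical helper, not part of StackLLaMA; it assumes each package’s import name matches its PyPI name (true for the packages listed above) and uses only the standard library, so it reports what is installed without importing the heavy packages.

```python
import importlib.metadata
import importlib.util

# PyPI distribution name -> importable module name (assumed identical here).
REQUIRED = {
    "torch": "torch",
    "transformers": "transformers",
    "accelerate": "accelerate",
    "trl": "trl",
    "peft": "peft",
    "datasets": "datasets",
    "bitsandbytes": "bitsandbytes",
}


def check_environment(required=REQUIRED):
    """Return {distribution: version, "unknown", or None if the module is missing}."""
    report = {}
    for dist_name, module_name in required.items():
        if importlib.util.find_spec(module_name) is None:
            report[dist_name] = None
            continue
        try:
            report[dist_name] = importlib.metadata.version(dist_name)
        except importlib.metadata.PackageNotFoundError:
            report[dist_name] = "unknown"  # importable, but no package metadata
    return report


if __name__ == "__main__":
    for name, version in check_environment().items():
        print(f"{name:>14}: {version or 'MISSING'}")
```

Once torch is present, `torch.cuda.is_available()` confirms that you installed a CUDA-enabled build rather than the CPU-only one.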

Step 2: Run Supervised Fine-Tuning (SFT)

This is where LLaMA gets its first taste of structured instruction-following. Think of SFT as providing the model with a baseline that teaches the basics of proper response formatting.

What You’ll Need:

- A cleaned, instruction-based dataset. Alpaca, OpenAssistant, or your own curated set can work; StackLLaMA itself was trained on question-answer pairs from Stack Exchange.

- A base LLaMA model checkpoint. The 7B variant is typically a good balance of performance and resource requirements.

- The tokenizer from the same checkpoint.

Format your training data into prompt-response pairs. StackLLaMA builds on the Transformers Trainer, so this part will feel familiar if you’ve used Hugging Face’s ecosystem before. Apply one consistent prompt template, tokenize prompts and responses with the same tokenizer, and keep padding and truncation settings identical across the dataset.
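Here’s a minimal sketch of that formatting step, assuming records with `prompt` and `response` fields and a hypothetical “Question/Answer” template loosely modeled on StackLLaMA-style data. Token ids are plain integer lists so the logic can be checked without downloading a model. It also masks prompt tokens out of the loss, a common choice that is an assumption here, not necessarily what StackLLaMA does.

```python
# Hypothetical template; any template works if it is applied consistently.
TEMPLATE = "Question: {prompt}\n\nAnswer: "
IGNORE_INDEX = -100  # label value PyTorch's cross-entropy loss skips by default


def format_example(record: dict) -> tuple[str, str]:
    """Return (prompt_text, response_text) for one {"prompt", "response"} record."""
    return TEMPLATE.format(prompt=record["prompt"].strip()), record["response"].strip()


def build_features(prompt_ids, response_ids, max_length, pad_id):
    """Concatenate token ids, truncate to max_length, then right-pad.

    Prompt and padding positions get IGNORE_INDEX in the labels, so the
    loss is computed only on response tokens (an assumed design choice).
    """
    input_ids = (prompt_ids + response_ids)[:max_length]
    labels = ([IGNORE_INDEX] * len(prompt_ids) + response_ids)[:max_length]
    pad = max_length - len(input_ids)
    return {
        "input_ids": input_ids + [pad_id] * pad,
        "attention_mask": [1] * len(input_ids) + [0] * pad,
        "labels": labels + [IGNORE_INDEX] * pad,
    }


prompt, response = format_example({"prompt": "What is RLHF?", "response": "A fine-tuning method."})
features = build_features([1, 10, 11], [20, 21, 2], max_length=8, pad_id=0)
print(features["labels"])  # [-100, -100, -100, 20, 21, 2, -100, -100]
```

In a real run the ids come from the LLaMA tokenizer. Because that tokenizer defines no padding token by default, a common workaround is to reuse the end-of-sequence token for padding.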

Command-line training might look like this:

```bash
accelerate launch train_sft.py \
  --model_name_or_path ./llama-7b \
  --dataset_path ./data/instructions.json \
  --output_dir ./sft-output \
  --per_device_train_batch_size 2 \
  --gradient_accumulation_steps 8
```
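The two batch flags in that command interact. Assuming standard data-parallel training, where accelerate launches one process per GPU, each optimizer step sees per-device batch × accumulation steps × GPU count examples:

```python
def effective_batch_size(per_device: int, grad_accum: int, num_gpus: int) -> int:
    """Examples contributing to each optimizer step under data-parallel training."""
    return per_device * grad_accum * num_gpus


for gpus in (1, 2, 8):
    print(gpus, effective_batch_size(per_device=2, grad_accum=8, num_gpus=gpus))
# 1 16
# 2 32
# 8 128
```

If you halve the per-device batch to fit memory, doubling `gradient_accumulation_steps` keeps the effective batch the same, at the cost of slower steps.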

By the end of this phase, you’ll have a model that follows instructions reasonably well but hasn’t learned to prioritize better answers over average ones.

Step 3: Train the Reward Model

Here comes the judgment part.

The reward model doesn’t need a separate architecture: it’s another instance of LLaMA fine-tuned to evaluate responses. You’ll feed it pairs of responses to the same prompt, one “preferred” and one “less preferred.” Its job is to assign a higher score to the preferred response.

Dataset Preparation:

- Structure it into prompt + (chosen, rejected) pairs.

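- No explicit label is required: which response sits in the “chosen” slot already encodes the preference. If your raw data uses per-response labels instead (e.g., 1 for preferred, 0 for not), convert it into pairs first.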
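If your raw data arrives as individually labeled responses (1 for preferred, 0 for not), here’s a minimal sketch of turning it into (chosen, rejected) pairs. The row layout and the `to_preference_pairs` name are assumptions for illustration; it pairs every preferred response with every non-preferred one for the same prompt.

```python
from itertools import product


def to_preference_pairs(rows):
    """Group rows by prompt and emit every (chosen, rejected) combination.

    Each row is assumed to look like {"prompt": str, "response": str, "label": 0 or 1}.
    """
    by_prompt = {}
    for row in rows:
        bucket = by_prompt.setdefault(row["prompt"], {0: [], 1: []})
        bucket[row["label"]].append(row["response"])
    pairs = []
    for prompt, bucket in by_prompt.items():
        # Prompts with only preferred (or only rejected) answers yield no pairs.
        for chosen, rejected in product(bucket[1], bucket[0]):
            pairs.append({"prompt": prompt, "chosen": chosen, "rejected": rejected})
    return pairs


rows = [
    {"prompt": "p", "response": "a", "label": 1},
    {"prompt": "p", "response": "b", "label": 0},
    {"prompt": "p", "response": "c", "label": 0},
]
print(len(to_preference_pairs(rows)))  # 2
```

Pairing every combination multiplies the data, but it also over-weights prompts with many answers; capping pairs per prompt is a reasonable variation.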

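Tokenization is crucial here. Concatenate each response with the same prompt before tokenizing, so the only difference between the two inputs is the response itself. The reward head is usually a linear layer on top of LLaMA’s hidden state for the last token, outputting a single scalar score per sequence; training pushes the chosen response’s score above the rejected one’s.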
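The ranking objective behind those scalar scores is usually the pairwise loss -log(sigmoid(r_chosen - r_rejected)), averaged over the batch. Here’s a minimal pure-Python sketch of that loss on already-computed scores; in practice the scores come from the reward model’s forward pass and the loss is computed in PyTorch.

```python
import math


def softplus(x: float) -> float:
    """Numerically stable log(1 + exp(x))."""
    return x + math.log1p(math.exp(-x)) if x > 0 else math.log1p(math.exp(x))


def pairwise_reward_loss(chosen_scores, rejected_scores):
    """Mean of -log(sigmoid(r_chosen - r_rejected)) over a batch of pairs."""
    if len(chosen_scores) != len(rejected_scores) or not chosen_scores:
        raise ValueError("need equally sized, non-empty score lists")
    # -log(sigmoid(m)) equals softplus(-m); it shrinks as the margin m grows.
    losses = [softplus(-(c - r)) for c, r in zip(chosen_scores, rejected_scores)]
    return sum(losses) / len(losses)


print(pairwise_reward_loss([2.0, 0.5], [0.0, 1.0]))
```

In that example, the correctly ranked first pair (margin 2) contributes about 0.13, while the misranked second pair contributes about 0.97, so most of the gradient signal comes from the pair the model gets wrong.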

Training runs similarly to SFT, with a different script:

```bash
accelerate launch train_reward_model.py \
  --model_name_or_path ./sft-output \
  --dataset_path ./data/preference_pairs.json \
  --output_dir ./reward-model \
  --per_device_train_batch_size 1 \
  --gradient_accumulation_steps 4
```

Now your reward model knows what counts as a better answer. Next, you’ll use it to push the SFT model toward better answers.

Step 4: Reinforcement Training with PPO

This is where everything ties together.

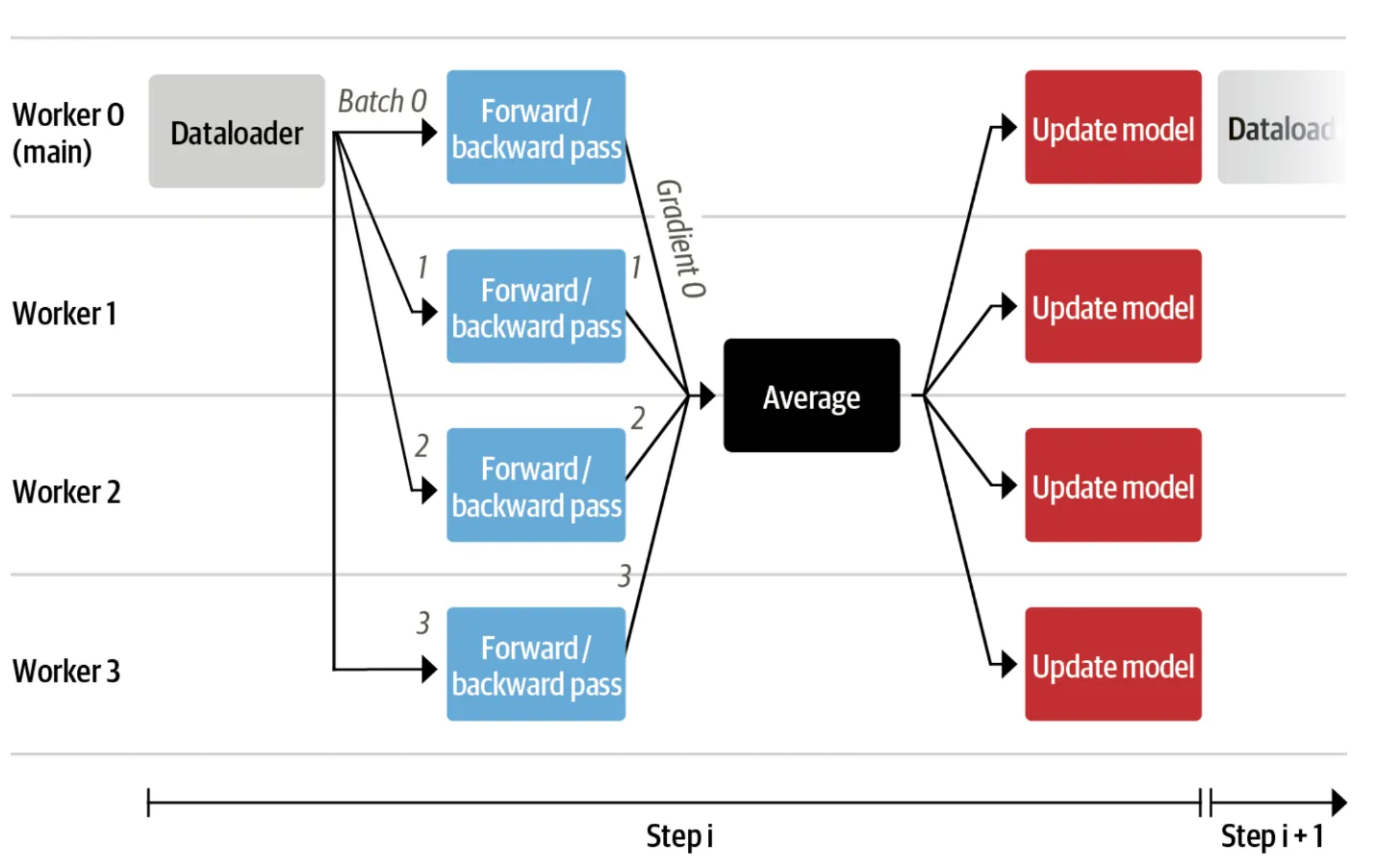

StackLLaMA employs PPO (Proximal Policy Optimization) from Hugging Face’s trl library. The PPO loop involves:

- Sampling responses from the current policy (initialized from your SFT-trained model).

- Scoring them using the reward model.

- Calculating the advantage and updating the model to favor better outcomes.
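For the advantage step, PPO implementations such as trl’s typically use Generalized Advantage Estimation (GAE). Here’s a minimal sketch over one response, treating each generated token as a step. The `gamma=1.0` and `lam=0.95` defaults are assumed values for illustration.

```python
def gae_advantages(rewards, values, gamma=1.0, lam=0.95):
    """Generalized Advantage Estimation for one response (a single episode).

    rewards[t] and values[t] are per-token; the value after the last token is 0.
    Returns (advantages, returns), where returns are the value-function targets.
    """
    if len(rewards) != len(values):
        raise ValueError("rewards and values must have the same length")
    advantages = [0.0] * len(rewards)
    last_gae = 0.0
    for t in reversed(range(len(rewards))):
        next_value = values[t + 1] if t + 1 < len(rewards) else 0.0
        delta = rewards[t] + gamma * next_value - values[t]  # one-step TD error
        last_gae = delta + gamma * lam * last_gae  # discounted sum of TD errors
        advantages[t] = last_gae
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns


adv, ret = gae_advantages([0.0, 0.0, 1.0], [0.0, 0.0, 0.0])
print([round(a, 4) for a in adv])  # [0.9025, 0.95, 1.0]
```

Setting `lam` below 1 trades a little bias for lower variance, because credit for the final score decays as it propagates back toward earlier tokens.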

This stage no longer relies on labeled examples; the reward model supplies the feedback. Responses are scored, and the model is nudged toward those with higher rewards.
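The “nudge” is PPO’s clipped surrogate objective. The probability ratio between the updated policy and the policy that sampled the responses is clipped to [1 - eps, 1 + eps], so a single batch of rewards can’t move the model too far. Here’s a minimal pure-Python sketch on per-token log-probabilities; `clip_eps=0.2` is an assumed value.

```python
import math


def ppo_clipped_loss(new_logprobs, old_logprobs, advantages, clip_eps=0.2):
    """Mean PPO clipped surrogate loss (to minimize) over per-token samples."""
    total = 0.0
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        ratio = math.exp(new_lp - old_lp)  # pi_new(token) / pi_old(token)
        clipped = min(max(ratio, 1.0 - clip_eps), 1.0 + clip_eps)
        # Keep the pessimistic (smaller) objective, then negate it for a loss.
        total += -min(ratio * adv, clipped * adv)
    return total / len(advantages)


# Ratio 2 with a positive advantage: the gain is capped at 1 + eps.
print(ppo_clipped_loss([math.log(2.0)], [0.0], [1.0]))
```

The asymmetry matters: with a positive advantage the benefit is capped at 1 + eps, but with a negative advantage the unclipped (larger) penalty is kept, so the policy is never rewarded for overshooting.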

Key Considerations:

- Use a frozen copy of the reward model to maintain consistent scoring.

- Keep the reference SFT model frozen, so PPO can measure, and penalize through a KL-divergence term, how far the policy drifts from the original behavior.
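Here’s how the frozen reference model typically enters the reward, sketched under assumptions. This shows a common reward-shaping scheme in RLHF PPO setups: each generated token pays a KL penalty estimated from the log-probability gap between policy and reference, and the reward model’s score is added once at the final token. The `kl_coef=0.1` value and the function name are hypothetical.

```python
def kl_shaped_rewards(score, policy_logprobs, ref_logprobs, kl_coef=0.1):
    """Per-token rewards: a KL penalty at every token plus the score at the end.

    policy_logprobs / ref_logprobs are log-probabilities of the sampled tokens
    under the policy being trained and the frozen reference (SFT) model.
    """
    if not policy_logprobs or len(policy_logprobs) != len(ref_logprobs):
        raise ValueError("need equally sized, non-empty log-prob lists")
    # log p_policy - log p_ref per token is a sample-based estimate of the KL.
    rewards = [-kl_coef * (lp - ref_lp) for lp, ref_lp in zip(policy_logprobs, ref_logprobs)]
    rewards[-1] += score  # the reward model scores the full response once
    return rewards


print(kl_shaped_rewards(2.0, [-1.0, -0.5], [-1.5, -0.5]))
```

Tokens the policy has made much more likely than the reference did are penalized, which is what keeps PPO from drifting into outputs that fool the reward model.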

Here’s a simplified launch command:

```bash
accelerate launch train_ppo.py \
  --model_name_or_path ./sft-output \
  --reward_model_path ./reward-model \
  --output_dir ./ppo-output \
  --per_device_train_batch_size 1 \
  --ppo_epochs 4
```

Monitor stability closely. PPO can become unstable with high learning rates, or when the policy drifts far from the reference model (a sign that the KL penalty may be too weak). Small batches, frequent evaluations, and gradient clipping are your allies.
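One common way to keep the KL term in a healthy range is an adaptive KL coefficient, a scheme from early RLHF work on language models. It raises the penalty when the measured KL overshoots a target and lowers it when KL undershoots. Here’s a minimal sketch; the ±0.2 error clip, the target, and the horizon values are assumptions for illustration.

```python
class AdaptiveKLController:
    """Adjust the KL coefficient so the measured KL tracks a target value."""

    def __init__(self, init_kl_coef: float, target: float, horizon: int):
        self.value = init_kl_coef
        self.target = target
        self.horizon = horizon

    def update(self, current_kl: float, n_steps: int) -> float:
        # Proportional error, clipped to +/-20% so one noisy batch can't swing it far.
        error = min(max(current_kl / self.target - 1.0, -0.2), 0.2)
        self.value *= 1.0 + error * n_steps / self.horizon
        return self.value


ctl = AdaptiveKLController(init_kl_coef=0.1, target=6.0, horizon=10000)
print(ctl.update(current_kl=12.0, n_steps=256))  # KL too high -> coefficient rises
```

The horizon controls how quickly the coefficient reacts. A long horizon makes adjustments gentle, which suits noisy per-batch KL estimates.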

Wrapping Up

Training LLaMA with RLHF used to sound like something reserved for big labs with unlimited resources. StackLLaMA changes that. It simplifies the process, connects the dots across SFT, reward modeling, and reinforcement tuning, and allows you to genuinely understand the process rather than endlessly debugging trainer configurations.

Once you’ve worked through SFT, reward training, and PPO, and evaluated the result, you’ll have a model that doesn’t just follow instructions but is tuned toward the responses your reward model prefers. And you did it without reinventing the wheel or patching together half-documented GitHub projects. That’s a solid win.

zfn9
