AI detection tools have grown in popularity alongside the rise of generative language models. These tools claim to identify whether a piece of content was written by a human or generated by artificial intelligence. However, as their use becomes more widespread in education, publishing, and professional settings, serious concerns have emerged about their reliability.

Below are five examples that suggest current AI checkers may be prone to errors, even when dealing with well-known historical texts and modern human-written materials.

Example 1: AI-Generated Text Flagged as Human-Written

One of the key promises of AI detectors is the ability to identify machine-generated content. However, tests conducted with well-known tools like ZeroGPT have revealed inconsistent results. In one instance, a short paragraph generated by an AI chatbot about a modern smartphone was analyzed by the detector. The result: it was flagged as 100% human-written, despite being created entirely by an AI model.

This raises questions about the tools’ accuracy and highlights a critical flaw: AI-written content can appear indistinguishable from human writing, particularly when prompts are crafted carefully.

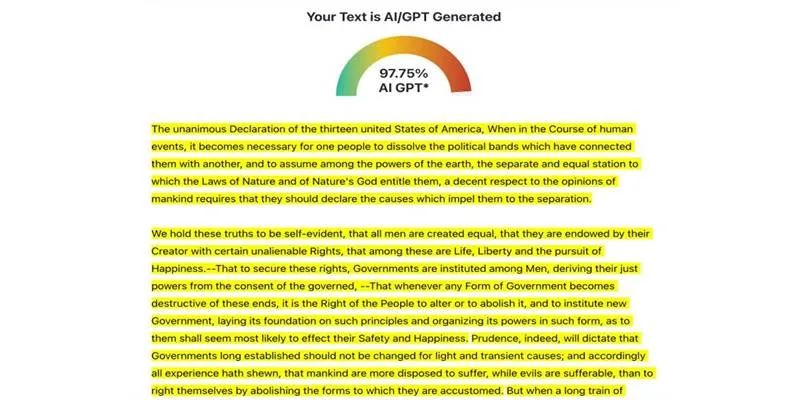

Example 2: The US Declaration of Independence Detected as AI

The Declaration of Independence, a foundational document of the United States written in 1776, was submitted to an AI detection tool for analysis. The tool responded by identifying 97.75% of the content as AI-generated.

Given the historical origin of the document, this classification appears to be incorrect. While the writing style may contain patterns similar to modern machine-generated text, the authorship clearly pre-dates artificial intelligence by centuries. This result calls into question the validity of AI checkers when evaluating stylistically distinct or formal prose.

Example 3: Hamlet by William Shakespeare

William Shakespeare’s Hamlet, believed to have been written around the year 1600, is one of the best-known works in English literature. When a passage from this play was tested using an AI detection tool, the content was flagged as being 100% AI-generated.

This is despite the fact that Hamlet was written centuries before the advent of artificial intelligence. Its complex structure, formal language, and thematic depth are hallmarks of human authorship. However, AI detectors appear to misinterpret these characteristics as signals of machine-generated content.

Such results highlight how literary works with distinctive styles may confuse detection algorithms, especially when those algorithms rely on surface-level analysis rather than understanding the origins or historical context of a text.

Example 4: Classic Literature Misidentified: Moby-Dick

An excerpt from Moby-Dick by Herman Melville—first published in 1851—was passed through an AI checker. The result showed that 88.24% of the content was flagged as AI-generated.

Literary experts widely regard Moby-Dick as a key work in American literature. The novel’s dense prose, descriptive language, and use of metaphor may resemble AI-generated outputs, but again, the authorship is well-documented. This example suggests that complex, stylized writing from human authors may confuse AI detection algorithms, particularly when it happens to resemble the language patterns of contemporary AI output.

Example 5: Apple’s First iPhone Press Release Misclassified

To test modern writing, Apple’s original 2007 press release introducing the iPhone was submitted to a detector. The tool marked 89.77% of the press release as AI-generated.

This release is publicly archived and was produced by professional human writers within Apple’s communications team. Unlike the older texts in the previous examples, this is a modern piece written in corporate language, yet it was still misidentified.

This example points to another flaw: AI detectors may confuse structured, professional writing with machine-generated language, especially when clear formatting and marketing tones are used.

What These Results May Indicate

The inconsistencies highlighted above raise important questions about the effectiveness of current AI detection technology. While these tools aim to solve the growing concern of AI-generated plagiarism and misinformation, they may also create false positives, flagging genuine human work as artificial.

Several key challenges appear to be contributing to this:

- Stylistic Similarity: Human-written content, especially formal or literary text, may resemble the patterns seen in AI-generated writing.

- Training Data Overlap: AI models are often trained on public domain works and online data, including religious texts, classic literature, and press releases. Detectors that rely on pattern-matching may incorrectly associate those texts with AI authorship.

- Statistical Limitations: Most detection tools operate based on probability models. They analyze token distribution and sentence structure rather than actual provenance or authorship.

- Lack of Contextual Awareness: Tools do not consider historical or authorial context, making them prone to error when evaluating famous or well-known works.
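The statistical limitation is easier to see with a toy example. The sketch below is not how ZeroGPT or any real detector works; the function names, the chosen features, and the `std_threshold` value are all assumptions made for illustration. It scores text using surface statistics only (sentence-length variation, vocabulary variety, token entropy), so nothing in it can know when or by whom a text was written.

```python
import math
import re
from collections import Counter


def surface_features(text: str) -> dict:
    """Compute surface statistics only; nothing here knows who wrote the text."""
    sentences = [s for s in re.split(r"[.!?]+\s*", text) if s.strip()]
    tokens = re.findall(r"[a-z']+", text.lower())
    if not sentences or not tokens:
        raise ValueError("text must contain at least one word")

    # Variation in sentence length (sometimes called "burstiness").
    lengths = [len(s.split()) for s in sentences]
    mean_len = sum(lengths) / len(lengths)
    variance = sum((n - mean_len) ** 2 for n in lengths) / len(lengths)

    # Shannon entropy of the token frequency distribution, in bits.
    counts = Counter(tokens)
    total = len(tokens)
    entropy = -sum((c / total) * math.log2(c / total) for c in counts.values())

    return {
        "sentence_length_std": math.sqrt(variance),
        "type_token_ratio": len(counts) / total,
        "token_entropy_bits": entropy,
    }


def toy_ai_score(text: str, std_threshold: float = 4.0) -> float:
    """Hypothetical rule: the more uniform the sentence lengths, the higher the score.

    The threshold is an arbitrary illustrative value, not a calibrated one.
    """
    features = surface_features(text)
    return max(0.0, 1.0 - features["sentence_length_std"] / std_threshold)


if __name__ == "__main__":
    uniform = "One two three four. Five six seven eight. Nine ten eleven twelve."
    varied = "Go. The quick brown fox jumped over the extremely lazy sleeping dog today."
    print(toy_ai_score(uniform), toy_ai_score(varied))
```

Under this toy rule, any evenly paced, formal passage scores high regardless of its date or author, which is the same kind of failure the examples above illustrate.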

Broader Implications of Misclassification

These examples not only highlight technical shortcomings but also raise ethical and practical concerns. Students, journalists, and professionals may find themselves wrongly accused of using AI tools. Classic literature or public speeches could be incorrectly flagged in academic settings. Organizations might lose trust in automation due to false results.

The reliance on detection tools alone—without human review—may result in misjudgments, academic penalties, or rejection of legitimate content.

Moving Toward Better Solutions

While the current generation of AI detection tools appears limited in accuracy, the demand for identifying machine-generated content continues to grow. Moving forward, more nuanced solutions may be needed, including:

- Hybrid review systems that combine AI with expert human analysis

- Metadata-based detection that traces the creation source rather than analyzing the text alone

- Improved model transparency from detection tool providers

- Clear usage policies for AI-generated content in schools, workplaces, and media
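As a rough illustration of the hybrid review idea in the list above, the sketch below routes a detector score to a human reviewer instead of treating it as a verdict. The `triage` function, the `Verdict` type, the action names, and the `low` and `high` cut-offs are hypothetical and would need to be set by whoever runs the review process.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    action: str
    reason: str


def triage(detector_score: float, low: float = 0.2, high: float = 0.8) -> Verdict:
    """Turn a detector score (0.0 to 1.0) into a review action.

    The detector never decides authorship on its own: even the highest
    score only raises the priority of a human review.
    """
    if not 0.0 <= detector_score <= 1.0:
        raise ValueError("detector_score must be between 0.0 and 1.0")
    if detector_score < low:
        return Verdict("no_action", "score below the review threshold")
    if detector_score < high:
        return Verdict("human_review", "ambiguous score; a reviewer should look at context")
    return Verdict("priority_human_review", "high score; still requires human confirmation")


if __name__ == "__main__":
    # 0.9775 mirrors the 97.75% score reported for the Declaration of Independence.
    print(triage(0.9775))
```

With a policy like this, a score such as the 97.75% reported for the Declaration of Independence would prompt a reviewer to check the text's provenance rather than trigger an automatic penalty.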

Until such advancements occur, it is important to approach AI detection results with caution. These tools may assist in identifying suspicious content, but they should not serve as the sole judge of authorship.

Conclusion

AI content detection tools are being used widely in efforts to verify the originality of written work. However, these five examples, ranging from centuries-old texts to modern corporate materials, show how such tools may misclassify content.

Whether dealing with literature, historical documents, corporate press releases, or AI-generated paragraphs, the technology currently appears unable to consistently distinguish between human and machine writing. Until more accurate solutions are developed, results from AI detectors should be interpreted with critical thinking and contextual understanding, rather than being treated as definitive proof.
