Working with large language models isn’t just about architecture anymore — it’s also about where and how you train them. If you’ve ever waited hours for your model to finish a single epoch or checked your cloud bill and wondered whether deep learning is only for those with deep pockets, then this will interest you. Training Hugging Face models on PyTorch/XLA TPUs changes the game for both speed and cost. Here’s how.

What Happens When Hugging Face Meets PyTorch/XLA on TPUs?

TPUs, or Tensor Processing Units, are Google’s answer to the growing demand for accelerated computing. They are programmed through XLA (Accelerated Linear Algebra), a compiler that optimizes whole computation graphs rather than running operations one at a time. PyTorch/XLA acts as the bridge, recording PyTorch operations and compiling them into something TPUs understand.

Now, bring Hugging Face into the mix. These models aren’t lightweight. BERT, T5, GPT-2 — they can balloon into billions of parameters. Traditionally, such a scale meant using pricey GPU clusters and long training times. But combine Hugging Face with PyTorch/XLA on TPUs, and you’ll notice two things: speed picks up, and the bills go down.

The Actual Speedup: What’s Really Faster?

Before the buzzwords blur the facts, let’s look at the actual performance. TPUs aren’t just faster for the sake of being faster. They’re structured differently. Think of them as high-speed conveyor belts instead of forklifts. They work best when the workload is batched and uniform, which, conveniently, is exactly what model training needs.
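One practical consequence of that "uniform" requirement: XLA compiles a graph for each distinct tensor shape it sees, so batches whose sequence length keeps changing can trigger repeated recompilation. A common workaround is to pad every sequence to one fixed length. The sketch below shows the idea in plain Python. The helper names, the token IDs, and the length of 8 are illustrative assumptions, not part of any library.

```python
from typing import List, Tuple


def pad_to_fixed_length(token_ids: List[int], max_length: int, pad_id: int = 0) -> List[int]:
    """Truncate or right-pad one sequence so it is exactly max_length long."""
    clipped = token_ids[:max_length]
    return clipped + [pad_id] * (max_length - len(clipped))


def batch_shape(batch: List[List[int]]) -> Tuple[int, int]:
    """Return (batch_size, sequence_length) for a rectangular batch."""
    return (len(batch), len(batch[0]))


# Made-up token IDs of different lengths (assumption, for illustration only).
raw_batch = [[101, 7592, 102], [101, 2023, 2003, 1037, 3231, 102]]
padded = [pad_to_fixed_length(seq, max_length=8) for seq in raw_batch]
print(batch_shape(padded))
```

With Hugging Face tokenizers, the usual way to get the same effect is to pass `padding="max_length"` together with `truncation=True` and a `max_length` value when tokenizing.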

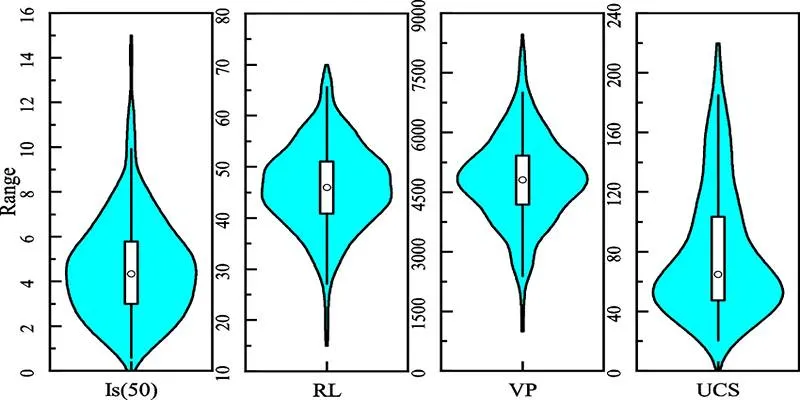

Key Performance Improvements

- Training time drops by as much as 40–60% on comparable datasets.

- Batch sizes can scale up without hitting memory constraints.

- Gradient accumulation becomes smoother due to better parallelism.
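To make the batch-size and gradient-accumulation points concrete, here is a rough sketch of how the effective batch size is usually computed in data-parallel training. The helper name and the numbers are assumptions chosen for illustration.

```python
def effective_batch_size(per_core_batch: int, num_cores: int, accumulation_steps: int = 1) -> int:
    """Number of samples that contribute to a single optimizer update."""
    return per_core_batch * num_cores * accumulation_steps


# Example values (assumptions): 16 samples per core on an 8-core TPU.
without_accumulation = effective_batch_size(16, 8)
with_accumulation = effective_batch_size(16, 8, accumulation_steps=4)
print(without_accumulation, with_accumulation)
```

In other words, spreading a batch over eight cores already multiplies the per-core batch by eight, and accumulation multiplies it again without using more memory per step.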

Take the same BERT-base model and train it on a TPU v3-8 with PyTorch/XLA, and you’ll wrap up in less than half the time it would’ve taken on a single A100 GPU, and without the GPU’s hourly cost.
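It helps to translate "percent less time" into a speedup factor, since the two are easy to mix up. A small sketch of the arithmetic (the function name is made up for this example):

```python
def speedup_from_reduction(reduction: float) -> float:
    """Convert a fractional drop in training time into a speedup factor."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in the range [0, 1)")
    return 1.0 / (1.0 - reduction)


for reduction in (0.40, 0.50, 0.60):
    factor = speedup_from_reduction(reduction)
    print(f"{reduction:.0%} less time -> {factor:.2f}x faster")
```

So a 40–60% drop in training time corresponds to roughly a 1.67x to 2.5x speedup, and "less than half the time" means anything above 2x.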

Step-by-Step: How to Set Up Hugging Face on PyTorch/XLA TPUs

Setting this up is not plug-and-play, but it’s also not something you need a PhD for. Here’s how you get from zero to TPU-powered training, one step at a time.

Step 1: Choose the Right Environment

You’ll want a TPU-enabled VM from Google Cloud Platform (GCP). The most common setup involves either TPU v2 or v3 with a Debian-based environment. When setting up the VM, make sure to select a PyTorch/XLA image, not just a plain PyTorch one.

Alternatively, you can spin up a TPU notebook directly from Google Colab with TPU runtime, though it’s better suited for smaller experiments.

Step 2: Install Hugging Face and PyTorch/XLA

Your environment needs three essentials:

- Hugging Face Transformers

- torch_xla for TPU operations

- Datasets (if you’re pulling from the datasets library)

Install them with:

pip install transformers datasets

pip install torch==1.13.1 torch_xla==1.13 -f https://storage.googleapis.com/libtorchxla-releases/wheels/tpuvm/torch_xla.html

Ensure versions align with TPU compatibility to avoid training crashes.
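One quick way to catch a mismatch early is to compare the major and minor version numbers of the two packages before training starts. The sketch below assumes that matching major.minor is the thing to check, which is how the two release lines have generally been paired. The helper names are hypothetical.

```python
from typing import Tuple


def major_minor(version: str) -> Tuple[int, int]:
    """Return (major, minor) from strings such as '1.13.1', '1.13' or '1.13.1+cu117'."""
    parts = version.split("+")[0].split(".")
    return int(parts[0]), int(parts[1])


def versions_align(torch_version: str, xla_version: str) -> bool:
    """True when both packages share the same major.minor release."""
    return major_minor(torch_version) == major_minor(xla_version)


# The versions from the install command above.
print(versions_align("1.13.1", "1.13"))
```

In a live environment you would pass in `torch.__version__` and `torch_xla.__version__` instead of hard-coded strings.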

Step 3: Set Up the Model and Tokenizer

Pick your Hugging Face model — say bert-base-uncased — and load it as usual. The difference starts when sending the model to the device. Instead of the usual .cuda(), use .to(device), where device is xm.xla_device().

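import torch_xla.core.xla_model as xm

from transformers import BertForSequenceClassification, BertTokenizer

device = xm.xla_device()  # the TPU core assigned to this process

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

model = BertForSequenceClassification.from_pretrained('bert-base-uncased')

model.to(device)  # replaces model.cuda()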

Step 4: Wrap the Training Loop with XLA Tools

The training loop needs PyTorch/XLA utilities to sync across TPU cores and allow efficient data sharding.

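Keep your regular DataLoader, but wrap it in MpDeviceLoader so batches are moved onto the TPU for you. Inside the loop, call xm.optimizer_step(optimizer) in place of the typical optimizer.step(). The snippet assumes train_dataset and optimizer are already defined.

from torch.utils.data import DataLoader

from torch_xla.distributed.parallel_loader import MpDeviceLoader

train_dataloader = DataLoader(train_dataset, batch_size=32, shuffle=True)  # batch size is an example value

train_loader = MpDeviceLoader(train_dataloader, device)  # wraps a DataLoader, not a dataset

for batch in train_loader:

    optimizer.zero_grad()

    outputs = model(**batch)  # batch must include labels for outputs.loss to be set

    loss = outputs.loss

    loss.backward()

    xm.optimizer_step(optimizer)  # replaces optimizer.step()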

This minor restructuring is enough to get the model training on a TPU core without needing to re-architect it.
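The data sharding mentioned at the start of this step matters once more than one core is involved: each process should read a different slice of the dataset. Here is a minimal sketch of the idea in plain Python, dealing sample indices out round-robin. `shard_indices` is a hypothetical helper written for this example, not a PyTorch/XLA function.

```python
from typing import List


def shard_indices(num_samples: int, world_size: int, rank: int) -> List[int]:
    """Indices that process `rank` reads when samples are dealt out round-robin."""
    if not 0 <= rank < world_size:
        raise ValueError("rank must be in the range [0, world_size)")
    return list(range(rank, num_samples, world_size))


# Example values (assumptions): 20 samples split across 8 processes.
shards = [shard_indices(20, 8, rank) for rank in range(8)]
for rank, indices in enumerate(shards):
    print(rank, indices)
```

In real multi-core training this role is typically filled by `torch.utils.data.distributed.DistributedSampler`, given the number of replicas and the current process rank. Unlike this sketch, it also pads the shards so every process sees the same number of samples.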

Step 5: Multi-Core Training (Optional but Powerful)

TPU v3-8 offers 8 cores. If you want real speed, use them all. This means wrapping your training script with xmp.spawn, which runs training in parallel across all cores.

import torch_xla.distributed.xla_multiprocessing as xmp

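def train_fn(index):  # index is the process ordinal passed in by xmp.spawn

    # Include steps 3 and 4 here

    pass

if __name__ == '__main__':

    xmp.spawn(train_fn, nprocs=8)  # one process per TPU core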

Each core gets its own process, training independently while syncing gradients behind the scenes. It feels like magic but runs like clockwork.
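What "syncing gradients" amounts to is easier to see with a toy example. As far as I understand it, `xm.optimizer_step` reduces gradients across the participating cores before applying the update. The sketch below models that as a plain average over made-up gradient values. `all_reduce_mean` is a hypothetical stand-in, not the library call.

```python
from typing import List


def all_reduce_mean(per_core_grads: List[List[float]]) -> List[float]:
    """Average each gradient component across cores."""
    num_cores = len(per_core_grads)
    return [sum(component) / num_cores for component in zip(*per_core_grads)]


# Made-up gradients for a 2-parameter model on 4 cores (assumption).
grads = [[0.5, -1.0], [1.5, 1.0], [1.0, 0.0], [1.0, 2.0]]
synced = all_reduce_mean(grads)
print(synced)  # [1.0, 0.5]
```

After the reduction every core holds the same averaged gradient, so all model copies take an identical optimizer step and stay in lockstep.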

Where the Cost Advantage Comes From

This isn’t just about time. TPUs offer significant pricing efficiency. A TPU v3-8 on GCP costs less per hour than four A100 GPUs, and because of the speed advantage and better scaling, jobs also finish sooner.

So while you might pay $8 per hour for a TPU and $20 for multiple GPUs, the real difference appears when you calculate the total cost per training run. Many find themselves cutting down expenses by 30–50%, especially when training models on large datasets or experimenting with multiple configurations.
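The cost-per-run calculation is simple enough to sketch. The hourly rates below are the ones quoted above. The run durations are placeholders I made up purely to show the arithmetic, so swap in your own measured hours.

```python
def run_cost(hourly_rate: float, hours: float) -> float:
    """Total cost of one training run."""
    return hourly_rate * hours


def savings_fraction(baseline_cost: float, alternative_cost: float) -> float:
    """Fraction saved by the alternative relative to the baseline."""
    return 1.0 - alternative_cost / baseline_cost


TPU_RATE = 8.0    # dollars per hour, from the example above
GPU_RATE = 20.0   # dollars per hour, from the example above
TPU_HOURS = 12.0  # placeholder duration (assumption)
GPU_HOURS = 8.0   # placeholder duration (assumption)

tpu_cost = run_cost(TPU_RATE, TPU_HOURS)
gpu_cost = run_cost(GPU_RATE, GPU_HOURS)
print(f"TPU run: ${tpu_cost:.2f}, GPU run: ${gpu_cost:.2f}")
print(f"Savings: {savings_fraction(gpu_cost, tpu_cost):.0%}")
```

With these placeholder durations the TPU run comes to $96 against $160, a 40% saving. At these rates the TPU stays cheaper as long as its run takes less than 2.5 times as long as the GPU run.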

Also worth noting — many TPU trials or community notebooks are either free or low-cost, making them ideal for prototyping before committing to larger projects.

Wrapping Up

Putting Hugging Face models on PyTorch/XLA with TPUs isn’t just about speed or cost — it’s about efficiency. You get the kind of performance that used to require expensive clusters, all while writing nearly the same code as before. With just a few adjustments to your training script and the right setup, you’re working smarter, not harder. And in machine learning, that’s a rare win.

So next time you’re staring at a progress bar that hasn’t moved in hours, remember — TPUs might be what gets it done faster and cheaper. Hope you find this article worth reading. Stay tuned for more interesting yet helpful guides.

zfn9
