Nexla’s integration with Nvidia NIM is a significant step forward for enterprise AI development. The combination gives companies more scalable AI data pipelines, faster model iteration, and a shorter path from idea to implementation. Nexla’s no-code approach complements Nvidia’s accelerated hardware and software, simplifying data flow and shortening time to market for AI applications.

As more companies adopt AI, they need platforms that are fast, consistent, and scalable. Nexla streamlines data management and makes AI workflows more efficient across industries. Together, Nexla and Nvidia remove many of the obstacles between raw data and production AI. Their focus on automation and intelligent infrastructure makes them a strong fit for modern enterprises.

Nexla’s Role in Modern AI Workflows

Nexla provides a no-code platform for automatically building and managing data pipelines. It simplifies access to both structured and unstructured data from many sources. Because it connects to APIs, cloud platforms, databases, and more, it is well suited to enterprise-scale AI applications. It detects data schemas automatically and adapts when they change, without human intervention, which reduces the need for manual fixes and debugging.
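
How Nexla detects schema changes internally is not covered here, so the following is only a general illustration of schema-drift detection, not Nexla’s implementation. It infers a simple schema (field name mapped to Python type name) from each record and reports fields that were added, removed, or retyped relative to the previous record’s schema. The functions `infer_schema`, `diff_schema`, and `track_schema` are hypothetical names chosen for this sketch.

```python
from __future__ import annotations

from typing import Any, Iterable, Iterator, Optional


def infer_schema(record: dict[str, Any]) -> dict[str, str]:
    """Map each field name to the name of its value's Python type."""
    return {key: type(value).__name__ for key, value in record.items()}


def diff_schema(old: dict[str, str], new: dict[str, str]) -> dict[str, Any]:
    """Report fields added, removed, or retyped between two schemas."""
    added = sorted(set(new) - set(old))
    removed = sorted(set(old) - set(new))
    retyped = {
        name: (old[name], new[name])
        for name in sorted(set(old) & set(new))
        if old[name] != new[name]
    }
    return {"added": added, "removed": removed, "retyped": retyped}


def track_schema(
    records: Iterable[dict[str, Any]],
) -> Iterator[tuple[dict[str, Any], Optional[dict[str, Any]]]]:
    """Yield (record, changes); changes is None for the first record."""
    known: Optional[dict[str, str]] = None
    for record in records:
        current = infer_schema(record)
        changes = None if known is None else diff_schema(known, current)
        known = current  # adopt the latest schema instead of halting the pipeline
        yield record, changes
```

For example, if `qty` arrives as `3` in one record and as `"4"` in the next, `diff_schema` reports it under `retyped` as `('int', 'str')`, which a pipeline could then cast or flag. A production system would also need to tell optional fields apart from genuinely removed ones, which this sketch does not attempt.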

Real-time transformation and data validation help maintain the quality and integrity of every pipeline. Nexla’s built-in governance enforces compliance guidelines and security policies so that data remains trustworthy. Faster data availability, cleaner inputs, and reusable logic blocks simplify development for AI teams. The platform also improves collaboration among analysts, data engineers, and machine learning engineers, freeing them to focus on model design and deployment, which raises operational efficiency and speeds innovation.
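
Rule-based validation of the kind described above can be sketched in a few lines. This is a generic illustration, not Nexla’s API: each rule is a plain function that returns an error message or `None`, and records that fail any rule are quarantined together with their errors instead of reaching the model. The rule constructors `required` and `non_negative`, the `validate` function, and the `ValidationResult` type are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional, Tuple

# A rule inspects one record and returns an error message, or None if it passes.
Rule = Callable[[Dict[str, Any]], Optional[str]]


def required(name: str) -> Rule:
    """Rule: the field must be present and not None."""
    def check(record: Dict[str, Any]) -> Optional[str]:
        return None if record.get(name) is not None else f"missing field '{name}'"
    return check


def non_negative(name: str) -> Rule:
    """Rule: if the field is present, it must be a number >= 0."""
    def check(record: Dict[str, Any]) -> Optional[str]:
        value = record.get(name)
        if value is None:
            return None
        if not isinstance(value, (int, float)) or value < 0:
            return f"'{name}' must be a non-negative number"
        return None
    return check


@dataclass
class ValidationResult:
    valid: List[Dict[str, Any]] = field(default_factory=list)
    rejected: List[Tuple[Dict[str, Any], List[str]]] = field(default_factory=list)


def validate(records, rules: List[Rule]) -> ValidationResult:
    """Run every rule on every record; quarantine records with any error."""
    result = ValidationResult()
    for record in records:
        errors = [msg for rule in rules if (msg := rule(record)) is not None]
        if errors:
            result.rejected.append((record, errors))
        else:
            result.valid.append(record)
    return result
```

Keeping rejected records alongside their error messages, rather than silently dropping them, makes the quarantine auditable and gives data engineers a concrete list of what to fix upstream.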

Nvidia NIM’s Infrastructure Capabilities

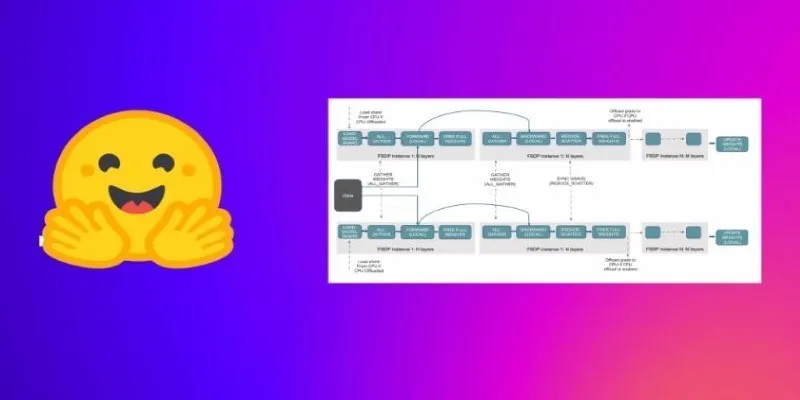

Nvidia NIM provides infrastructure for deploying AI models at scale. It packages models as optimized, ready-to-run containers that can be deployed on-premises or in the cloud. These containers use Nvidia GPUs for hardware acceleration, which speeds up processing. NIM helps developers manage performance, reliability, and deployment logistics. Its containers support major frameworks, including TensorFlow, PyTorch, and ONNX, and their prebuilt configurations and scalable execution greatly reduce setup time.

Nvidia NIM includes APIs and SDKs for integration into enterprise environments. These tools make it easy to automate testing, deployment, and updates across AI systems, so models run efficiently without infrastructure becoming a bottleneck. For companies handling heavy AI workloads, NIM delivers consistent performance and reliability, and its containerized models can run across hybrid and multi-cloud environments, speeding innovation and supporting modern AI needs.
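
To make the API integration concrete, here is a minimal client sketch using only the Python standard library. It assumes an LLM NIM container running locally and exposing an OpenAI-compatible chat-completions endpoint at `http://localhost:8000/v1`; the host, port, and the model ID `example/model-name` are placeholders to replace with your own deployment’s values. The request builder is kept separate from the network call so the payload can be inspected or tested without a running container.

```python
import json
import urllib.request

# Assumption: an LLM NIM container serves an OpenAI-compatible API here.
BASE_URL = "http://localhost:8000/v1"
MODEL = "example/model-name"  # hypothetical placeholder, not a real model ID


def build_chat_request(
    prompt: str, model: str = MODEL, max_tokens: int = 128
) -> urllib.request.Request:
    """Build (but do not send) a chat-completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, timeout: float = 30.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a container running, a call such as `chat("Summarize yesterday's sales anomalies.")` would return the model’s reply as a string; without one, `urlopen` raises a `URLError`.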

Benefits of Integrating Nexla with Nvidia NIM

Combining Nexla’s data management with Nvidia’s model deployment through NIM creates a seamless AI development environment. Nexla ensures data is clean, well structured, and fully validated before it reaches the model. Nvidia NIM handles GPU acceleration, container management, and model inference without manual intervention. High-quality data shortens development time and improves model accuracy, and Nexla’s flexible pipelines support both batch and real-time processing.
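
One common way to let the same code serve both batch and real-time inputs is micro-batching: group whatever arrives, whether a finite file or an unbounded stream, into small batches before inference. The sketch below is a generic illustration, not Nexla or NIM code; `validate` and `infer` are hypothetical callables standing in for a Nexla-side quality check and a call to a NIM-served model.

```python
from itertools import islice
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def micro_batches(records: Iterable[T], size: int) -> Iterator[List[T]]:
    """Group any iterable, finite or unbounded, into lists of at most `size`."""
    if size < 1:
        raise ValueError("size must be >= 1")
    it = iter(records)
    # islice pulls lazily, so an infinite stream is consumed one batch at a time.
    while batch := list(islice(it, size)):
        yield batch


def run_pipeline(
    records: Iterable[T],
    validate: Callable[[T], bool],
    infer: Callable[[List[T]], List[R]],
    size: int = 32,
) -> Iterator[R]:
    """Drop records that fail validation, then send the rest to the model in batches."""
    clean = (r for r in records if validate(r))
    for batch in micro_batches(clean, size):
        yield from infer(batch)
```

A real streaming deployment would also flush a partial batch after a timeout so that a slow stream does not wait indefinitely for a full batch; that is omitted here for brevity.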

Nvidia’s containerized architecture runs AI models consistently and at scale, so teams can iterate faster and with more confidence. Data scientists no longer need to worry about low-level integration issues, and engineers gain visibility into pipeline health, model performance, and data accuracy. Together, Nexla and NIM support continuous delivery, letting teams ship changes regularly and build more responsive, intelligent applications. This collaboration opens new possibilities for large-scale AI innovation in the enterprise.

Use Cases Across Industries

Retail firms can use Nexla and Nvidia NIM to apply real-time consumer data to demand forecasting: Nexla prepares point-of-sale and inventory data for AI, while NIM-served models optimize inventory and pricing strategies. In healthcare, the integration supports scalable, secure processing of patient records and diagnostic images. NIM serves medical AI models efficiently, while Nexla ensures sensitive data is handled properly.

In finance, streaming transaction data fed into AI models helps detect fraud. In manufacturing, Nexla handles sensor data from factory floors and forwards it to NIM models that predict equipment breakdowns. Logistics companies analyze route data and shipment trends to plan smarter deliveries. Across all of these sectors, the results are faster insights, greater efficiency, and lower operational costs. Nexla and Nvidia NIM help companies act confidently on data-driven insights, with real-time control across the AI lifecycle.

Streamlining AI Development Cycles

Building AI models requires accurate data, modern infrastructure, and continuous improvement, and Nexla and Nvidia NIM address all three. Nexla streamlines data collection, transformation, and governance end to end, while NIM’s runtime environment lets teams test, deploy, and monitor models. Teams spend less time troubleshooting deployment issues or repairing broken data pipelines. Nexla adapts quickly to schema changes and NIM maintains high availability and performance, enabling faster experimentation and shorter feedback loops.

Machine learning teams can iterate on models rapidly with less overhead, and both systems simplify continuous integration and delivery. Nexla reduces data latency by feeding clean data directly to NIM containers, giving developers reliable, scalable environments without extensive DevOps support. The entire cycle, from data intake to model serving, becomes shorter, so teams can focus on improving results and delivering business value.
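
A continuous-delivery pipeline typically gates promotion of a new model on two checks: the serving container is up, and the model still gives acceptable answers on a small golden set. The sketch below assumes the container reports readiness at `/v1/health/ready` on port 8000; treat that URL as an assumption and confirm it against your container’s documentation. `wait_until_ready` and `passes_gate` are hypothetical helpers, and exact-match accuracy is just one simple choice of gate metric.

```python
import time
import urllib.request
from typing import Callable, Sequence, Tuple

# Assumption: the serving container exposes a readiness check at this URL.
READY_URL = "http://localhost:8000/v1/health/ready"


def wait_until_ready(url: str = READY_URL, attempts: int = 30, delay_s: float = 2.0) -> bool:
    """Poll a readiness URL until it returns HTTP 200 or attempts run out."""
    for _ in range(attempts):
        try:
            with urllib.request.urlopen(url, timeout=5) as resp:
                if resp.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError, and socket timeouts all subclass OSError:
            # the container is not ready yet, so retry after a pause.
            pass
        time.sleep(delay_s)
    return False


def passes_gate(
    predict: Callable[[str], str],
    golden: Sequence[Tuple[str, str]],
    min_accuracy: float = 0.9,
) -> bool:
    """Return True if exact-match accuracy on the golden set meets the threshold."""
    if not golden:
        raise ValueError("golden set must not be empty")
    correct = sum(predict(x) == expected for x, expected in golden)
    return correct / len(golden) >= min_accuracy
```

In a CI job, promotion would proceed only if `wait_until_ready()` returns `True` and `passes_gate(...)` accepts the candidate model; otherwise the job fails and the previous model keeps serving.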

Conclusion

Nexla’s partnership with Nvidia NIM marks a pivotal moment for enterprise AI development. Creating and maintaining scalable AI data pipelines has become easier. Teams benefit from faster deployment, cleaner data, and the real-time performance that the Nvidia NIM integration provides. Businesses can now automate AI model deployment and reduce manual work. Together, the two platforms help AI initiatives deliver results quickly and efficiently, freeing companies to innovate with confidence. As AI adoption grows, Nexla and Nvidia NIM are well positioned to be key enablers of intelligent, future-ready systems.
