Artificial Intelligence (AI) is revolutionizing industries, from healthcare to finance, by providing powerful predictive models. However, as these models grow in complexity, they often become “black boxes,” making it challenging for engineers to explain their decisions. This is where Explainable AI (XAI) becomes essential, offering transparency and accountability in machine learning (ML).

The Importance of Explainability in AI

Many models, particularly deep neural networks and ensemble methods, turn inputs into predictions without revealing the reasoning behind them. High accuracy is valuable, but it is not sufficient where incorrect or biased decisions carry significant consequences, as in healthcare, credit scoring, or hiring.

Explainability is crucial for engineers, as it helps identify when a model uses irrelevant or biased features, such as geographic location instead of medical indicators in health predictions. This insight can improve model design and prevent unintended harm.

Furthermore, trust is a key factor. Stakeholders and end-users are more likely to accept AI-driven recommendations when they understand the model’s reasoning. With growing regulatory pressure, engineers need to ensure automated decisions affecting individuals are explainable.

Techniques and Approaches in XAI

To make AI models explainable, engineers can choose from several techniques, categorized into interpretable models and post-hoc explanations.

Interpretable Models

These models, such as linear regression, decision trees, and rule-based systems, are inherently transparent, allowing engineers to see how each feature contributes to an outcome. However, they may underperform on complex tasks.

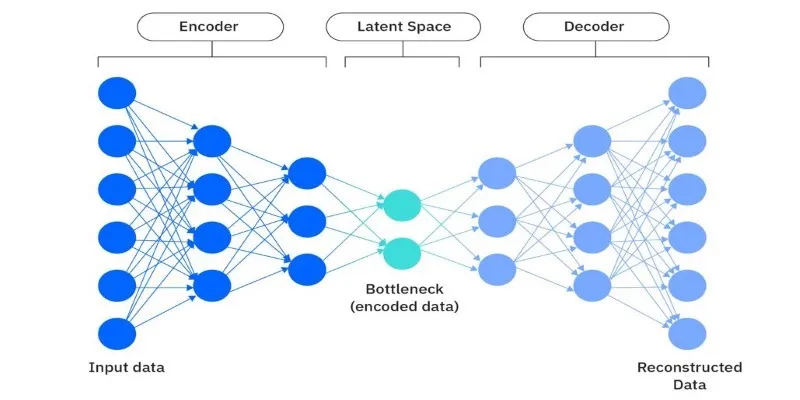
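
To make the idea of an inherently transparent model concrete, here is a minimal sketch of a linear scoring model in which every feature's contribution can be read straight from its weight. The feature names, weights, and bias are hypothetical values chosen for illustration, not learned from real data.

```python
# Hypothetical linear risk model. The weights and bias are illustrative
# assumptions, not values fitted to any real dataset.
WEIGHTS = {"age": 0.03, "blood_pressure": 0.02, "smoker": 0.8}
BIAS = -4.0


def predict(features):
    """Linear score: the bias plus the sum of weight * value per feature."""
    return BIAS + sum(WEIGHTS[name] * value for name, value in features.items())


def contributions(features):
    """Per-feature contribution to the score, read directly from the weights."""
    return {name: WEIGHTS[name] * value for name, value in features.items()}


patient = {"age": 50, "blood_pressure": 120, "smoker": 1}
score = predict(patient)
parts = contributions(patient)

for name, value in parts.items():
    print(f"{name}: {value:+.2f}")
print(f"bias: {BIAS:+.2f}, score: {score:.2f}")
```

Because the score is just the bias plus the listed contributions, the explanation is exact rather than an approximation, which is the main appeal of this model family.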

Post-hoc Explanations

For high-performing models like neural networks, post-hoc methods explain decisions after training. Two popular techniques are SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-agnostic Explanations). SHAP attributes a prediction to its input features using Shapley values from cooperative game theory, while LIME approximates the model around a single prediction with a simpler surrogate. Both indicate which inputs most influence a prediction.
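
The game-theoretic idea behind Shapley-value attribution can be sketched in a few lines. The example below computes exact Shapley values by enumerating every coalition of features for a toy three-feature model. It is not the SHAP library's implementation. The toy `model`, the `instance`, and the all-zeros `baseline` used to represent "absent" features are assumptions made for illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(value_fn, n_features):
    """Exact Shapley values for a coalition value function over n_features players."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Weight of a coalition of this size in the Shapley formula.
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                s = frozenset(subset)
                # Marginal contribution of feature i when added to coalition s.
                phi[i] += weight * (value_fn(s | {i}) - value_fn(s))
    return phi


def model(x):
    """Toy black box (assumed for illustration) with an interaction term."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]


instance = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]  # assumed reference point for "missing" features


def value_fn(coalition):
    """Model output when only features in the coalition take the instance's values."""
    x = [instance[j] if j in coalition else baseline[j] for j in range(len(instance))]
    return model(x)


phi = shapley_values(value_fn, len(instance))
print(phi)  # approximately [3.5, 2.0, 1.5]
```

The attributions sum to the difference between the prediction for the instance and the prediction for the baseline (7.0 here), and the interaction term is split evenly between the two features involved. Enumeration costs grow exponentially with the number of features, which is why practical tools rely on approximations.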

In image or text classification, visual techniques like heatmaps and saliency maps highlight influential parts of an image, while attention weight visualizations reveal impactful words in natural language processing.
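
One simple way to produce such a heatmap is occlusion sensitivity: mask one region at a time and record how much the model's score drops. The sketch below applies this to a tiny 4x4 "image". The `score` function is a hypothetical stand-in for a real classifier, and occlusion is only one of several ways to build a saliency map (gradient-based methods are another).

```python
def score(image):
    """Hypothetical classifier score that responds only to the 2x2 centre patch."""
    return sum(image[r][c] for r in (1, 2) for c in (1, 2))


def occlusion_saliency(image, score_fn, fill=0.0):
    """Heatmap of score drops when each pixel is replaced by a fill value."""
    base = score_fn(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(rw) for rw in image]  # copy so the input is untouched
            occluded[r][c] = fill
            heat_row.append(base - score_fn(occluded))
        heatmap.append(heat_row)
    return heatmap


image = [[1.0] * 4 for _ in range(4)]
heatmap = occlusion_saliency(image, score)

for heat_row in heatmap:
    print(heat_row)
```

Only the four centre pixels receive a non-zero value, matching the region the toy scorer actually uses. On real images, patches rather than single pixels are usually occluded to keep the number of model calls manageable.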

Emerging Techniques

Causal explanation methods are gaining traction, exploring cause-and-effect relationships for actionable insights. These approaches help engineers evaluate whether a model’s decisions remain valid if inputs change significantly.
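
A closely related and much simpler idea is the counterfactual probe: search for the smallest input change that flips a decision. The sketch below does this for a hypothetical credit rule. The `approve` function and its thresholds are invented for illustration. A probe like this describes the model's behaviour only and does not by itself establish real-world cause and effect.

```python
def approve(income, debt):
    """Hypothetical credit rule (assumed for illustration)."""
    return income - 2 * debt >= 30


def minimal_income_increase(income, debt, step=1, max_steps=100):
    """Smallest income increase, in multiples of step, that yields approval."""
    for k in range(max_steps + 1):
        if approve(income + k * step, debt):
            return k * step
    return None  # no approval found within the search range


delta = minimal_income_increase(income=40, debt=10)
print(delta)  # 10
```

The result gives an actionable statement for the applicant ("approval would require an income 10 units higher"), which is the kind of insight counterfactual-style explanations aim for.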

Challenges and Considerations for Engineers

Implementing XAI comes with challenges. Engineers must balance explainability against accuracy: simpler models may not perform well on complex data, while opaque models often offer greater predictive power.

Audience expectations also vary. Technical teams may require detailed feature interactions, whereas end-users need simple, actionable explanations. Crafting clear yet accurate explanations can be complex.

Engineers should be cautious of overconfidence in explanations, as many post-hoc techniques provide approximations rather than exact reasoning. Recognizing these limitations helps avoid misinterpretation.
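
The approximate nature of a local explanation can be checked directly. The sketch below fits a LIME-style weighted linear surrogate around one point of a toy non-linear model, then compares the surrogate with the model near that point and far from it. It is not the LIME library. The `black_box` function, the deterministic grid of perturbations, and the kernel width are all assumptions chosen for illustration.

```python
from math import exp


def black_box(x):
    """Toy non-linear model standing in for an opaque predictor."""
    return x * x


def fit_local_surrogate(f, x0, width=0.5, n=21, kernel_width=0.25):
    """Weighted least-squares line fitted to f on a grid of points around x0."""
    offsets = [width * (2 * i / (n - 1) - 1) for i in range(n)]
    xs = [x0 + d for d in offsets]
    ys = [f(x) for x in xs]
    # Points closer to x0 get more weight (Gaussian proximity kernel).
    ws = [exp(-(d / kernel_width) ** 2) for d in offsets]
    total = sum(ws)
    x_mean = sum(w * x for w, x in zip(ws, xs)) / total
    y_mean = sum(w * y for w, y in zip(ws, ys)) / total
    cov = sum(w * (x - x_mean) * (y - y_mean) for w, x, y in zip(ws, xs, ys))
    var = sum(w * (x - x_mean) ** 2 for w, x in zip(ws, xs))
    slope = cov / var
    intercept = y_mean - slope * x_mean
    return slope, intercept


slope, intercept = fit_local_surrogate(black_box, x0=1.0)


def surrogate(x):
    return slope * x + intercept


near_error = abs(surrogate(1.1) - black_box(1.1))
far_error = abs(surrogate(3.0) - black_box(3.0))
print(f"slope={slope:.3f} near_error={near_error:.3f} far_error={far_error:.3f}")
```

The surrogate tracks the model closely around the explained point but diverges sharply further away, so its coefficients should be read as a description of local behaviour only.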

Scalability is another challenge. Explaining a few predictions is feasible, but real-time explanations across millions of decisions require efficient methods. Engineers must ensure chosen XAI approaches integrate well with production systems.
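
One common way to trade exactness for speed is sampling. The sketch below estimates Shapley-style attributions by averaging marginal contributions over random feature orderings instead of enumerating every coalition. The toy `model`, the `instance`, the zero `baseline`, and the sample count are assumptions for illustration. Production libraries use more refined estimators.

```python
import random


def sampled_shapley(model, instance, baseline, n_samples=2000, seed=0):
    """Monte Carlo Shapley estimate from random feature orderings."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    n = len(instance)
    totals = [0.0] * n
    for _ in range(n_samples):
        order = list(range(n))
        rng.shuffle(order)
        x = list(baseline)
        previous = model(x)
        for j in order:
            x[j] = instance[j]  # switch feature j from baseline to instance value
            current = model(x)
            totals[j] += current - previous
            previous = current
    return [t / n_samples for t in totals]


def model(x):
    """Toy black box (assumed for illustration) with an interaction term."""
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]


instance = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]

estimates = sampled_shapley(model, instance, baseline)
print([round(e, 2) for e in estimates])
```

Each sample costs one model call per feature plus one for the baseline, so the total cost grows linearly with the number of features and samples rather than exponentially. The price is sampling noise, which shrinks as `n_samples` increases.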

Finally, as XAI evolves, engineers must stay informed about new techniques and benchmarks, adjusting their approach as tools develop.

The Future of XAI for Engineers

XAI is transforming how AI and ML engineers develop models. As models become more powerful and integrated into decision-making, the demand for transparency will increase. Engineers proficient in XAI techniques are better equipped to create models that are not only accurate but also trustworthy and fair.

Future advancements in XAI are expected to improve explanation accuracy, scalability, and context awareness. Hybrid approaches combining the strengths of complex and interpretable models are gaining popularity. Advances in causal inference, human-in-the-loop systems, and standardized interpretability metrics will provide engineers with more tools.

Conclusion

Proficiency in explainable AI is an essential skill for AI and ML engineers. As machine learning influences more decisions, the ability to explain predictions accurately is crucial. XAI fosters trust, improves reliability, and helps meet growing legal and ethical demands for transparency. It also helps engineers refine models by exposing hidden biases or flaws. By integrating explainability into their workflow, engineers can build AI systems that earn confidence and serve people better.
