Bringing generative AI projects out of the lab and into real-world use often feels slower and more frustrating than expected. Many teams build impressive proofs of concept, yet stumble when it comes to deploying those ideas at scale in production. One way to streamline this path is to apply first principles thinking: breaking down assumptions to understand the true nature of the problem and building solutions from the ground up. By approaching the production challenge this way, teams can identify the right trade-offs, remove unnecessary complexity, and focus on what actually delivers value.

Why First Principles Matter in Generative AI Deployment

Generative AI (GenAI) prototypes often start with enthusiasm, creativity, and experimentation. This early phase is usually about showing what’s possible, demonstrating new capabilities, and proving that the idea has merit. The challenge comes later: production environments impose limits and requirements that prototypes rarely meet. Factors such as data privacy, latency, cost, monitoring, and integration with other systems suddenly matter a great deal.

Many teams try to bridge the gap by relying on what has worked elsewhere or applying standard software engineering practices. While these practices are useful, they can also obscure the fact that GenAI systems behave differently from traditional software. They generate probabilistic outputs, depend heavily on data quality, and incur significant computational expense. First principles thinking helps cut through inherited assumptions by asking why each component exists, what its purpose really is, and whether there is a simpler, better way to achieve the desired result.

Breaking Down the Problem with First Principles

The first step is to understand what your prototype actually proves. Often, a prototype is designed to demonstrate feasibility or explore potential user experiences, but it is not built to meet the demands of reliability, speed, or security. Instead of trying to turn the prototype itself into production code, consider it a concept to analyze. What exactly does it do that users value? Which parts can be simplified, swapped, or rebuilt to serve that same purpose?

Teams can break the system into its fundamental elements: the model, the data pipeline, the interface, and the infrastructure. For each element, ask what it really needs to do and why. Does the model have to generate responses in less than 200 milliseconds? Can a smaller fine-tuned model meet user needs at lower cost and latency? Does every input require passing through the same preprocessing steps, or are some of them redundant? Does the system even need real-time generation, or would batch processing suffice?

This kind of questioning can reveal where prototypes carry unnecessary baggage. Many proof-of-concept projects use large, general-purpose models because they are easy to access, but in production those models often prove too expensive and too slow. A smaller model trained on your specific domain might perform just as well for the task at hand. Similarly, a prototype might store every prompt and response in an unstructured log, whereas a production system needs structured logging and careful privacy controls. Seeing each component clearly lets you make decisions that fit your specific case rather than copying what others have done.
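To make the logging point concrete, here is a minimal Python sketch that writes one structured JSON record per generation and stores a SHA-256 digest of the prompt instead of its raw text. The function name `log_generation` and the field names are illustrative assumptions, not a standard schema. Hashing alone may not satisfy privacy requirements, since short or guessable prompts can be recovered by hashing candidate inputs, so treat this as a starting point alongside your own retention and redaction policies.

```python
import hashlib
import json
import logging
import time

logger = logging.getLogger("genai.requests")


def log_generation(model_name: str, prompt: str, response: str, latency_ms: float) -> dict:
    """Emit one structured log record per generation without storing raw text.

    Hypothetical helper: field names are illustrative, not a standard schema.
    The prompt is replaced by a SHA-256 digest so identical prompts can still
    be correlated, while the raw content never reaches the log sink.
    """
    record = {
        "ts": time.time(),
        "model": model_name,
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
        "response_chars": len(response),
        "latency_ms": round(latency_ms, 1),
    }
    # One JSON object per line is easy for most log pipelines to parse.
    logger.info(json.dumps(record, sort_keys=True))
    return record


if __name__ == "__main__":
    logging.basicConfig(level=logging.INFO, format="%(message)s")
    log_generation("small-domain-model", "What is our refund policy?", "Refunds are ...", 142.0)
```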

Addressing Production Challenges with Simplicity

One of the biggest hurdles in moving GenAI systems to production is dealing with operational constraints: cost, monitoring, reliability, and compliance. Prototypes often assume unlimited resources and ignore failures. In production, you need to guarantee a certain level of uptime, handle malformed inputs gracefully, and operate within a fixed budget.

First principles thinking helps you ask the right questions here. Do you really need a separate instance of the model running for every request, or can you batch requests to save compute? Is it necessary to store every output indefinitely, or can you retain only key metadata to respect privacy laws? Do you need to build a custom monitoring stack, or can you adapt existing observability tools to track your model’s performance and detect drift?
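As a rough illustration of batching, the sketch below groups incoming prompts and issues one model call per group rather than one per request. `generate_batch` is a hypothetical stand-in for whatever batched inference call your serving stack provides; real serving systems often also collect requests over a short time window, which this simplified version omits.

```python
from typing import Callable, List, Sequence


def run_in_batches(
    prompts: Sequence[str],
    generate_batch: Callable[[List[str]], List[str]],
    max_batch_size: int = 8,
) -> List[str]:
    """Send prompts to the model in groups instead of one call per request.

    `generate_batch` is a hypothetical batched inference call; it must return
    exactly one output per input, in the same order.
    """
    if max_batch_size < 1:
        raise ValueError("max_batch_size must be at least 1")
    outputs: List[str] = []
    for start in range(0, len(prompts), max_batch_size):
        batch = list(prompts[start:start + max_batch_size])
        results = generate_batch(batch)  # one model call for the whole group
        if len(results) != len(batch):
            raise RuntimeError("generate_batch returned the wrong number of outputs")
        outputs.extend(results)
    return outputs
```

Larger batches usually raise throughput per unit of compute at the cost of some per-request latency, so the batch size is worth tuning against whatever latency target the use case actually requires.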

Instead of adding layers of infrastructure to patch over problems, look for ways to simplify the system. A leaner, more focused design is easier to maintain and often performs better as well. For example, some teams have found that narrowing the model’s scope to a handful of key use cases both reduces complexity and increases reliability. Others design their pipelines to fall back to deterministic rules when the model fails, so the system degrades gracefully instead of failing outright. These are the kinds of insights that come from questioning assumptions and focusing on fundamentals.
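The fallback idea can be sketched in a few lines of Python. The rules, canned replies, and the `call_model` callable below are hypothetical placeholders; the point is the shape: try the model, and if it raises an error or returns nothing usable, answer from deterministic rules instead of surfacing a failure.

```python
import re
from typing import Callable

# Hypothetical deterministic rules: keyword pattern -> canned reply.
FALLBACK_RULES = [
    (re.compile(r"\b(refund|return)s?\b", re.IGNORECASE),
     "Refund requests can be started from the Orders page."),
    (re.compile(r"\bpassword\b", re.IGNORECASE),
     "Use the 'Forgot password' link on the sign-in page to reset it."),
]
DEFAULT_REPLY = "Sorry, I can't answer that right now. A support agent will follow up."


def rule_based_answer(question: str) -> str:
    """Return the first matching canned reply, or a safe default."""
    for pattern, reply in FALLBACK_RULES:
        if pattern.search(question):
            return reply
    return DEFAULT_REPLY


def answer(question: str, call_model: Callable[[str], str]) -> str:
    """Try the model first; degrade to deterministic rules on failure."""
    try:
        reply = call_model(question)
    except Exception:
        # Broad catch for illustration: timeouts, rate limits, server errors.
        return rule_based_answer(question)
    if not reply or not reply.strip():
        # An empty or whitespace-only reply is treated as a failure too.
        return rule_based_answer(question)
    return reply
```

In practice you would likely also validate the model’s output (length, format, policy checks) before trusting it, and log each fallback so that frequent degradations show up in monitoring.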

Building a Clear Path to Scalability

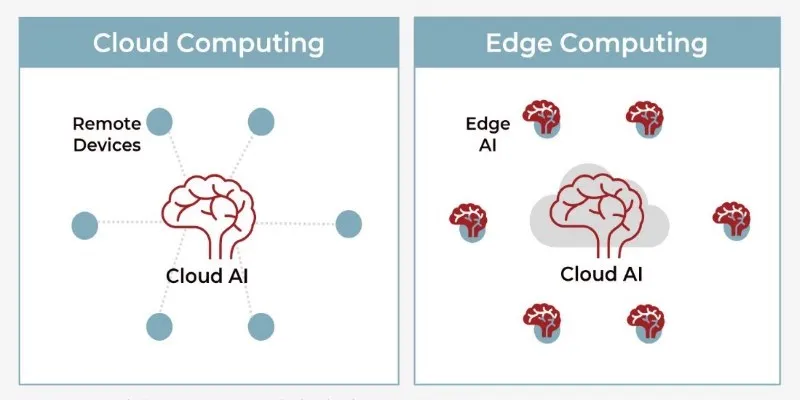

Scaling a generative AI system to handle real user traffic adds another layer of difficulty. First principles can help. Scalability is not just about adding servers or processing more data—it’s about understanding what actually scales well and what does not. Prototypes assume perfect conditions: clean data, consistent inputs, and predictable behavior. Production systems face a messy, unpredictable reality.

By examining the prototype from the ground up, you can identify which parts need to be more robust and which can stay lightweight. If a model depends on frequent retraining, you need a pipeline that reliably delivers updated data. If users ask unpredictable questions, fallback mechanisms or human review may be necessary. If demand spikes at certain times, plan capacity accordingly. Analyzed at this basic level, these challenges become predictable rather than surprising.

It’s also worth questioning whether scaling horizontally is always the answer. Sometimes, investing in optimization (better prompts, more efficient models, improved caching) can reduce load without expanding infrastructure. Reasoning from cause and effect rather than convention helps you design systems that are both scalable and efficient.
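Caching is one of the cheaper optimizations to try. The following sketch is a small in-process LRU cache keyed on model name, a normalized prompt, and sampling temperature. The class name and the normalization rule (collapsing whitespace and lowercasing) are assumptions that suit FAQ-style traffic and may be too aggressive where case or spacing carries meaning. It only makes sense where returning an earlier answer for a repeated request is acceptable.

```python
from collections import OrderedDict
from typing import Callable, Tuple

Key = Tuple[str, str, float]


class ResponseCache:
    """Hypothetical in-process LRU cache for model responses."""

    def __init__(self, max_entries: int = 1024) -> None:
        self.max_entries = max_entries
        self._store: "OrderedDict[Key, str]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(model: str, prompt: str, temperature: float) -> Key:
        # Assumed normalization: collapse whitespace and ignore case.
        normalized = " ".join(prompt.split()).lower()
        return (model, normalized, temperature)

    def get_or_generate(self, model: str, prompt: str, temperature: float,
                        generate: Callable[[str], str]) -> str:
        key = self._key(model, prompt, temperature)
        if key in self._store:
            self.hits += 1
            self._store.move_to_end(key)  # mark as most recently used
            return self._store[key]
        self.misses += 1
        response = generate(prompt)  # only pay for inference on a miss
        self._store[key] = response
        if len(self._store) > self.max_entries:
            self._store.popitem(last=False)  # evict least recently used entry
        return response
```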

Conclusion

Moving generative AI from prototype to production can feel like a steep climb, but it doesn’t have to be. By applying first principles thinking, teams can strip away unexamined assumptions, clarify what actually matters, and make smarter decisions about how to design and deploy their systems. This approach leads to simpler, more reliable, and more cost-effective solutions that align with real-world needs. Generative AI has enormous potential, but fulfilling it requires clear thinking and deliberate choices, qualities that first principles help cultivate. Rather than a hurdle to clear, production then becomes a process of understanding what truly works and building it properly from the start.
