Automatic Speech Recognition (ASR) systems are integral to modern technology, from virtual assistants to transcription services. However, these systems require extensive transcribed speech data, which many languages and dialects lack. Consequently, ASR performance diminishes significantly for underrepresented languages and regions.

Researchers are turning to synthetic data to close this gap. One promising method is voice conversion, which modifies a recorded voice to sound like a different speaker while preserving the spoken words. This raises a practical question: can voice conversion improve ASR accuracy in low-resource settings by enriching the available training data?

The Challenge of Low-Resource ASR

Building a reliable ASR system demands vast amounts of recorded and labeled speech. Languages like English and Mandarin have extensive archives, but low-resource languages often have only sparse data, or data that represents a narrow slice of their speakers. Models trained on such limited material often fail when they encounter the diverse speakers, accents, and acoustic environments of real-world use. Dialects, regional variants, and less-documented languages are particularly prone to misrecognition, because the existing recordings may come from a small, homogeneous group of speakers recorded under ideal conditions.

Collecting more real-world data is costly and logistically challenging, involving the recruitment of diverse speakers and recording in natural settings. Even then, variations such as pitch, speed, or background conditions might remain underrepresented. Artificially augmenting the data offers a more efficient solution, and this is where voice conversion shows promise.

How Voice Conversion Works

Voice conversion alters the vocal identity of a recorded utterance without changing the spoken content. For instance, it can transform a recording by a young male speaker to sound like an older female speaker saying the same words. It adjusts vocal features like pitch, tone, timbre, and rhythm to match the desired target while preserving the linguistic message.

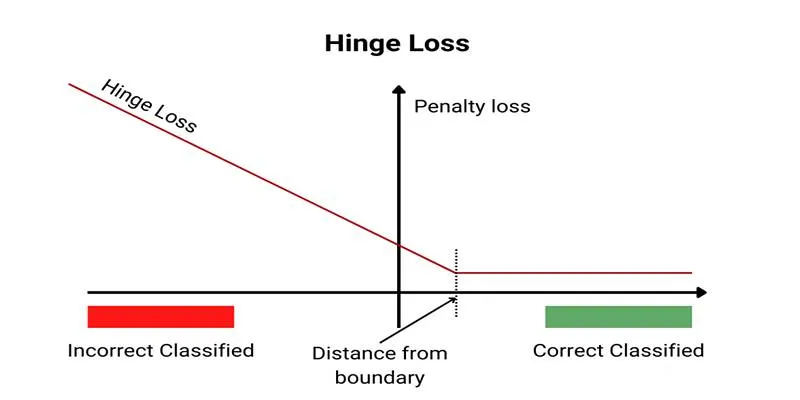

Technically, the system extracts features representing the content and separates them from those characterizing the speaker. It then resynthesizes the audio, combining the content with the target speaker’s characteristics. Traditional methods relied on statistical modeling, but newer approaches utilize deep learning to produce more natural-sounding results with fewer artifacts.
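To make the idea of separating content from speaker concrete, here is a toy sketch in Python. It borrows the idea behind adaptive instance normalization, which some neural voice converters use. The per-dimension mean and standard deviation of an utterance's features are treated as a rough proxy for speaker identity, and the normalized frames that remain are treated as the content. Real systems learn these representations with trained encoders and then run a vocoder to turn the features back into audio, neither of which is shown. The hand-made feature vectors and the names `channel_stats` and `convert_statistics` are illustrative assumptions, not any particular system's API.

```python
from statistics import fmean, pstdev

# Toy assumption: an utterance is a list of frames, each frame a list of feature values
# (for example, one column of a spectrogram). No audio is read or synthesized here.
Frame = list[float]


def channel_stats(frames: list[Frame]) -> tuple[list[float], list[float]]:
    """Per-dimension mean and population standard deviation across all frames."""
    dims = list(zip(*frames))  # transpose: one tuple of values per feature dimension
    means = [fmean(d) for d in dims]
    stds = [pstdev(d) for d in dims]
    return means, stds


def convert_statistics(source: list[Frame], target: list[Frame], eps: float = 1e-8) -> list[Frame]:
    """Toy AdaIN-style conversion: strip the source's per-dimension statistics
    (a crude stand-in for speaker identity) and impose the target's statistics."""
    src_mean, src_std = channel_stats(source)
    tgt_mean, tgt_std = channel_stats(target)
    converted = []
    for frame in source:
        # Normalize away the source statistics; the frame-to-frame shape ("content") remains.
        normalized = [(x - m) / (s + eps) for x, m, s in zip(frame, src_mean, src_std)]
        # Re-scale and shift using the target speaker's statistics.
        converted.append([z * s + m for z, m, s in zip(normalized, tgt_mean, tgt_std)])
    return converted
```

Because only the statistics change, the frame-by-frame shape of the source survives, which is the toy analogue of keeping the words while swapping the voice.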

In ASR training, this means a single authentic recording can be expanded into several synthetic ones, each mimicking a different speaker profile. This creates a richer dataset and helps the model generalize beyond the limited real-world examples.
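The expansion step can be sketched as a transformation of the training manifest. This is a minimal sketch, assuming a hypothetical `convert` function that takes a source audio path and a target speaker label and returns the path of the converted audio; the `Utterance` record and its fields are also illustrative assumptions. The key point is that the transcript is copied unchanged, since voice conversion is meant to preserve the spoken content.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Utterance:
    audio_path: str
    transcript: str
    speaker: str
    is_synthetic: bool = False


# Hypothetical converter signature: (source audio path, target speaker) -> converted audio path.
Converter = Callable[[str, str], str]


def expand_with_conversion(real: list[Utterance], targets: list[str], convert: Converter) -> list[Utterance]:
    """Return the real utterances plus one converted copy per target speaker.

    The transcript is copied as-is because voice conversion is assumed to
    preserve the words; only the audio and the speaker label change.
    """
    expanded = list(real)
    for utt in real:
        for target in targets:
            if target == utt.speaker:
                continue  # converting a voice into itself adds no new speaker
            expanded.append(Utterance(
                audio_path=convert(utt.audio_path, target),
                transcript=utt.transcript,
                speaker=target,
                is_synthetic=True,  # keep provenance so synthetic data can be disclosed or filtered
            ))
    return expanded
```

With five target voices, for example, each real utterance yields up to five extra training examples. The `is_synthetic` flag keeps provenance attached to every example, which also supports the transparency concerns raised under the limitations.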

Benefits of Voice Conversion for Low-Resource ASR

Voice conversion can substantially increase speaker diversity in low-resource ASR datasets. Many low-resource datasets feature the same few voices repeatedly, so models trained on them overfit to those speakers and perform poorly on unfamiliar voices. By generating varied synthetic voices from the same recordings, voice conversion adds diversity without requiring new recordings, so the dataset better reflects the range of speakers the system will encounter.
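One way to check whether augmentation actually broadens the speaker pool (an illustrative metric, not one this article prescribes) is the "effective number of speakers": the exponential of the Shannon entropy of the speaker distribution. It equals the speaker count when utterances are spread evenly and falls toward 1 when a few voices dominate. The function name is a hypothetical choice.

```python
from collections import Counter
from math import exp, log


def effective_speakers(speakers: list[str]) -> float:
    """Exponential of the Shannon entropy (natural log) of the speaker distribution.

    Equals the number of speakers when utterances are spread evenly, and
    approaches 1 as a single voice dominates. Returns 0.0 for an empty list.
    """
    if not speakers:
        return 0.0
    counts = Counter(speakers)
    total = len(speakers)
    entropy = -sum((c / total) * log(c / total) for c in counts.values())
    return exp(entropy)
```

For example, a dataset in which 9 of every 10 utterances come from one speaker scores about 1.38, even though two speakers are present. Computing the value before and after adding converted voices shows whether the augmentation spread the data across more voices or simply added more copies of the dominant ones.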

Another advantage is adaptation to specific speaker traits. If a model must serve a community with a particular accent or tone pattern but lacks data reflecting those traits, voice conversion can approximate them and expose the model to more realistic conditions. For example, synthetic recordings that emulate speakers from different age groups or regional dialects can help the model adjust to those groups.

Experiments show that ASR systems trained on a mix of real and voice-converted data achieve lower word error rates than those trained solely on real data, particularly in low-resource settings. The size of the improvement depends on the naturalness and quality of the converted speech: high-fidelity voice conversion systems produce training material that is both realistic and useful for learning.
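Word error rate (WER) is the standard metric behind comparisons like these: the number of word substitutions, deletions, and insertions needed to turn the recognizer's output into the reference transcript, divided by the number of words in the reference. Below is a minimal Python version using a word-level edit distance. It assumes whitespace tokenization and performs no case or punctuation normalization, which real evaluation setups usually add.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words,
    computed with a word-level Levenshtein (edit distance) alignment."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j]: edit distance between the reference words seen so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion: reference word missing from the hypothesis
                curr[j - 1] + 1,     # insertion: extra word in the hypothesis
                prev[j - 1] + cost,  # substitution, or a match when cost == 0
            )
        prev = curr
    return prev[-1] / len(ref)
```

To compare a real-only model with a real-plus-converted model, compute WER for both on the same held-out set of real recordings. Scoring on converted speech would not show whether the model generalizes to real users.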

Limitations and Future Directions

Voice conversion has its limits. Synthetic data is still synthetic, and relying on it too heavily can skew what the model learns. Overexposure to artificial voices, especially ones with subtle artifacts or unnatural phrasing, can hurt performance when the model is evaluated on real-world speech. Balancing real and synthetic material is crucial to keeping the model grounded.
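One simple way to enforce that balance is a cap on how many synthetic examples are drawn per real one. This is a sketch under the assumption that a fixed ratio is an adequate control. No specific ratio is given here; it would need to be tuned against WER on held-out real speech. `mix_real_and_synthetic` and `synthetic_per_real` are illustrative names.

```python
import random
from typing import TypeVar

T = TypeVar("T")


def mix_real_and_synthetic(real: list[T], synthetic: list[T], synthetic_per_real: float, seed: int = 0) -> list[T]:
    """Combine real and synthetic examples, keeping at most
    `synthetic_per_real` synthetic examples for every real one."""
    if synthetic_per_real < 0:
        raise ValueError("synthetic_per_real must be non-negative")
    rng = random.Random(seed)  # fixed seed so the mix is reproducible
    budget = min(int(synthetic_per_real * len(real)), len(synthetic))
    chosen = rng.sample(synthetic, budget)  # random subset, drawn without replacement
    mixed = real + chosen  # every real example is always kept
    rng.shuffle(mixed)  # interleave so batches are not all-real or all-synthetic
    return mixed
```

Keeping every real example and subsampling only the synthetic pool means the authentic recordings are never crowded out, however many converted copies were generated.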

Another limitation stems from imperfect conversions. Although the technology is improving, some converted samples still sound artificial or lose subtle linguistic cues. This matters most in tonal languages, where pitch patterns distinguish words, and in any language where prosody carries meaning. Improving the naturalness of converted speech remains a research priority.

Ethical considerations are essential as well. Using someone’s voice to generate synthetic data without consent or failing to disclose which parts of a dataset are artificial could lead to misuse or harm. Responsible use of voice conversion in ASR development requires transparency and safeguards.

Looking ahead, combining voice conversion with other data augmentation techniques, such as tempo changes or added background noise, may yield even better results. Newer neural architectures and better speaker representations continue to improve conversion quality, making it likely that voice conversion will become a standard part of low-resource ASR training.
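Background noise is one of the easiest augmentations to stack on top of voice conversion. The sketch below mixes a noise clip into a (possibly converted) utterance at a chosen signal-to-noise ratio, using the standard definition SNR_dB = 10 * log10(P_signal / P_noise), with power measured as mean squared amplitude. It works on plain lists of samples to stay dependency-free; the function name is illustrative, and tempo changes are not covered.

```python
import math


def add_noise_at_snr(signal: list[float], noise: list[float], snr_db: float) -> list[float]:
    """Mix `noise` into `signal` so the added noise sits at `snr_db` below the signal.

    SNR_dB = 10 * log10(P_signal / P_noise), where P is the mean squared amplitude.
    The noise clip is tiled or truncated to the signal length, then scaled.
    """
    if not signal or not noise:
        raise ValueError("signal and noise must be non-empty")
    # Repeat (or cut) the noise clip so it covers exactly the length of the signal.
    noise = [noise[i % len(noise)] for i in range(len(signal))]
    p_signal = sum(x * x for x in signal) / len(signal)
    p_noise = sum(n * n for n in noise) / len(noise)
    if p_noise == 0:
        raise ValueError("noise must not be silent")
    # Choose scale so that p_signal / (scale**2 * p_noise) == 10 ** (snr_db / 10).
    scale = math.sqrt(p_signal / (p_noise * 10 ** (snr_db / 10)))
    return [x + scale * n for x, n in zip(signal, noise)]
```

Drawing `snr_db` at random per utterance from a range (for example, 5 to 20 dB) is one way to expose the model to both cleaner and noisier conditions.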

Conclusion

Voice conversion offers a promising way to enhance ASR performance in low-resource settings by creating more diverse and representative training datasets. It can simulate a broader range of speakers and speaking styles, helping models handle the variety of real-world voices they encounter. While it cannot replace real data entirely, it provides a valuable supplement when authentic recordings are scarce. As conversions become more natural and effective, this approach can extend ASR's reach to more languages and dialects, making speech technology more inclusive for underserved communities.