Artificial intelligence in 2025 sits at a fascinating crossroads. Machines are no longer confined to performing only repetitive, predefined tasks. Instead, we are witnessing the rise of agentic AI systems designed not just to act but to make decisions on their own within defined boundaries. This shift raises pressing questions about how much control we should delegate, who remains responsible when things go wrong, and how much independence these agents should have. As automation and autonomy advance together, a clearer conversation about accountability is becoming unavoidable, shaping the way we live and work in tangible ways.

From Automation to Autonomy: A Natural Evolution

For decades, automation was about efficiency. Machines were programmed to execute a set of instructions repeatedly, freeing humans from manual work and speeding up production. Think of factory robots, assembly lines, or even software bots processing invoices. These systems were dependable but rigid. They did not adapt, learn, or decide.

The next step, autonomy, is not just about speed or repetition but about adaptability. In 2025, AI agents are expected to function in more unpredictable environments. They process data in real time, weigh multiple options, and make context-driven choices. Autonomous drones deliver medical supplies through crowded urban spaces, negotiating obstacles without human guidance. Customer service agents hold conversations that are hard to distinguish from those with human representatives, resolving disputes without escalation. This transition is powered by advances in natural language understanding, reinforcement learning, and multi-modal data processing, allowing machines to interpret not just instructions but intent and context.

This progression does not mean automation has disappeared. Routine automation remains a foundation for many industries. But autonomy builds on it, expanding what machines can achieve without constant oversight. Responsibility in AI becomes relevant here because greater autonomy also means systems operate beyond the immediate grasp of their creators. This raises the question: Who answers when things go wrong?

The Weight of Accountability in a Machine-Driven World

Accountability has lagged behind the pace of technical progress. With traditional automation, the chain of responsibility was clear. A human programmed the task, and the system executed it. If there was a fault, engineers traced it back to a programming error or a maintenance lapse. With autonomous systems, accountability blurs. If an AI-powered vehicle makes a split-second decision that results in harm, was it the developer, the manufacturer, or the user at fault?

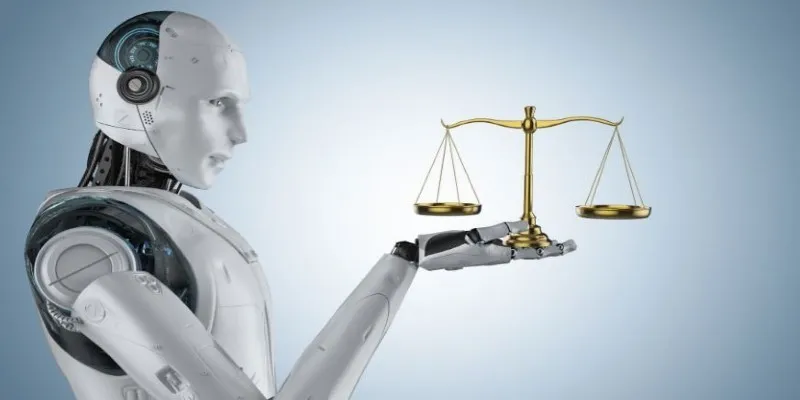

In 2025, laws and ethical frameworks are still evolving to catch up. Some governments are introducing rules that define liability for autonomous systems based on the degree of control retained by humans. Others are experimenting with the idea of “AI personhood” for certain agents, treating the systems themselves as accountable entities. Both approaches have their critics. Legal scholars warn that blaming an algorithm risks absolving human actors of their oversight responsibilities. Technologists argue that assigning full liability to developers might stifle innovation and discourage risk-taking.

This tension is especially evident in sectors like healthcare, finance, and transportation, where decisions can have life-or-death consequences. Some organizations now build in layers of supervision, where an autonomous system’s decisions are audited in real time by human monitors. Others advocate for “explainable AI,” where every decision can be traced and understood after the fact. This growing insistence on transparency is not just about trust but about preserving a sense of responsibility in AI development and deployment.

Agentic AI: Beyond Tools, Toward Partners

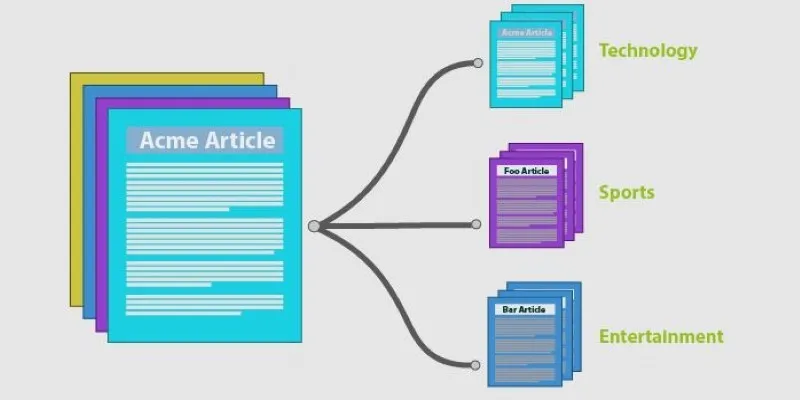

Agentic AI describes systems that behave more like independent agents than passive tools. Unlike standard automation, which requires human initiation, or even narrow autonomy, which sticks to a defined set of goals, agentic AI can pursue objectives, negotiate trade-offs, and adjust its strategies. In essence, these systems act more like partners than instruments.

In 2025, agentic AI is being explored in education, climate science, and creative industries. In education, AI tutors are developing personalized lesson plans based on student progress and mood, intervening when necessary without waiting for teacher input. In climate science, agentic systems analyze weather patterns, recommend policy interventions, and even coordinate responses between human teams across the globe. In creative industries, they co-author books, compose music, or even direct films while collaborating with human artists.

What sets agentic AI apart is the perception of intent. Even if these systems do not truly “understand” in a human sense, their ability to pursue goals and adjust tactics gives them the appearance of intent, which changes how humans relate to them. This dynamic makes clear accountability even more important, because once machines are seen as actors in their own right, it becomes tempting to shift blame onto them and away from the people who build and deploy them. Developers and users need to remain conscious that these are still human-designed systems, and ultimate responsibility rests with us.

Preparing for the Next Phase

The combination of automation, autonomy, and agentic AI brings with it an unavoidable responsibility to define clear roles and safeguards. Relying on these systems without considering the implications risks eroding trust and introducing hazards. Transparency in decision-making processes, clear lines of accountability, and consistent oversight are all being discussed more actively in 2025 than ever before.

One trend gaining traction is embedding “accountability by design” into AI systems. This means engineers and policymakers work together from the outset to ensure systems log their decisions, offer explanations when queried, and have clear fail-safes. Another development is user education. Just as drivers must learn traffic laws before operating a car, users of autonomous systems are being trained to understand limitations and responsibilities.
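The three properties named above (decision logging, explanations on request, and a fail-safe) can be illustrated with a minimal Python sketch. Everything in it is hypothetical and chosen for illustration: the `AccountableAgent` class, the `min_confidence` threshold, and the `escalate_to_human` action are assumptions, not part of any particular framework or regulation.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Decision:
    """One logged decision: what was done, how sure the system was, and why."""
    action: str
    confidence: float
    rationale: str
    timestamp: str


@dataclass
class AccountableAgent:
    """Hypothetical wrapper that logs decisions, explains them, and fails safe."""
    min_confidence: float = 0.8  # assumed threshold, for illustration only
    log: list = field(default_factory=list)

    def decide(self, action: str, confidence: float, rationale: str) -> Decision:
        # Fail-safe: when confidence is too low, hand the case to a person
        # instead of acting, and record what the system had proposed.
        if confidence < self.min_confidence:
            rationale = (
                f"proposed '{action}' at confidence {confidence:.2f}, "
                f"below threshold {self.min_confidence:.2f}; reason given: {rationale}"
            )
            action = "escalate_to_human"
        decision = Decision(
            action=action,
            confidence=confidence,
            rationale=rationale,
            timestamp=datetime.now(timezone.utc).isoformat(),
        )
        # Every decision is appended to the log, including escalations.
        self.log.append(decision)
        return decision

    def explain(self, index: int) -> str:
        # Explanation on request: return the stored rationale for a past decision.
        d = self.log[index]
        return f"[{d.timestamp}] {d.action}: {d.rationale}"
```

In this sketch, a call such as `AccountableAgent().decide("approve_refund", 0.40, "receipt unreadable")` would be recorded as an escalation rather than an approval, and `explain(0)` would return the stored reason. A real system would also need tamper-resistant storage and a defined review process, which are outside the scope of this example.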

Even as agentic AI becomes more sophisticated, it is still shaped by human priorities, biases, and intentions. Responsibility in AI remains central to these conversations. Without clear frameworks, the benefits of agentic AI could be overshadowed by misuse or unintended consequences. Maintaining a balance between granting machines autonomy and keeping humans accountable is a challenge that societies are now confronting head-on.

Conclusion

By 2025, automation has evolved into agentic AI—machines that act independently, resembling partners rather than tools. This shift demands a deeper focus on accountability and responsibility in AI to keep systems aligned with human intent. As autonomy grows, maintaining oversight while sharing control becomes critical. Balancing trust and responsibility will define our future relationship with intelligent machines, ensuring they serve human values without diminishing our role.
