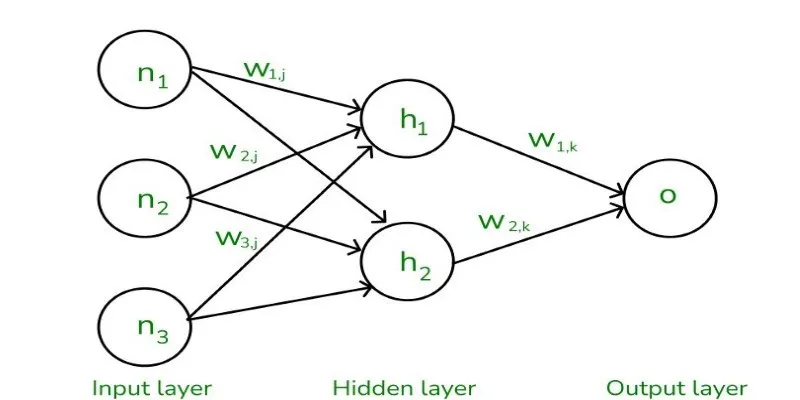

Managing large-scale Kubernetes infrastructure can be daunting because of dynamic workloads, scaling needs, and constant configuration changes. AI and machine learning can help by automating decisions, reducing errors, and streamlining processes. These technologies help teams forecast system behavior, allocate resources efficiently, and minimize downtime.

With AI techniques, developers can resolve issues faster and improve observability. Machine learning models learn from historical trends and apply what they learn to real-time decisions. By combining AI-powered Kubernetes tools with sound engineering practices, teams can achieve greater stability and efficiency. This guide explores how AI for Kubernetes management can reduce complexity and enable more intelligent operations.

Automating Kubernetes Operations with AI

In Kubernetes environments, AI can take over labor-intensive tasks such as configuration, scheduling, and scaling. Machine learning models suggest settings based on observed data, reducing human error and saving time. AI tools also detect performance trends and early failure signals, so teams can respond before problems escalate, which supports higher uptime and faster deployment cycles.

AI can dynamically adjust workloads based on traffic or usage trends, preventing over-provisioning and reducing costs. Machine learning also supports smarter workload placement by matching each pod to a node that suits its resource profile. AI improves alerts and notifications as well, surfacing actionable insights instead of a flood of messages. These features allow AI-powered Kubernetes tools to simplify infrastructure management, freeing engineers to focus on innovation rather than routine tasks.
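To make traffic-based adjustment concrete, here is a minimal sketch in Python. It assumes a simple exponentially weighted moving average as the forecasting model and a hypothetical per-pod capacity figure (`rps_per_pod`). The function names and numbers are illustrative only and do not come from any particular tool.

```python
import math


def forecast_next(samples, alpha=0.5):
    """Exponentially weighted moving average: recent samples weigh more."""
    if not samples:
        raise ValueError("need at least one sample")
    estimate = samples[0]
    for value in samples[1:]:
        estimate = alpha * value + (1 - alpha) * estimate
    return estimate


def replicas_for(expected_rps, rps_per_pod, min_replicas=1, max_replicas=20):
    """Convert expected traffic into a replica count, clamped to safe bounds."""
    needed = math.ceil(expected_rps / rps_per_pod)
    return max(min_replicas, min(max_replicas, needed))


# Hypothetical requests-per-second samples, oldest first.
recent_rps = [100, 120, 160, 200]
expected = forecast_next(recent_rps)
replicas = replicas_for(expected, rps_per_pod=50)  # rps_per_pod is an assumed capacity
print(f"expected load: {expected} rps, suggested replicas: {replicas}")
```

A production system would likely use a richer model that accounts for seasonality, but the shape is the same: estimate the load first, then size the workload to match it.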

Improving Observability and Monitoring Using AI

Observability is crucial in Kubernetes, where traditional monitoring tools may fall short. AI improves monitoring by learning normal system behavior and flagging trends and anomalies early, which improves visibility and speeds up root cause identification. Machine learning models analyze logs, metrics, and events to separate real risks from noise, reducing false alarms.
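One of the simplest forms of the anomaly detection described above is a rolling z-score: each new sample is compared with the mean and standard deviation of the samples just before it. The sketch below uses only the Python standard library. The window size, the threshold, and the latency values are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(values, window=5, threshold=3.0):
    """Return indexes whose value deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)
        if sigma == 0:
            continue  # flat history: a z-score is undefined, so skip this point
        if abs(values[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical request latencies in milliseconds, with one spike.
latency_ms = [100, 102, 98, 101, 99, 100, 250, 101, 100]
spikes = find_anomalies(latency_ms)
print("anomalous sample indexes:", spikes)
```

Note that the spike itself inflates the statistics of the windows that follow it. Real detectors often use robust estimators such as the median to limit that effect.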

AI integration makes visualization more intelligent, with dashboards adapting to show the insights relevant to each use case. Developers spend less time sifting through logs manually because AI highlights the critical findings. AI-based observability tools also support performance tuning with forecasts based on historical trends. With AI and machine learning, monitoring becomes proactive rather than reactive, leading to improved system reliability and user experience.

Optimizing Resource Allocation Through Machine Learning

Resource allocation in Kubernetes is intricate: over-allocation wastes resources, and under-allocation causes performance issues. Machine learning addresses this by learning usage patterns and adapting resources accordingly. AI continuously monitors CPU, memory, and storage use to forecast future demand and guide workload balancing. ML models identify underutilized or overutilized pods and adjust resource requests and limits in real time to keep applications performing smoothly.
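As a rough illustration of learning from usage patterns, the sketch below derives a CPU request from observed samples by taking a high percentile and adding headroom. This is a simple statistical stand-in for a trained model. The 90th percentile, the 20% headroom, and the sample values are assumptions, not recommendations from any specific tool.

```python
def percentile(samples, pct):
    """Nearest-rank percentile for an integer pct between 1 and 100."""
    ordered = sorted(samples)
    rank = max(1, (pct * len(ordered) + 99) // 100)  # integer ceiling division
    return ordered[rank - 1]


def recommend_request(samples, pct=90, headroom_pct=20):
    """Suggest a request: the chosen percentile of usage plus headroom."""
    base = percentile(samples, pct)
    return (base * (100 + headroom_pct) + 99) // 100  # round up to a whole unit


# Hypothetical CPU usage samples in millicores, including one short burst.
cpu_millicores = [120, 135, 110, 140, 150, 130, 125, 145, 138, 400]
current_request = 500  # assumed value configured on the pod today
suggested = recommend_request(cpu_millicores)
print(f"current request: {current_request}m, suggested: {suggested}m")
```

Using a percentile rather than the maximum keeps a single burst from driving the recommendation, at the cost of occasional throttling during rare peaks.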

Enhanced by AI, cluster autoscaling becomes predictive rather than reactive, minimizing delays and downtime. As AI learns from real data, resource throttling becomes more intelligent, optimizing cloud costs and usage. Teams using AI for Kubernetes management experience improved performance and reduced congestion, with intelligent resource tuning enabling faster and more controlled scaling.
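The difference between reactive and predictive scaling can be shown with a small trend extrapolation. The sketch below fits a least-squares line to recent cluster CPU utilization and checks whether the projected value crosses a threshold a few intervals ahead. The 80% threshold, the three-interval horizon, and the sample data are assumptions for illustration.

```python
def fit_line(ys):
    """Ordinary least squares over evenly spaced samples (x = 0, 1, 2, ...)."""
    n = len(ys)
    if n < 2:
        raise ValueError("need at least two samples")
    xs = range(n)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    den = sum((x - x_mean) ** 2 for x in xs)
    slope = num / den
    return slope, y_mean - slope * x_mean


def predict(ys, steps_ahead):
    """Project the fitted line steps_ahead intervals past the last sample."""
    slope, intercept = fit_line(ys)
    return intercept + slope * (len(ys) - 1 + steps_ahead)


# Hypothetical cluster CPU utilization in percent, one sample per interval.
cluster_cpu_pct = [50, 55, 60, 65, 70]
forecast = predict(cluster_cpu_pct, steps_ahead=3)
scale_now = forecast >= 80  # act before the threshold is actually reached
print(f"forecast: {forecast:.1f}%, add capacity now: {scale_now}")
```

A reactive autoscaler would wait until utilization actually reaches the threshold. Acting on the projection gives new nodes time to start before they are needed.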

Enhancing Security and Compliance with AI

Kubernetes security is complex and ever-evolving. AI enables faster threat monitoring and response by analyzing network traffic, logs, and API calls to detect unusual activity or potential breaches. It helps identify early warning signs such as privilege overuse and misconfigurations. Machine learning methods analyze historical attacks to anticipate emerging risks, then block traffic in real time or notify teams. AI can also check configurations against regulatory requirements and industry standards, reducing risk and supporting audits.
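A very small version of the unusual-activity detection described above is to build a baseline of which identities perform which actions, then flag anything that falls outside it. The sketch below uses a simplified, hypothetical record shape (`user`, `verb`, `resource`). Real Kubernetes audit events are more deeply nested, and a learned model would score rarity rather than apply a hard cutoff.

```python
from collections import Counter


def event_key(event):
    """Reduce an event to the combination we want to track."""
    return (event["user"], event["verb"], event["resource"])


def build_baseline(events):
    """Count how often each (user, verb, resource) combination was seen."""
    return Counter(event_key(e) for e in events)


def flag_unusual(baseline, new_events, min_seen=1):
    """Return events whose combination appears fewer than min_seen times."""
    return [e for e in new_events if baseline[event_key(e)] < min_seen]


# Hypothetical historical activity.
history = [
    {"user": "ci-bot", "verb": "get", "resource": "pods"},
    {"user": "ci-bot", "verb": "list", "resource": "pods"},
    {"user": "alice", "verb": "get", "resource": "deployments"},
]
incoming = [
    {"user": "ci-bot", "verb": "get", "resource": "pods"},
    {"user": "ci-bot", "verb": "delete", "resource": "secrets"},
]
flagged = flag_unusual(build_baseline(history), incoming)
for event in flagged:
    print("unusual:", event)
```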

AI insights help refine role-based access control (RBAC), so that users and services can reach only the resources they need. AI tools can also produce regular security health reports, supporting proactive risk management. With AI-powered Kubernetes tools, security becomes adaptive and intelligent, supporting workload protection, trust-building, and compliance goals. Integrated AI turns security into a continuous, proactive process rather than a purely reactive one.

Reducing Human Error and Complexity in Troubleshooting

Troubleshooting Kubernetes can be time-consuming and error-prone because of the platform's dynamic nature. AI eases this burden by recommending fixes based on historical event data. Machine learning models quickly analyze logs and metrics to find root causes, reducing the amount of manual searching engineers have to do.
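Recommending a fix from historical events can be sketched as a similarity search: compare the new symptom with past incidents and return the remedy attached to the closest match. The example below uses Jaccard similarity over words as a deliberately simple stand-in for a trained model. The incident records and their fixes are hypothetical.

```python
def tokens(text):
    """Lowercase a message and split it into a set of words."""
    return set(text.lower().split())


def jaccard(a, b):
    """Shared words divided by total distinct words."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def suggest_fix(message, history):
    """Return the fix and similarity score of the closest past incident."""
    query = tokens(message)
    scored = [(jaccard(query, tokens(item["symptom"])), item["fix"]) for item in history]
    score, fix = max(scored, key=lambda pair: pair[0])
    return fix, score


# Hypothetical incident history.
past_incidents = [
    {"symptom": "pod crashloopbackoff oomkilled container memory limit",
     "fix": "raise the memory limit or fix the leak"},
    {"symptom": "pod pending insufficient cpu on nodes",
     "fix": "add nodes or lower CPU requests"},
    {"symptom": "imagepullbackoff image not found in registry",
     "fix": "check the image tag and registry credentials"},
]
fix, score = suggest_fix("container oomkilled after exceeding memory limit", past_incidents)
print(f"suggested fix: {fix} (similarity {score:.2f})")
```

In practice a low similarity score should be treated as "no good match" rather than a recommendation, so a minimum score cutoff is a sensible addition.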

AI learns from patterns to prevent recurring issues and can simulate results to evaluate different solutions. This allows teams to act more intelligently and swiftly. AI-powered debugging tools offer methodical guidance, enhancing accuracy and saving time, enabling even junior engineers to resolve problems effectively. Consequently, teams reduce downtime and service disruptions. AI and machine learning in Kubernetes help manage troubleshooting complexity, leading to better team output and more stable systems.

Supporting DevOps and Continuous Delivery with AI

DevOps teams working with Kubernetes can use AI to build more reliable pipelines and speed up deployments. Machine learning identifies performance gaps and flaky tests, improving test automation and making releases more dependable. AI suggests code improvements and predicts build failures, using historical CI/CD data to optimize the delivery process. Integration with version control systems provides additional context, allowing teams to identify issues before code reaches production.
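Flaky-test detection from CI history needs little more than bookkeeping to get started. One common heuristic, sketched below, is to call a test flaky when it both passed and failed on the same commit, since the code did not change between those runs. The record fields (`test`, `commit`, `passed`) and the data are hypothetical.

```python
from collections import defaultdict


def find_flaky(runs):
    """Return tests that both passed and failed on at least one commit."""
    outcomes = defaultdict(set)
    for run in runs:
        outcomes[(run["test"], run["commit"])].add(run["passed"])
    flaky = {test for (test, _commit), seen in outcomes.items() if len(seen) == 2}
    return sorted(flaky)


# Hypothetical CI results.
ci_runs = [
    {"test": "test_login", "commit": "abc123", "passed": True},
    {"test": "test_login", "commit": "abc123", "passed": False},
    {"test": "test_checkout", "commit": "abc123", "passed": False},
    {"test": "test_checkout", "commit": "def456", "passed": True},
    {"test": "test_search", "commit": "abc123", "passed": True},
]
flaky_tests = find_flaky(ci_runs)
print("flaky tests:", flaky_tests)
```

Here `test_checkout` is not flagged: it failed on one commit and passed on another, which is consistent with a genuine bug that was later fixed.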

AI tools can flag potential deployment risks and pause or abort unsafe releases, adding an extra layer of safety to production environments. Post-deployment monitoring improves with real-time AI checks on application health, triggering rollbacks if necessary. These capabilities enable DevOps teams to deliver with greater confidence and speed. Using AI for Kubernetes management, teams can balance speed and stability, aligning development with operations and automating complex processes.
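The rollback trigger described above can be reduced to a comparison between the new release and the version it replaces. The sketch below flags a rollback when the new version's error rate is well above the baseline. The ratio, the minimum sample size, and the error-rate floor are assumed values that a real system would tune or learn from data.

```python
def should_roll_back(baseline_errors, baseline_total,
                     canary_errors, canary_total,
                     max_ratio=2.0, min_requests=100, floor=0.001):
    """Decide whether the new release looks unhealthy compared with the old one."""
    if canary_total < min_requests:
        return False  # not enough traffic yet to judge the release
    baseline_rate = baseline_errors / baseline_total if baseline_total else 0.0
    canary_rate = canary_errors / canary_total
    # The floor keeps a near-zero baseline from making any single error fatal.
    return canary_rate > max(baseline_rate, floor) * max_ratio


# Hypothetical request counts gathered after a deployment.
unhealthy = should_roll_back(10, 10_000, 30, 1_000)
healthy = should_roll_back(10, 10_000, 1, 1_000)
too_early = should_roll_back(10, 10_000, 5, 50)
print(unhealthy, healthy, too_early)
```

When the check returns true, the pipeline could revert the release, for example with `kubectl rollout undo` on the affected Deployment.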

Conclusion

Artificial intelligence and machine learning are integral to effectively managing Kubernetes complexity. These tools safeguard workloads, enhance monitoring, and automate tasks, allowing teams to deploy confidently and troubleshoot efficiently. By leveraging AI and machine learning in Kubernetes, companies reduce errors and gain enhanced visibility. Predictive insights and intelligent resource allocation contribute to more efficient system operations. AI-powered Kubernetes tools scale with infrastructure, making even complex clusters easier to manage. Investing in AI for Kubernetes management is essential for achieving stability, performance, and growth in cloud-native environments.
