Machine learning models do not perform well out of the box; they need to be configured. At the core of that configuration are hyperparameters, settings that shape how a model learns and affect its training speed, accuracy, and ability to generalize. Unlike parameters, which a model learns during training, hyperparameters are fixed before training begins. Poorly chosen hyperparameters can slow down training or reduce accuracy, whereas well-chosen ones let a model reach its potential.

Understanding hyperparameters is crucial for anyone working with machine learning, because they strongly influence both training time and model quality. This article explains what hyperparameters are, how they affect learning, and how to tune them.

What Are Hyperparameters?

Hyperparameters are settings, external to the learned model, that dictate how a machine learning model is built and trained. Unlike parameters, such as the weights in a neural network, which are learned during training, hyperparameters are set beforehand, either by hand or by a tuning procedure. They control the model’s structure and the behavior of the training process.

For instance, in a neural network, hyperparameters include the number of layers, neurons per layer, and the learning rate. In simpler models, such as decision trees, they might be tree depth or the minimum number of samples per leaf. These choices significantly impact the model’s learning capability and processing efficiency.
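As a minimal sketch of the distinction, the snippet below fits a one-variable linear model with gradient descent. The data, the function name `train_linear_model`, and the chosen values are illustrative assumptions: the learning rate and epoch count are hyperparameters fixed up front, while `w` and `b` are parameters learned from the data.

```python
# Hyperparameters: chosen before training starts (values here are arbitrary).
LEARNING_RATE = 0.05
NUM_EPOCHS = 500


def train_linear_model(xs, ys, learning_rate, num_epochs):
    """Fit y ~ w * x + b with batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0  # parameters: learned from the data
    n = len(xs)
    for _ in range(num_epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train_linear_model(xs, ys, LEARNING_RATE, NUM_EPOCHS)
print(w, b)  # close to 2 and 1
```

Changing `LEARNING_RATE` or `NUM_EPOCHS` changes how training proceeds, but the code never learns those two values from the data.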

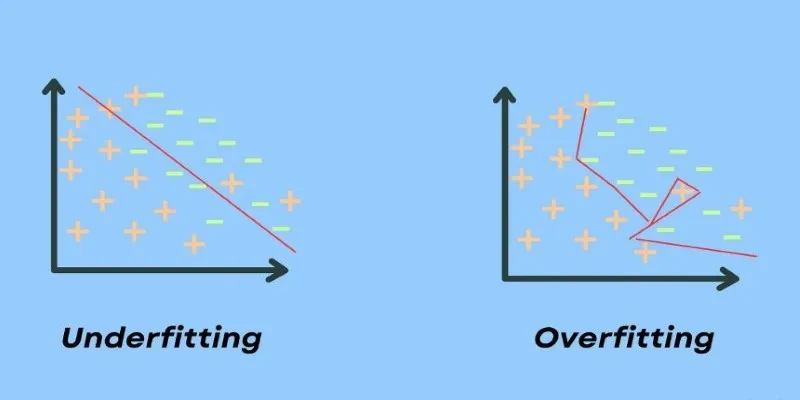

One of the most significant challenges in machine learning optimization is selecting the right hyperparameters. With too much capacity or too little regularization, a model may overfit, memorizing the training data without handling new data well. Conversely, overly restrictive settings, such as a very shallow tree or very strong regularization, can lead to underfitting, where the model fails to capture important patterns. Achieving the right balance requires testing, experience, and sometimes automated tuning methods.

Why Hyperparameters Matter in Machine Learning

Hyperparameters are the key to tuning a model. Without correctly set hyperparameters, a model may be slow to train, inaccurate, or both. Nearly every machine learning algorithm, whether simple or complex, has hyperparameters that govern its behavior.

A critical area impacted by hyperparameters is the speed-accuracy trade-off. A high learning rate might speed up training but can make learning unstable, overshooting good solutions or even diverging. A low learning rate stabilizes training but takes longer to converge. Tuning must strike a balance between the two.
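The learning-rate trade-off can be seen on a toy problem. The sketch below runs gradient descent on f(w) = w², whose minimum is at w = 0; the function name `gradient_descent` and the three learning rates are assumptions chosen for illustration.

```python
def gradient_descent(learning_rate, steps=20, start=1.0):
    """Minimize f(w) = w**2 with plain gradient descent; the gradient is 2 * w."""
    w = start
    for _ in range(steps):
        w -= learning_rate * 2 * w
    return w


slow = gradient_descent(0.01)     # stable, but still far from 0 after 20 steps
fast = gradient_descent(0.4)      # converges quickly toward 0
unstable = gradient_descent(1.1)  # each step overshoots, so |w| grows

print(slow, fast, unstable)
```

Each step multiplies `w` by `1 - 2 * learning_rate`, so on this particular function any learning rate above 1 makes the iterates grow instead of shrink.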

Model complexity is another crucial aspect. Deep neural networks can have many hyperparameters, from the activation functions used in each layer to the optimizer settings that control how weights are adjusted during training. Even simple models have them: regularized linear regression (ridge or lasso), for example, has a regularization strength that helps prevent overfitting.
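To illustrate regularization strength in a simple model, here is a sketch of one-variable ridge regression without an intercept, using its closed-form solution. The function name `ridge_slope` and the sample data are assumptions made for this example.

```python
def ridge_slope(xs, ys, reg_strength):
    """Slope w that minimizes sum((y - w*x)**2) + reg_strength * w**2."""
    numerator = sum(x * y for x, y in zip(xs, ys))
    denominator = sum(x * x for x in xs) + reg_strength
    return numerator / denominator


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

# reg_strength is the hyperparameter; the slope is the learned parameter.
slopes = [ridge_slope(xs, ys, s) for s in (0.0, 1.0, 10.0, 100.0)]
print(slopes)
```

With a strength of 0 this is ordinary least squares; as the strength grows, the learned slope is pulled toward zero, trading fit on the training data for a simpler model.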

Hyperparameters affect more than just training; they also influence how well a model generalizes to unseen data. If a model is too finely tuned to the training data, it may perform poorly on real-world data. Techniques like cross-validation are used to test different hyperparameter settings before finalizing a model.
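A rough sketch of cross-validation for comparing hyperparameter settings follows. It assumes a tiny k-nearest-neighbors regressor written from scratch, synthetic data, and interleaved folds instead of shuffled ones; all function names are hypothetical and only the standard library is used.

```python
def interleaved_folds(n_samples, n_folds):
    """Yield (train, validation) index lists; sample i belongs to fold i % n_folds."""
    for fold in range(n_folds):
        validation = [i for i in range(n_samples) if i % n_folds == fold]
        train = [i for i in range(n_samples) if i % n_folds != fold]
        yield train, validation


def knn_predict(train_x, train_y, x, k):
    """Average the targets of the (up to) k training points closest to x."""
    order = sorted(range(len(train_x)), key=lambda i: abs(train_x[i] - x))
    nearest = order[:k]
    return sum(train_y[i] for i in nearest) / len(nearest)


def cross_validated_mse(xs, ys, k, n_folds=5):
    """Mean squared validation error, averaged over the folds."""
    fold_errors = []
    for train, validation in interleaved_folds(len(xs), n_folds):
        train_x = [xs[i] for i in train]
        train_y = [ys[i] for i in train]
        squared = [(ys[i] - knn_predict(train_x, train_y, xs[i], k)) ** 2
                   for i in validation]
        fold_errors.append(sum(squared) / len(squared))
    return sum(fold_errors) / len(fold_errors)


xs = [0.5 * i for i in range(20)]
ys = [x * x for x in xs]  # synthetic data, for illustration only

candidate_ks = [1, 3, 5, 15]  # the hyperparameter values being compared
scores = {k: cross_validated_mse(xs, ys, k) for k in candidate_ks}
best_k = min(scores, key=scores.get)
print(scores, best_k)
```

Every sample is used for validation exactly once, so each candidate value of `k` is scored only on data its model did not see during fitting.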

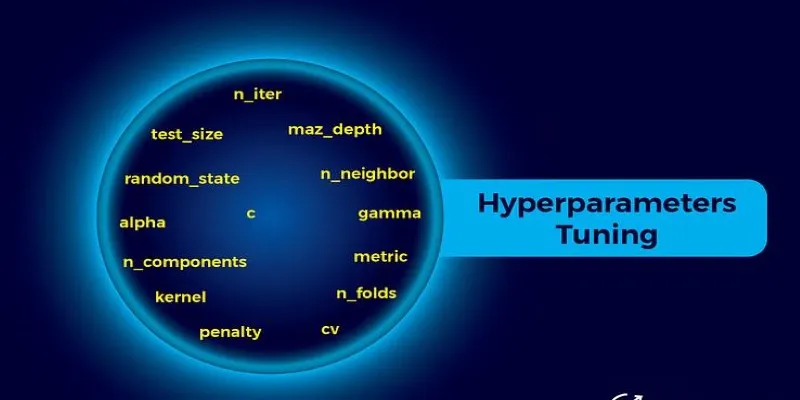

Common Hyperparameters and Their Impact

Different machine learning models have various hyperparameters that influence their performance. Here are some commonly used ones and their effects on learning:

Learning Rate: Determines the size of each update to the model’s parameters. A high learning rate can speed up training but may cause instability, while a low learning rate gives steadier progress but takes longer to converge.

Batch Size: The number of samples processed before each update to the model’s parameters. A smaller batch size allows more frequent updates, but too small a size makes the gradient estimates noisy. A larger batch size gives more stable updates but requires more memory.

Number of Epochs: Defines how many times the model goes through the training dataset. Too many epochs can cause overfitting, while too few may lead to underfitting.

Regularization Strength: Techniques like L1 and L2 regularization help prevent overfitting by penalizing large weights. The regularization strength controls how heavy that penalty is: too weak and the model may overfit, too strong and it may underfit.

Number of Layers and Neurons: In neural networks, deeper architectures with more neurons per layer can capture more complex patterns but require more data and computation to train effectively.

Tuning these hyperparameters is crucial for improving model accuracy and efficiency. The ideal values vary based on the dataset and the problem at hand, making experimentation an essential part of machine learning optimization.
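To show how batch size and number of epochs interact, the sketch below trains a one-parameter model with mini-batch stochastic gradient descent. The function `train_minibatch`, the noise-free data, and the chosen values are assumptions for illustration.

```python
import random


def train_minibatch(xs, ys, learning_rate, batch_size, num_epochs, seed=0):
    """Fit y ~ w * x with mini-batch SGD; returns (w, number_of_updates)."""
    rng = random.Random(seed)
    indices = list(range(len(xs)))
    w = 0.0
    updates = 0
    for _ in range(num_epochs):
        rng.shuffle(indices)  # visit the samples in a new order each epoch
        for start in range(0, len(indices), batch_size):
            batch = indices[start:start + batch_size]
            # Gradient of the mean squared error over this batch only.
            grad = sum(2 * (w * xs[i] - ys[i]) * xs[i] for i in batch) / len(batch)
            w -= learning_rate * grad
            updates += 1
    return w, updates


xs = [0.1 * i for i in range(1, 11)]
ys = [3.0 * x for x in xs]  # noise-free data from y = 3x

w_small, updates_small = train_minibatch(xs, ys, 0.1, batch_size=2, num_epochs=50)
w_full, updates_full = train_minibatch(xs, ys, 0.1, batch_size=10, num_epochs=50)
print(w_small, updates_small, w_full, updates_full)
```

With the same number of epochs, the smaller batch size performs five times as many updates. Because this toy data has no noise, the extra updates simply bring `w` closer to 3; on real data, small batches also make each update noisier.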

Optimizing Hyperparameters for Better Performance

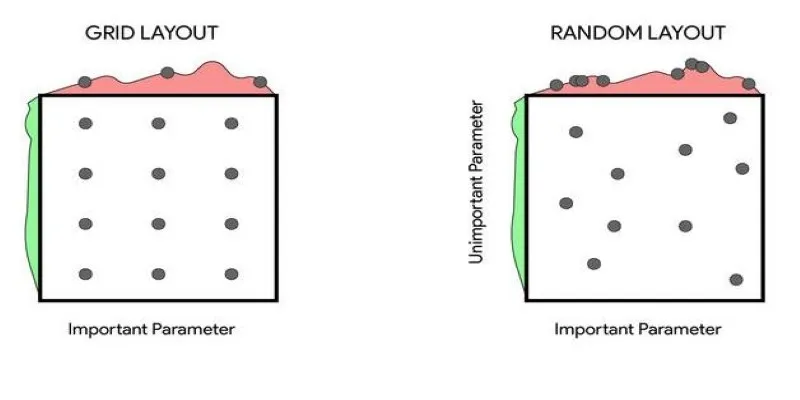

Finding the best hyperparameters isn’t a one-size-fits-all task. It involves testing different values, running experiments, and comparing results. This process, known as hyperparameter tuning, can be done in multiple ways.

One common method is grid search, where every combination from a predefined set of values is tested to find the best one. However, grid search can be slow, because the number of combinations grows exponentially with the number of hyperparameters. Another approach is random search, which samples hyperparameter combinations at random from specified ranges and often yields good results with less computation.
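Both strategies can be sketched in a few lines. In the example below, `validation_loss` is a hypothetical stand-in for "train a model with these settings and return its validation loss"; it is a made-up formula, and the search ranges are assumptions as well.

```python
import itertools
import math
import random


def validation_loss(learning_rate, num_layers):
    """Hypothetical stand-in for training a model and measuring validation loss."""
    return (math.log10(learning_rate) + 2) ** 2 + 0.1 * (num_layers - 3) ** 2


def grid_search(grid):
    """Evaluate every combination of the listed values; return (config, loss)."""
    names = list(grid)
    best = None
    for values in itertools.product(*(grid[name] for name in names)):
        config = dict(zip(names, values))
        loss = validation_loss(**config)
        if best is None or loss < best[1]:
            best = (config, loss)
    return best


def random_search(n_trials, seed=0):
    """Evaluate n_trials randomly sampled configurations; return (config, loss)."""
    rng = random.Random(seed)
    best = None
    for _ in range(n_trials):
        config = {
            "learning_rate": 10 ** rng.uniform(-4, 0),  # sampled on a log scale
            "num_layers": rng.randint(1, 6),
        }
        loss = validation_loss(**config)
        if best is None or loss < best[1]:
            best = (config, loss)
    return best


grid = {"learning_rate": [1e-4, 1e-3, 1e-2, 1e-1], "num_layers": [1, 2, 3, 4]}
best_grid = grid_search(grid)            # 4 * 4 = 16 evaluations
best_random = random_search(n_trials=16)  # same budget, different coverage
print(best_grid, best_random)
```

Grid search only ever tries the listed values, while random search with the same budget can land on values between them.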

More advanced techniques include Bayesian optimization and genetic algorithms, which use the results of earlier trials to choose which settings to try next. These methods often reduce the number of trials needed to find good values.
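The idea of letting earlier trials guide later ones can be shown with a heavily simplified evolutionary search. This is a sketch only: it uses selection and mutation without crossover, so it is not a full genetic algorithm, and it is not Bayesian optimization. The `validation_loss` function is a hypothetical stand-in for real training.

```python
import math
import random


def validation_loss(learning_rate):
    """Hypothetical stand-in for training a model and measuring validation loss."""
    return (math.log10(learning_rate) + 2) ** 2


def evolve_learning_rate(generations=10, population_size=8, seed=0):
    """Search for a learning rate by keeping the best candidates and mutating them."""
    rng = random.Random(seed)

    def loss_of(exponent):
        return validation_loss(10 ** exponent)

    # Candidates are stored as exponents, so the search runs on a log scale.
    population = [rng.uniform(-5, 0) for _ in range(population_size)]
    history = []  # best loss seen in each generation
    for _ in range(generations):
        ranked = sorted(population, key=loss_of)
        survivors = ranked[: population_size // 2]  # selection
        history.append(loss_of(survivors[0]))
        children = [e + rng.gauss(0, 0.3) for e in survivors]  # mutation
        population = survivors + children
    best = min(population, key=loss_of)
    return 10 ** best, history


best_learning_rate, history = evolve_learning_rate()
print(best_learning_rate, history)
```

Because the best candidates always survive into the next generation, the best loss in `history` never gets worse from one generation to the next.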

Automated hyperparameter tuning tools, such as Google’s AutoML or the open-source library Hyperopt, take much of the guesswork out of this process. They run trials, track the results, and propose new settings automatically, making machine learning optimization faster and less manual.

Choosing the right hyperparameters also depends on the dataset and the problem being solved. What works well for image recognition might not be ideal for text analysis. Experimentation, validation, and fine-tuning are essential to getting the most out of machine learning models.

Conclusion

Hyperparameters are the hidden levers that shape how machine learning models learn and perform. Getting them right means striking a balance between efficiency and accuracy, avoiding overfitting while ensuring the model captures meaningful patterns. Whether it’s tweaking the learning rate, adjusting the number of layers, or selecting the right batch size, small changes can make a big difference. While hyperparameter tuning may seem complex, it’s a necessary step in building reliable, high-performing models. With the right approach, whether manual tuning or automated tools, hyperparameter optimization becomes a manageable and repeatable part of building a model.
