SmolAgents were designed as lightweight, easy-to-understand AI agents that carry out real-world tasks from natural-language instructions. Until recently, however, they could not perceive their surroundings visually.

Without vision, these agents relied solely on structured inputs or predefined conditions, so every task had to be planned carefully in advance. Visual input gives them a new level of autonomy, making them more practical and responsive in unpredictable environments.

The Evolution of SmolAgents: From Language to Perception

SmolAgents previously operated in a logic-only world. They could plan actions, work toward goals, and solve problems step by step, but they had no way of knowing what their environment looked like.

Visual input changes that. Instead of depending on structured instructions, a SmolAgent can analyze an image, such as a screenshot of a web page, and decide its next action based on what it sees.

Despite this upgrade, SmolAgents remain compact, fast, and transparent. What changes is that they can now interpret their environment visually and adapt accordingly.

The Mechanism of Visual Input in a SmolAgent

To see, a SmolAgent uses a vision-language model (VLM) that takes an image as input and produces a text response. The agent uses that text to notice what has changed on screen and which actions are now possible, which makes the system more reliable and flexible than one built on fixed assumptions.
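
The Python sketch below shows a single perception step: an image and a goal go in, the model replies in text, and the agent turns that text into an action. It uses a stub model and does not reflect the smolagents library's actual API. `VisionModel`, `Action`, `parse_action`, `step`, and `fake_vlm` are hypothetical names, and the `ACTION: <name> <argument>` reply format is an assumption made only for this example.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: a vision-language model takes image bytes plus a
# text prompt and returns a text reply. A real VLM client would go here.
VisionModel = Callable[[bytes, str], str]


@dataclass
class Action:
    name: str
    argument: str


def parse_action(reply: str) -> Action:
    """Extract the first line of the assumed form 'ACTION: <name> <argument>'."""
    for line in reply.splitlines():
        if line.startswith("ACTION:"):
            parts = line[len("ACTION:"):].strip().split(maxsplit=1)
            name = parts[0] if parts else "none"
            argument = parts[1] if len(parts) > 1 else ""
            return Action(name, argument)
    return Action("none", "")  # the model did not propose an action


def step(model: VisionModel, screenshot: bytes, goal: str) -> Action:
    """One perception step: image in, text out, text turned into an action."""
    prompt = (
        f"Goal: {goal}\n"
        "Look at the screenshot and answer with one line "
        "'ACTION: <name> <argument>'."
    )
    reply = model(screenshot, prompt)
    return parse_action(reply)


def fake_vlm(image: bytes, prompt: str) -> str:
    # Stand-in for a real VLM: it "sees" a login button if the bytes mention one.
    if b"login" in image:
        return "I see a login button.\nACTION: click login_button"
    return "Nothing actionable yet.\nACTION: scroll down"


action = step(fake_vlm, b"<html>...login...</html>", "Sign in")
print(action)  # Action(name='click', argument='login_button')
```

Keeping the model behind a plain callable means any real VLM client could be dropped in without changing the step logic.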

The Significance of the Visual Input Upgrade

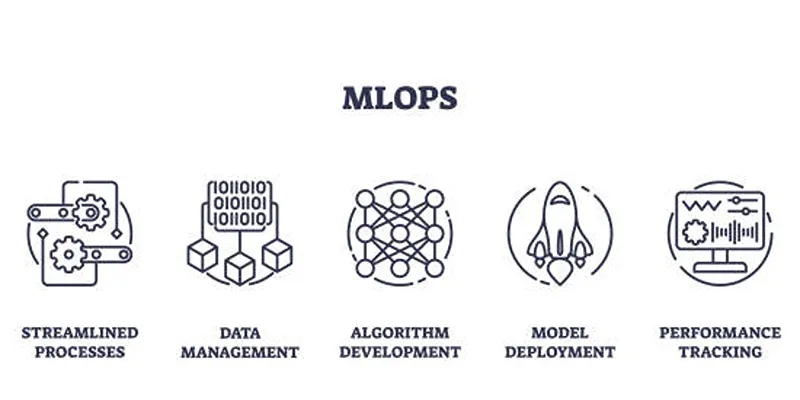

Integrating visual input into SmolAgents addresses several challenges. It reduces the brittleness caused by rigid, hard-coded assumptions about the environment, and it allows faster iteration and broader usability. Because each observation and the action taken in response can be recorded, it also offers traceability and transparency, which are crucial for debugging, improvement, and building trust.
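
The following minimal sketch makes the traceability point concrete. It runs a hypothetical observe-act loop that records every prompt, model reply, and chosen action so the run can be audited afterward. All names here are invented for illustration and are not taken from smolagents: `run_with_trace`, `TraceEntry`, `Trace`, and the stub screenshot and model functions. The `ACTION: done` stop signal is likewise an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical interface: (screenshot bytes, prompt) -> text reply.
VisionModel = Callable[[bytes, str], str]


@dataclass
class TraceEntry:
    step: int
    prompt: str
    reply: str
    action: str


@dataclass
class Trace:
    entries: List[TraceEntry] = field(default_factory=list)


def run_with_trace(
    model: VisionModel,
    take_screenshot: Callable[[], bytes],
    goal: str,
    max_steps: int = 5,
) -> Trace:
    """Observe-act loop that logs every step so a run can be audited later."""
    trace = Trace()
    for i in range(1, max_steps + 1):
        image = take_screenshot()
        prompt = f"Goal: {goal}. Reply with 'ACTION: <name>' or 'ACTION: done'."
        reply = model(image, prompt)
        # Take whatever follows the last 'ACTION:' marker; 'none' if absent.
        action = reply.rsplit("ACTION:", 1)[-1].strip() if "ACTION:" in reply else "none"
        trace.entries.append(TraceEntry(i, prompt, reply, action))
        if action == "done":
            break
    return trace


# Stubs standing in for a real browser and a real VLM.
screens = iter([b"login page", b"welcome page"])


def fake_screenshot() -> bytes:
    return next(screens)


def fake_vlm(image: bytes, prompt: str) -> str:
    return "ACTION: done" if b"welcome" in image else "ACTION: click_login"


trace = run_with_trace(fake_vlm, fake_screenshot, "Sign in")
for entry in trace.entries:
    print(entry.step, entry.action)
# 1 click_login
# 2 done
```

The `max_steps` cap keeps a confused agent from looping indefinitely. The recorded trace is what a developer would inspect when a run goes wrong.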

More broadly, this advance signals a shift toward more grounded AI: systems that respond to their surroundings rather than operating only in the abstract. Giving SmolAgents sight is not about granting them omniscience or complex reasoning abilities. The goal is to improve their awareness so they work smoothly in practical settings.

The Future of SmolAgents with Vision

Sight opens the door to further improvements, such as continuous observation and visual memory. These would bring real benefits, but adding them while keeping SmolAgents simple and practical will be a challenge.

Ethical and privacy considerations will also grow in importance, because an agent that can view a user's interface may capture sensitive information. Developers should clearly communicate what the agent sees, where that data goes, and how it is used.

Conclusion

The integration of sight marks a meaningful shift for SmolAgents, turning them from simple tools into more capable agents. They are not flawless, but they have become far more useful, showing that small models equipped with the right tools can handle real-world tasks effectively.
