Machine learning today involves more than just training models; it’s about managing the entire workflow. As datasets grow and experiments increase, tools like MLflow become essential for efficiently tracking, versioning, and deploying models. However, MLflow works best when paired with scalable infrastructure, and that’s where Google Cloud Platform (GCP) excels.

GCP services such as Cloud Storage, Vertex AI, and IAM fit naturally alongside MLflow, covering artifact storage, deployment, and access control. This guide is a hands-on walkthrough for setting up MLflow on GCP and taking full control of your machine learning lifecycle.

Why Choose MLflow and GCP?

Before diving into the technical setup, it helps to understand why MLflow fits so well within GCP's ecosystem. MLflow is an open-source platform for managing the end-to-end machine learning lifecycle. Its four main components are Tracking, Projects, Models, and the Model Registry. These work well on a single machine, but cloud infrastructure becomes vital once a team needs to collaborate and scale across multiple environments.

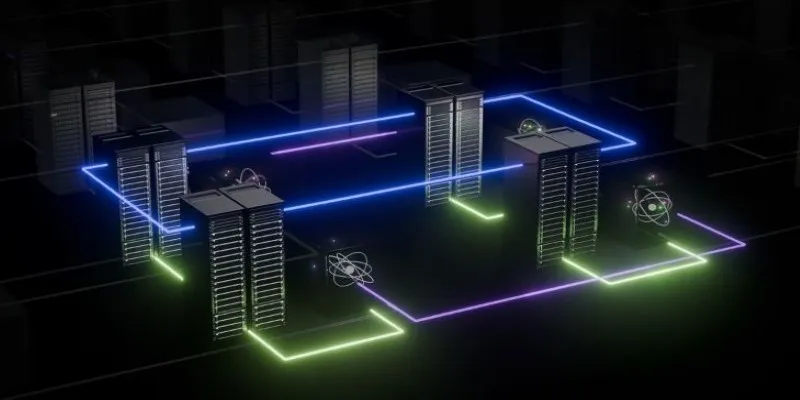

GCP offers powerful infrastructure for running MLflow. Cloud Storage is a natural home for experiment artifacts such as models and logs. Cloud SQL provides a reliable backend database for tracking metadata, so experiment history is preserved. Identity and Access Management (IAM) lets teams apply fine-grained access controls. Deploying the MLflow tracking server on Compute Engine or Google Kubernetes Engine (GKE) lets you scale operations while keeping full control over performance and resource allocation.

Setting up MLflow on GCP creates a foundation that can grow with your project, from solo tinkering to enterprise deployments. The flexibility is key. GCP doesn't force you into one architecture; it provides modular pieces you can arrange however you need.

Step-by-Step Setup: From Local to Cloud

To run MLflow effectively on GCP, you need three essential components: a backend store for metadata, an artifact store for experiment outputs, and a tracking server that powers the UI and API. These components map directly to services within Google Cloud, making the setup straightforward once you understand the flow.

Start by creating a Cloud Storage bucket to act as your artifact store. This is where MLflow saves model files, logs, and any other outputs tied to your experiments. Bucket names are globally unique, so choose a clear, descriptive one, and enable uniform bucket-level access so that permissions are managed through IAM alone. Grant the service account that handles uploads and downloads only the roles it needs; this keeps access controlled and secure.
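The bucket step can be scripted with the `google-cloud-storage` Python client. The sketch below is one way to do it, assuming Application Default Credentials are configured and that `roles/storage.objectAdmin` is the right scope for your server; the function names and the `US` location are illustrative choices, not part of MLflow or GCP.

```python
# Sketch: create the MLflow artifact bucket and grant one service account access.
# Assumes google-cloud-storage is installed and Application Default Credentials
# are configured. All names passed in are placeholders you supply.


def artifact_binding(service_account_email: str,
                     role: str = "roles/storage.objectAdmin") -> dict:
    """Build one IAM binding that grants `role` to a single service account."""
    return {"role": role, "members": {f"serviceAccount:{service_account_email}"}}


def create_artifact_bucket(bucket_name: str, service_account_email: str,
                           location: str = "US"):
    """Create the bucket with uniform bucket-level access, then grant access."""
    # Imported here so artifact_binding() is usable without the GCP client library.
    from google.cloud import storage

    client = storage.Client()
    bucket = client.bucket(bucket_name)
    # Uniform bucket-level access: permissions come from IAM, not per-object ACLs.
    bucket.iam_configuration.uniform_bucket_level_access_enabled = True
    bucket = client.create_bucket(bucket, location=location)

    # Read-modify-write the bucket's IAM policy to add the new binding.
    policy = bucket.get_iam_policy(requested_policy_version=3)
    policy.bindings.append(artifact_binding(service_account_email))
    bucket.set_iam_policy(policy)
    return bucket
```

For example, `create_artifact_bucket("my-mlflow-artifacts", "mlflow-server@my-project.iam.gserviceaccount.com")` would create the bucket and grant the server's account access; both names are hypothetical.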

Next, create a Cloud SQL instance running either PostgreSQL or MySQL. This serves as the backend store, where MLflow records run parameters, metrics, and metadata. Create a dedicated database and a database user with its own password, and enable private IP so the instance is reachable only from inside your VPC network. This limits access to trusted components within your network.
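MLflow reaches the backend store through a SQLAlchemy database URI. The helper below is a hypothetical sketch of how to assemble one for a Cloud SQL instance reached over its private IP; the `10.0.0.5` address, the user and database names, and the `sslmode=require` default (relevant once SSL is enforced on the instance) are assumptions. In practice, load the password from Secret Manager or an environment variable rather than hard-coding it.

```python
# Sketch: build the SQLAlchemy-style URI that `mlflow server` takes as its
# backend store. Host, database, and credentials are placeholders.
from typing import Optional
from urllib.parse import quote_plus, urlencode


def backend_store_uri(user: str, password: str, host: str, database: str,
                      port: int = 5432, dialect: str = "postgresql",
                      sslmode: Optional[str] = "require") -> str:
    """Return a URI such as postgresql://user:pw@10.0.0.5:5432/mlflow?sslmode=require."""
    # Percent-encode credentials: passwords often contain '@', '/' or ':'.
    creds = f"{quote_plus(user)}:{quote_plus(password)}"
    uri = f"{dialect}://{creds}@{host}:{port}/{database}"
    # sslmode is a libpq (PostgreSQL) option, so it is only added for PostgreSQL.
    if sslmode and dialect.startswith("postgresql"):
        uri += "?" + urlencode({"sslmode": sslmode})
    return uri
```

For MySQL, pass a driver-qualified dialect such as `mysql+pymysql` and `port=3306`.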

Then deploy the MLflow tracking server on Compute Engine. Choose a virtual machine sized for your expected load, install Python, MLflow, a database driver (such as psycopg2 for PostgreSQL or PyMySQL for MySQL), and the google-cloud-storage client library, and configure the server to point to your Cloud SQL database and Cloud Storage bucket. Make sure the VM's service account has the permissions it needs to access both services.
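On the VM, the server is started with the `mlflow server` command. The sketch below builds that command in Python, assuming MLflow 2.x flag names (`--backend-store-uri`, `--serve-artifacts`, `--artifacts-destination`); the bucket path and port are placeholders. Proxying artifacts through the server is a design choice that matches giving only the server's service account bucket access; the alternative, `--default-artifact-root gs://...`, has each client write to Cloud Storage with its own credentials.

```python
# Sketch: start the MLflow tracking server on a Compute Engine VM.
# Assumes mlflow, a database driver (e.g. psycopg2-binary) and
# google-cloud-storage are pip-installed on the VM.
import shlex
import subprocess
from typing import List


def mlflow_server_command(backend_store_uri: str, artifacts_destination: str,
                          host: str = "127.0.0.1", port: int = 5000) -> List[str]:
    """Build the argv for `mlflow server` pointing at Cloud SQL and Cloud Storage."""
    return [
        "mlflow", "server",
        "--backend-store-uri", backend_store_uri,  # Cloud SQL: runs, params, metrics
        # Proxy artifact traffic through the server, so only the VM's service
        # account needs access to the gs:// destination.
        "--serve-artifacts",
        "--artifacts-destination", artifacts_destination,
        # 127.0.0.1 keeps the server private behind a local reverse proxy;
        # use 0.0.0.0 only if firewall rules restrict who can reach the port.
        "--host", host,
        "--port", str(port),
    ]


def start_server(cmd: List[str]) -> subprocess.Popen:
    """Launch the server as a child process (a systemd unit is more robust)."""
    print("Starting:", shlex.join(cmd))
    return subprocess.Popen(cmd)
```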

Alternatively, deploy a containerized MLflow server on GKE. Kubernetes gives you flexibility in scaling and managing secrets, and Helm charts can automate the deployment. Once everything is wired together, you'll have a browser-accessible MLflow dashboard backed by Google Cloud infrastructure: the full capability of MLflow, now in a scalable, production-ready environment.
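Helm charts are one route. To show what such a deployment boils down to, here is a plain Kubernetes Deployment generated from Python; this is a swapped-in technique, not a Helm chart. The image, the `mlflow-db` Secret, and its `backend-store-uri` key are assumptions. You would still need a Service (and an Ingress or load balancer for external access), and the pod's identity would need bucket access, for example through Workload Identity.

```python
# Sketch: generate a Kubernetes Deployment for the MLflow server on GKE.
# The image name and Secret name are hypothetical placeholders.
import json


def mlflow_deployment(image: str, artifacts_destination: str,
                      secret_name: str = "mlflow-db", replicas: int = 1) -> dict:
    labels = {"app": "mlflow"}
    container = {
        "name": "mlflow",
        # Assumed to be a custom image with mlflow, psycopg2 and google-cloud-storage.
        "image": image,
        "command": [
            "mlflow", "server",
            # Kubernetes expands $(VAR) from the container's env before starting it.
            "--backend-store-uri", "$(BACKEND_STORE_URI)",
            "--serve-artifacts",
            "--artifacts-destination", artifacts_destination,
            "--host", "0.0.0.0",  # must listen on all interfaces inside the pod
            "--port", "5000",
        ],
        "ports": [{"containerPort": 5000}],
        "env": [{
            "name": "BACKEND_STORE_URI",
            # The DB URI (including its password) comes from a Kubernetes Secret.
            "valueFrom": {"secretKeyRef": {"name": secret_name,
                                           "key": "backend-store-uri"}},
        }],
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": "mlflow", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {"metadata": {"labels": labels},
                         "spec": {"containers": [container]}},
        },
    }


def to_manifest(deployment: dict) -> str:
    """Serialize to JSON, which `kubectl apply -f` accepts as well as YAML."""
    return json.dumps(deployment, indent=2)
```

Printing `to_manifest(...)` and piping it to `kubectl apply -f -` would create the Deployment.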

Enhancing Security and Maintainability

Security and long-term maintenance are crucial when transitioning MLflow into production on GCP. Without solid protections and automation, a helpful tool can quickly become a liability.

To secure your setup, start by requiring SSL/TLS connections on your Cloud SQL instance, which protects your metadata in transit. Run the tracking server behind a reverse proxy such as NGINX to terminate HTTPS, and optionally enable Identity-Aware Proxy (IAP) on a load balancer in front of it for user-level access control. Add firewall rules that restrict network access to the ports the server actually needs.
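With IAP in front of the server, scripts and notebooks also have to authenticate. A minimal sketch, assuming the server sits behind an IAP-enabled load balancer and the caller's service account holds the IAP-secured Web App User role (`roles/iap.httpsResourceAccessor`). The audience is the OAuth client ID that IAP uses, shown here as a placeholder. The token fetcher is injectable so the logic can be exercised without credentials.

```python
# Sketch: let an MLflow client call a tracking server protected by IAP.
# MLflow sends MLFLOW_TRACKING_TOKEN as an "Authorization: Bearer" header,
# and IAP accepts Google-signed OIDC ID tokens in that header.
import os
from typing import Callable, Optional


def configure_iap_auth(audience: str,
                       fetch_token: Optional[Callable[[str], str]] = None) -> str:
    """Fetch an ID token for the IAP OAuth client ID and hand it to MLflow."""
    if fetch_token is None:
        # Lazy import; needs google-auth and service-account credentials
        # (a key file or the Compute Engine / GKE metadata server).
        from google.auth.transport.requests import Request
        from google.oauth2 import id_token

        def fetch_token(aud: str) -> str:
            return id_token.fetch_id_token(Request(), aud)

    token = fetch_token(audience)
    # ID tokens expire after about an hour; refresh before long-running jobs.
    os.environ["MLFLOW_TRACKING_TOKEN"] = token
    return token
```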

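Your artifact store in Cloud Storage also needs care. Set up lifecycle rules that automatically move old experiment artifacts to a colder storage class or delete them, which keeps storage costs under control. Enable Cloud Audit Logs to track access as well; Data Access logs for Cloud Storage are off by default, so turn them on explicitly if you need a record of who reads and writes artifacts.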
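A retention policy like this can be applied with the `google-cloud-storage` client. In this sketch, "archive" is interpreted as moving objects to the Coldline storage class; the 90/365-day thresholds and the choice of Coldline over the Archive class (which has a 365-day minimum storage duration) are assumptions to tune.

```python
# Sketch: lifecycle rules for the MLflow artifact bucket.
# The 90/365-day thresholds are placeholders; tune them to your retention needs.
from typing import Dict, List


def lifecycle_rules(coldline_after_days: int = 90,
                    delete_after_days: int = 365) -> List[Dict]:
    """Return rules in Cloud Storage's JSON lifecycle format."""
    # Coldline has a 90-day minimum storage duration; keeping at least 90 days
    # between the two thresholds avoids early-deletion charges.
    if delete_after_days - coldline_after_days < 90:
        raise ValueError("leave at least 90 days between Coldline and deletion")
    return [
        {"action": {"type": "SetStorageClass", "storageClass": "COLDLINE"},
         "condition": {"age": coldline_after_days}},
        {"action": {"type": "Delete"}, "condition": {"age": delete_after_days}},
    ]


def apply_lifecycle(bucket_name: str, rules: List[Dict]) -> None:
    from google.cloud import storage  # lazy import; needs google-cloud-storage

    bucket = storage.Client().get_bucket(bucket_name)
    bucket.lifecycle_rules = rules  # replaces any rules already on the bucket
    bucket.patch()  # persist the change
```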

IAM roles should be minimal. Don’t assign broad permissions to your MLflow server—create a dedicated service account with access only to required resources. This minimizes risk and improves visibility.
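One way to keep roles minimal is to audit the policy periodically. The sketch below takes the `bindings` list from an IAM policy (for example, the JSON printed by `gcloud projects get-iam-policy PROJECT_ID --format=json`) and reports anything the MLflow service account holds beyond an allow-list. The allow-list is an assumption for this particular setup, not an official baseline.

```python
# Sketch: flag roles held by the MLflow service account beyond an allow-list.
# The allow-list below is an assumption for this setup, not an official baseline.
from typing import Iterable, List, Mapping

ALLOWED_ROLES = frozenset({
    "roles/storage.objectAdmin",  # read/write artifacts in the bucket
    "roles/cloudsql.client",      # needed for the Cloud SQL Auth Proxy/connectors
})


def excess_roles(bindings: Iterable[Mapping], member: str,
                 allowed: Iterable[str] = ALLOWED_ROLES) -> List[str]:
    """Return the roles `member` holds that fall outside `allowed`."""
    held = {b["role"] for b in bindings if member in b.get("members", ())}
    return sorted(held - set(allowed))
```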

Also version your runtime environment with Docker images or pinned virtual environments, so each experiment log can be tied to specific code and package versions. Finally, automate the infrastructure with Terraform or Deployment Manager. Infrastructure as code keeps environments consistent and reduces manual errors as your team or infrastructure grows.
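Alongside a pinned image, the versions themselves can be recorded on each run. A minimal sketch using the standard library's `importlib.metadata` and MLflow's `set_tags`; the `env.*` tag names are hypothetical conventions, not something MLflow defines.

```python
# Sketch: tag each MLflow run with interpreter and package versions so results
# can be traced to the environment that produced them. Tag names are hypothetical.
import platform
from importlib import metadata
from typing import Dict, Iterable


def environment_tags(packages: Iterable[str]) -> Dict[str, str]:
    tags = {"env.python_version": platform.python_version()}
    for name in packages:
        try:
            tags[f"env.pkg.{name}"] = metadata.version(name)
        except metadata.PackageNotFoundError:
            tags[f"env.pkg.{name}"] = "not-installed"
    return tags


def log_environment(packages: Iterable[str]) -> None:
    """Attach the tags to the active run (MLflow starts one if none is active)."""
    import mlflow  # lazy import; requires the mlflow package

    mlflow.set_tags(environment_tags(packages))
```

Calling `log_environment(["mlflow", "scikit-learn"])` inside `mlflow.start_run()` would record those versions on the run.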

From Tracking to Production

Once MLflow is running on GCP, the next step is integrating it into your training and deployment workflows. By setting the MLflow tracking URI to your cloud server, you can log experiments directly from any environment: local scripts, Vertex AI Workbench notebooks, or remote clusters. The Python API makes it easy to track parameters, metrics, and artifacts in one central place.
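A minimal sketch of logging a run against the remote server, using MLflow's fluent Python API. The fallback URL, experiment name, parameter, and metric values are hypothetical placeholders, not results.

```python
# Sketch: log a run to the GCP-hosted tracking server from a script or notebook.
# The fallback URL, experiment name, and logged values are hypothetical.
import os
import tempfile


def resolve_tracking_uri(default: str = "http://localhost:5000") -> str:
    # MLFLOW_TRACKING_URI is the environment variable MLflow itself reads.
    return os.environ.get("MLFLOW_TRACKING_URI", default)


def log_example_run(experiment: str = "gcp-demo") -> str:
    import mlflow  # lazy import; requires the mlflow package

    mlflow.set_tracking_uri(resolve_tracking_uri())
    mlflow.set_experiment(experiment)  # created on first use if it doesn't exist
    with mlflow.start_run() as run:
        mlflow.log_param("learning_rate", 0.01)
        mlflow.log_metric("val_accuracy", 0.93)
        with tempfile.TemporaryDirectory() as tmp:
            path = os.path.join(tmp, "notes.txt")
            with open(path, "w") as f:
                f.write("example artifact\n")
            mlflow.log_artifact(path)  # stored in the server's artifact store
    return run.info.run_id
```

Setting `MLFLOW_TRACKING_URI` to your server's URL before calling `log_example_run()` sends the run there; MLflow would read the variable on its own, and resolving it explicitly just makes the fallback visible.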

For deployment, models in the Model Registry can be served as a REST endpoint with MLflow's built-in `mlflow models serve` command, or exported to Vertex AI or Cloud Run. This lets you choose between a fully managed service and a custom deployment path. The surrounding pipeline can draw on BigQuery for data storage, Pub/Sub to trigger pipelines, and Dataflow for transformations.
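For the REST route, a registered model can be served with `mlflow models serve -m models:/<name>/<version> -p 5001`, which exposes a `POST /invocations` endpoint. The client below is a minimal sketch using only the standard library; it assumes MLflow 2.x's `dataframe_split` input format, a model that takes tabular input, and a placeholder endpoint URL.

```python
# Sketch: score rows against a model served by `mlflow models serve`.
# Assumes MLflow 2.x's "dataframe_split" request format; the URL is a placeholder.
import json
import urllib.request
from typing import Any, Dict, Sequence


def invocation_payload(columns: Sequence[str],
                       rows: Sequence[Sequence[Any]]) -> Dict[str, Any]:
    """Build a pandas split-orientation payload: column names plus row values."""
    return {"dataframe_split": {"columns": list(columns),
                                "data": [list(row) for row in rows]}}


def predict(endpoint: str, columns: Sequence[str],
            rows: Sequence[Sequence[Any]]) -> Any:
    """POST the rows to <endpoint>/invocations and return the decoded JSON reply."""
    body = json.dumps(invocation_payload(columns, rows)).encode("utf-8")
    request = urllib.request.Request(
        endpoint.rstrip("/") + "/invocations",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read().decode("utf-8"))
```

Against a local server, `predict("http://127.0.0.1:5001", ["age", "income"], [[34, 52000]])` would return the model's JSON response; the column names are hypothetical.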

What makes MLflow on GCP so effective is its modularity. You’re not locked into a rigid setup. Instead, you get a reproducible, auditable system that evolves with your needs—without losing sight of collaboration or control.

Conclusion

Setting up MLflow on GCP provides the structure and flexibility needed to manage machine learning workflows at scale. With proper configuration, you gain reliable tracking, secure artifact storage, and smooth team collaboration, and GCP's integrated tools make the process efficient without locking you into a rigid system. Whether you're a solo developer or part of a larger team, this setup lets you focus on building and improving models while the infrastructure handles tracking, storage, and access control.
