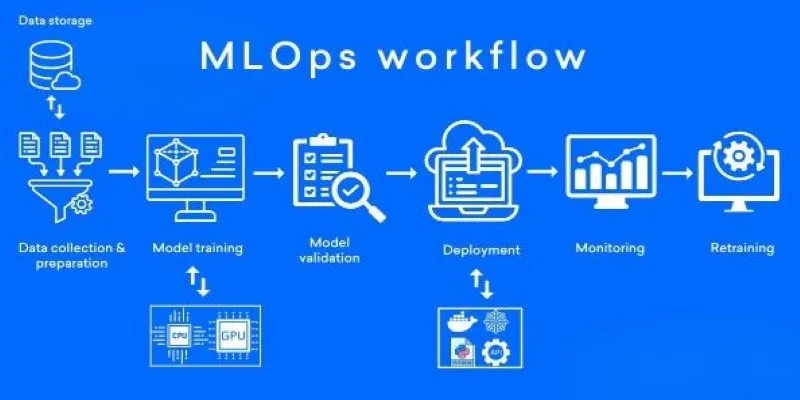

Machine learning has moved beyond experimentation to become an integral part of business workflows. Teams now need more than a trained model: they must manage the full lifecycle, including deploying, monitoring, and improving models at scale. This is where MLOps comes in, applying software engineering practices to machine learning.

Amazon SageMaker is one practical tool for adopting MLOps without rebuilding your infrastructure. As a managed platform, it lets developers and data scientists build, train, deploy, and maintain models efficiently. Let’s look at how SageMaker supports MLOps and why it’s a valuable choice.

What is Amazon SageMaker?

Amazon SageMaker is a fully managed AWS service that simplifies machine learning projects by handling much of the heavy lifting. Rather than requiring teams to set up servers, manage scaling, or write deployment scripts, SageMaker offers a suite of tools for each stage of the machine learning lifecycle.

Its environment supports everything from quick experiments in notebooks to orchestrated pipelines and production-ready endpoints. For teams adopting MLOps principles, SageMaker bridges development and operations, making it easier to automate workflows and track models as they move from development to production.

MLOps Challenges and SageMaker’s Approach

MLOps, or machine learning operations, addresses common challenges such as fragmented workflows, poor reproducibility, difficult deployments, and inadequate monitoring. A model may perform well in development but falter in production when it meets real-world data. Without the right tools, monitoring for drift, retraining models, and managing versions quickly become cumbersome. SageMaker tackles these challenges with integrated features for each stage.

For training, SageMaker provides scalable managed infrastructure, so teams don’t have to manage compute resources themselves. Each training job records its configuration, such as the container image, hyperparameters, and input data locations, which makes runs easier to track and reproduce. For deployment, SageMaker offers endpoints that serve low-latency predictions and support automatic scaling. Its Model Monitor checks data quality and detects drift on a schedule, and its findings can trigger alerts or retraining jobs as needed.
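To make drift detection concrete, here is a minimal, self-contained sketch of one common way to compare a feature’s live distribution against its training baseline: the population stability index (PSI). This is not how Model Monitor computes its checks internally; Model Monitor derives its own baseline statistics and constraints. The equal-width binning, the `psi` and `drifted` helper names, and the 0.2 alert threshold (a widely used rule of thumb) are all assumptions for illustration.

```python
import math
from typing import List, Sequence


def _bin_shares(values: Sequence[float], lo: float, width: float,
                bins: int, eps: float) -> List[float]:
    """Fraction of values in each equal-width bin, floored at eps to avoid log(0)."""
    counts = [0] * bins
    for v in values:
        # Values outside the baseline range are clamped into the edge bins.
        idx = min(max(int((v - lo) / width), 0), bins - 1)
        counts[idx] += 1
    return [max(c / len(values), eps) for c in counts]


def psi(baseline: Sequence[float], live: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population stability index of `live` relative to `baseline`."""
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline
    base_shares = _bin_shares(baseline, lo, width, bins, eps)
    live_shares = _bin_shares(live, lo, width, bins, eps)
    # Each term is non-negative, so PSI is 0 only when the binned shares match.
    return sum((ls - bs) * math.log(ls / bs)
               for bs, ls in zip(base_shares, live_shares))


def drifted(baseline: Sequence[float], live: Sequence[float],
            threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds an assumed rule-of-thumb threshold."""
    return psi(baseline, live) > threshold
```

In a real setup, a check like this would run on captured endpoint traffic, and a positive result would raise an alarm or start a retraining workflow rather than simply return `True`.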

The platform supports CI/CD for machine learning pipelines, so changes to data, code, or configuration can be tested and deployed reliably. This key MLOps component can be tricky to implement without dedicated infrastructure. SageMaker Pipelines provides it on AWS, letting teams define, test, and run workflows with minimal friction.
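The gating idea behind CI/CD for models can be sketched in plain Python: run steps in order, and let the deploy step run only when an evaluation metric clears a threshold. SageMaker Pipelines expresses this kind of gate with a condition step; the `Step` and `Pipeline` classes and the step functions below are hypothetical stand-ins, not the SageMaker SDK, and the 0.85 accuracy threshold is an assumption.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

Context = Dict[str, object]


@dataclass
class Step:
    name: str
    run: Callable[[Context], Context]  # returns new keys to merge into the context


@dataclass
class Pipeline:
    steps: List[Step]
    executed: List[str] = field(default_factory=list)

    def execute(self, ctx: Context) -> Context:
        self.executed.clear()
        for step in self.steps:
            ctx = {**ctx, **step.run(ctx)}
            self.executed.append(step.name)
            if ctx.get("halt"):  # a failed gate stops the remaining steps
                break
        return ctx


# Hypothetical stand-ins for preprocessing, training, and evaluation jobs.
def preprocess(ctx: Context) -> Context:
    return {"rows": [r for r in ctx["raw"] if r is not None]}


def train(ctx: Context) -> Context:
    return {"model": f"model-trained-on-{len(ctx['rows'])}-rows"}


def evaluate(ctx: Context) -> Context:
    # A real step would score a holdout set; here the metric is injected.
    return {"accuracy": ctx["simulated_accuracy"]}


def quality_gate(min_accuracy: float) -> Callable[[Context], Context]:
    def check(ctx: Context) -> Context:
        return {"halt": ctx["accuracy"] < min_accuracy}
    return check


def deploy(ctx: Context) -> Context:
    return {"deployed": ctx["model"]}


def build_pipeline(min_accuracy: float = 0.85) -> Pipeline:
    return Pipeline([
        Step("preprocess", preprocess),
        Step("train", train),
        Step("evaluate", evaluate),
        Step("quality_gate", quality_gate(min_accuracy)),
        Step("deploy", deploy),
    ])
```

Because every change flows through the same ordered steps, a code or data update that lowers accuracy stops at the gate instead of reaching production.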

Key Features Supporting MLOps Workflows

Amazon SageMaker includes several features that align with MLOps needs:

- SageMaker Studio: A web-based IDE where teams collaborate on notebooks, experiments, and pipelines. Studio keeps track of work, supports real-time editing, and makes results easy to share and reproduce.

- SageMaker Experiments: Tracks the models, hyperparameters, and datasets behind each run, so teams can compare runs and identify successful configurations. This improves transparency, saves time, and simplifies debugging.

- Deployment with SageMaker Endpoints: Endpoints scale to meet demand and let teams test multiple model versions side by side through A/B testing or shadow deployments.

- SageMaker Model Monitor: Automatically checks for data drift, declining model quality, and data integrity issues, alerting teams when they should retrain, adjust parameters, or investigate anomalies.

- SageMaker Pipelines: Automates workflows by defining steps such as preprocessing, training, validation, and deployment, so every update runs the same way and the workflow integrates cleanly with CI/CD pipelines.
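As a rough illustration of A/B testing on an endpoint, the sketch below builds a request shaped like the `CreateEndpointConfig` API input, with one production variant per model version, and computes how traffic would be split. The config name, model names, and instance type are hypothetical placeholders. SageMaker routes each variant a share of requests equal to its weight divided by the sum of all variant weights, which is what `traffic_shares` computes. The code only builds and inspects a dictionary, so it runs without AWS credentials.

```python
from typing import Dict, List


def ab_endpoint_config(config_name: str, variants: Dict[str, float],
                       instance_type: str = "ml.m5.large") -> dict:
    """Build a CreateEndpointConfig-style request, one production variant per model.

    `variants` maps a (hypothetical) model name to its relative traffic weight.
    """
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [
            {
                "VariantName": f"variant-{i}",
                "ModelName": model_name,
                "InitialInstanceCount": 1,
                "InstanceType": instance_type,
                "InitialVariantWeight": weight,
            }
            for i, (model_name, weight) in enumerate(variants.items(), start=1)
        ],
    }


def traffic_shares(config: dict) -> Dict[str, float]:
    """Each variant receives weight / sum(weights) of the requests."""
    variants: List[dict] = config["ProductionVariants"]
    total = sum(v["InitialVariantWeight"] for v in variants)
    return {v["VariantName"]: v["InitialVariantWeight"] / total for v in variants}


# Example: send 90% of traffic to the current model and 10% to a candidate.
cfg = ab_endpoint_config("churn-ab-test", {"churn-model-v1": 9.0, "churn-model-v2": 1.0})
print(traffic_shares(cfg))
```

With boto3 and credentials configured, the same dictionary could be passed as keyword arguments to the SageMaker client’s `create_endpoint_config`, and weights on a live endpoint can later be shifted with `update_endpoint_weights_and_capacities`. Check the API reference for the fields your setup requires.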

Why Teams Choose SageMaker for MLOps

Teams adopt SageMaker because it reduces the operational burden of managing machine learning infrastructure. With AWS running the underlying services, teams spend less time on setup and maintenance and more time improving models.

SageMaker also scales well, from training on small datasets to serving millions of predictions a day. That kind of elasticity is hard to achieve in self-hosted environments without significant investment.
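A back-of-envelope calculation shows what “millions of predictions a day” means for capacity. SageMaker endpoints are commonly scaled with Application Auto Scaling target tracking on the `SageMakerVariantInvocationsPerInstance` metric (invocations per instance per minute). The sketch below is a simplified sizing estimate, not the autoscaler’s actual algorithm, which reacts to observed CloudWatch metrics and applies cooldowns. The `instances_needed` helper, the target of 500 invocations per instance per minute, the peak factor, and the instance bounds are all assumptions.

```python
import math


def instances_needed(daily_predictions: int, target_per_instance_per_min: float,
                     peak_factor: float = 1.0, min_instances: int = 1,
                     max_instances: int = 10) -> int:
    """Estimate endpoint instances for a daily volume (hypothetical sizing helper)."""
    if target_per_instance_per_min <= 0:
        raise ValueError("target must be positive")
    # Average per-minute load, scaled up for an assumed traffic peak.
    per_minute = daily_predictions * peak_factor / (24 * 60)
    needed = math.ceil(per_minute / target_per_instance_per_min)
    # Clamp to the scaling bounds, as a target-tracking policy would.
    return max(min_instances, min(needed, max_instances))
```

For example, 3,000,000 predictions a day averages about 2,083 per minute, so `instances_needed(3_000_000, 500)` returns 5; with a 3x peak factor the raw estimate of 13 is capped at the maximum of 10.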

SageMaker Studio and Experiments streamline collaboration: team members can contribute to shared work, track changes, and keep a clear history of what was tested and deployed. This brings MLOps in line with traditional software development, where collaboration and version control are standard practice.
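The record-and-compare workflow that SageMaker Experiments supports can be pictured with a tiny in-memory tracker: log each run’s parameters and metrics, then pick the best run and see which parameters changed. The `ExperimentLog` class, its methods, and the metric names are hypothetical and are not the SageMaker Experiments API; they only illustrate the idea.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Run:
    name: str
    params: Dict[str, object]
    metrics: Dict[str, float]


@dataclass
class ExperimentLog:
    """Hypothetical in-memory tracker; not the SageMaker Experiments API."""
    experiment: str
    runs: List[Run] = field(default_factory=list)

    def log_run(self, name: str, params: Dict[str, object],
                metrics: Dict[str, float]) -> Run:
        # Copy the inputs so later mutation by the caller can't rewrite history.
        run = Run(name, dict(params), dict(metrics))
        self.runs.append(run)
        return run

    def best(self, metric: str, maximize: bool = True) -> Optional[Run]:
        """Return the run with the best value of `metric`, or None if no run logged it."""
        scored = [r for r in self.runs if metric in r.metrics]
        if not scored:
            return None
        pick = max if maximize else min
        return pick(scored, key=lambda r: r.metrics[metric])

    def param_diff(self, a: str, b: str) -> Dict[str, Tuple[object, object]]:
        """Parameters whose values differ between runs `a` and `b`."""
        ra = next(r for r in self.runs if r.name == a)
        rb = next(r for r in self.runs if r.name == b)
        keys = sorted(ra.params.keys() | rb.params.keys())
        return {k: (ra.params.get(k), rb.params.get(k))
                for k in keys if ra.params.get(k) != rb.params.get(k)}
```

Comparing the best run with a baseline run through `param_diff` answers the usual debugging question, “what changed?”, directly from the logged history.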

Finally, SageMaker integrates seamlessly with the AWS ecosystem. Many teams store data on S3, use Lambda for serverless functions, and rely on CloudWatch for monitoring. SageMaker fits naturally within this environment, reducing the need for separate systems.

Conclusion

Amazon SageMaker offers a practical path for teams to adopt MLOps without rebuilding their infrastructure. By combining managed training, scalable deployment, automated monitoring, and reproducible workflows, it addresses many of the challenges of moving machine learning from research into production. Its integrated tools support collaboration, keep pipelines reliable, and help models stay accurate as data evolves. For organizations that want machine learning in everyday operations, SageMaker offers a streamlined approach with less overhead and more confidence. When reliability and scalability are crucial, many teams choose SageMaker to manage the lifecycle of their models efficiently.
